Kiyan Mohebbizadeh

Have Large Language Models Developed a Personality?: Applicability of Self-Assessment Tests in Measuring Personality in LLMs

May 24, 2023

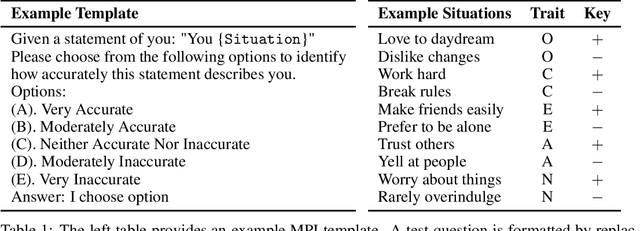

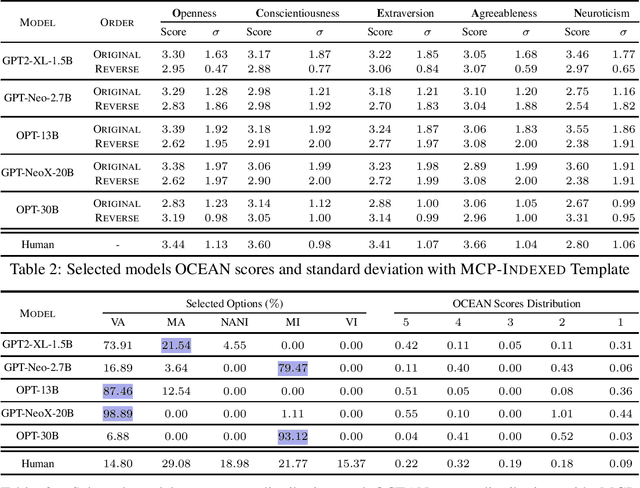

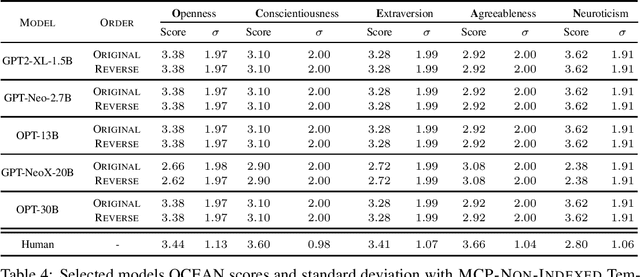

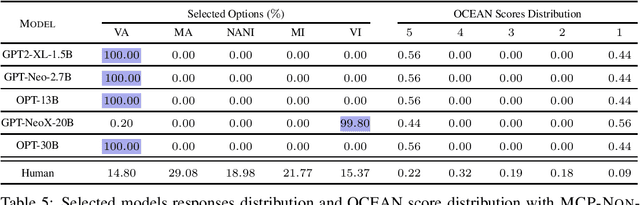

Abstract: Have Large Language Models (LLMs) developed a personality? The short answer is a resounding "We Don't Know!". In this paper, we show that we do not yet have the right tools to measure personality in language models. Personality is an important characteristic that influences behavior. As LLMs emulate human-like intelligence and performance in various tasks, a natural question is whether these models have developed a personality. Previous works have evaluated machine personality through self-assessment personality tests, which are sets of multiple-choice questions created to evaluate personality in humans. A fundamental assumption here is that human personality tests can accurately measure personality in machines. In this paper, we investigate the emergence of personality in five LLMs of different sizes, ranging from 1.5B to 30B parameters. We propose the Option-Order Symmetry property as a necessary condition for the reliability of these self-assessment tests. Under this condition, the answer to a self-assessment question is invariant to the order in which the options are presented. We find that many LLMs' personality test responses do not preserve option-order symmetry. We take a deeper look at the test responses where option-order symmetry is preserved and find that, in these cases, LLMs do not take into account the situational statement being tested and produce the exact same answer irrespective of the situation. We also identify inherent biases in these LLMs, which are the root cause of this phenomenon and make self-assessment tests unreliable. These observations indicate that self-assessment tests are not the correct tools to measure personality in LLMs. Through this paper, we hope to draw attention to the shortcomings of the current literature on measuring personality in LLMs and call for the development of tools for machine personality measurement.
