Kim Khoa Nguyen

Modeling Topological Impact on Node Attribute Distributions in Attributed Graphs

Feb 01, 2026

Abstract:We investigate how the topology of attributed graphs influences the distribution of node attributes. This work offers a novel perspective by treating topology and attributes as structurally distinct but interacting components. We introduce an algebraic approach that combines a graph's topology with the probability distribution of node attributes, resulting in topology-influenced distributions. First, we develop a categorical framework to formalize how a node perceives the graph's topology. We then quantify this point of view and integrate it with the distribution of node attributes to capture topological effects. We interpret these topology-conditioned distributions as approximations of the posteriors $P(\cdot \mid v)$ and $P(\cdot \mid \mathcal{G})$. We further establish a principled sufficiency condition by showing that, on complete graphs, where topology carries no informative structure, our construction recovers the original attribute distribution. To evaluate our approach, we introduce an intentionally simple testbed model, $\textbf{ID}$, and use unsupervised graph anomaly detection as a probing task.

Grothendieck Graph Neural Networks Framework: An Algebraic Platform for Crafting Topology-Aware GNNs

Dec 12, 2024

Abstract:Due to the structural limitations of Graph Neural Networks (GNNs), in particular with respect to conventional neighborhoods, alternative aggregation strategies have recently been investigated. This paper investigates the role of graph structure in message passing, with the aim of incorporating topological characteristics. While the simplicity of neighborhoods remains alluring, we propose a novel perspective by introducing the concept of 'cover' as a generalization of neighborhoods. We design the Grothendieck Graph Neural Networks (GGNN) framework, offering an algebraic platform for creating and refining diverse covers for graphs. This framework translates covers into matrix forms, such as the adjacency matrix, expanding the scope of designing GNN models based on desired message-passing strategies. Leveraging algebraic tools, GGNN facilitates the creation of models that outperform traditional approaches. Based on the GGNN framework, we propose Sieve Neural Networks (SNN), a new GNN model that leverages the notion of sieves from category theory. SNN demonstrates outstanding performance in experiments, particularly on benchmarks designed to test the expressivity of GNNs, and exemplifies the versatility of GGNN in generating novel architectures.

Small Contributions, Small Networks: Efficient Neural Network Pruning Based on Relative Importance

Oct 21, 2024

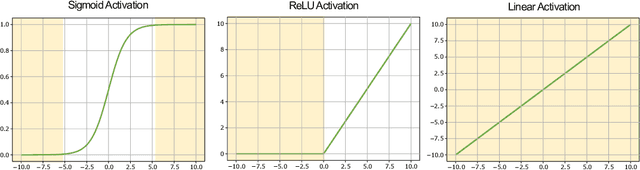

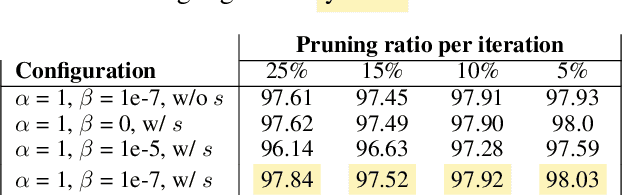

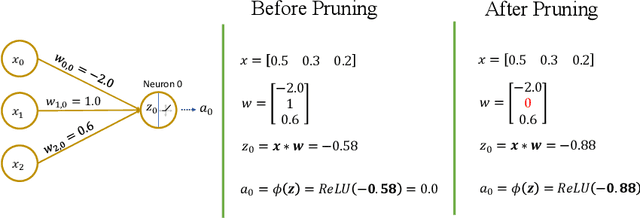

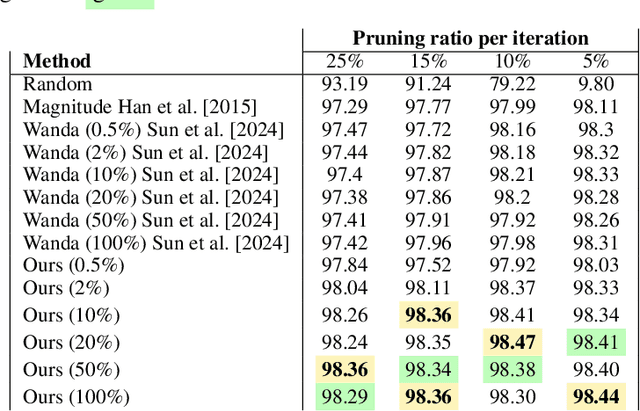

Abstract:Recent advancements have scaled neural networks to unprecedented sizes, achieving remarkable performance across a wide range of tasks. However, deploying these large-scale models on resource-constrained devices poses significant challenges due to substantial storage and computational requirements. Neural network pruning has emerged as an effective technique to mitigate these limitations by reducing model size and complexity. In this paper, we introduce an intuitive and interpretable pruning method based on activation statistics, rooted in information theory and statistical analysis. Our approach leverages the statistical properties of neuron activations to identify and remove weights with minimal contributions to neuron outputs. Specifically, we build a distribution of weight contributions across the dataset and utilize its parameters to guide the pruning process. Furthermore, we propose a Pruning-aware Training strategy that incorporates an additional regularization term to enhance the effectiveness of our pruning method. Extensive experiments on multiple datasets and network architectures demonstrate that our method consistently outperforms several baseline and state-of-the-art pruning techniques.
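The idea of ranking weights by their contribution to a neuron's output can be illustrated with a minimal sketch. This is not the paper's method: the share of `|w_i * x_i|` in the neuron's total, the use of the mean share as the guiding statistic, and the `threshold` value are all assumptions made here for illustration, and the function names are hypothetical.

```python
from statistics import mean

def relative_contributions(weights, inputs):
    """Per-sample share of |w_i * x_i| in the neuron's total absolute contribution."""
    shares = []
    for x in inputs:
        contrib = [abs(w * xi) for w, xi in zip(weights, x)]
        total = sum(contrib)
        # If every term is zero, no weight contributes for this sample.
        shares.append([c / total if total > 0 else 0.0 for c in contrib])
    return shares

def prune_mask(weights, inputs, threshold=0.05):
    """Return a 0/1 mask that keeps weights whose mean share reaches `threshold`.

    The mean is one possible parameter of the contribution distribution;
    choosing it (and the threshold) is an assumption of this sketch.
    """
    shares = relative_contributions(weights, inputs)
    mean_share = [mean(col) for col in zip(*shares)]
    return [1 if m >= threshold else 0 for m in mean_share]

# Toy neuron with three incoming weights and three input samples.
weights = [0.9, -0.01, 0.5]
inputs = [[1.0, 1.0, 1.0], [2.0, 0.5, 1.0], [0.5, 2.0, 2.0]]
mask = prune_mask(weights, inputs)
pruned = [w * m for w, m in zip(weights, mask)]
```

In this toy case the second weight accounts for roughly 1% of the neuron's total contribution on average, so it falls below the assumed 5% threshold and is masked out.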

Multi-IRS Aided Mobile Edge Computing for High Reliability and Low Latency Services

Dec 14, 2023

Abstract:Although multi-access edge computing (MEC) has allowed for computation offloading at the network edge, weak wireless signals in the radio access network caused by obstacles and high network load are still preventing efficient edge computation offloading, especially for user requests with stringent latency and reliability requirements. Intelligent reflective surfaces (IRS) have recently emerged as a technology capable of enhancing the quality of the signals in the radio access network, where passive reflecting elements can be tuned to improve the uplink or downlink signals. Harnessing the IRS's potential in enhancing the performance of edge computation offloading, in this paper, we study the optimized use of a system of multi-IRS along with the design of the offloading (to an edge with multi MECs) and resource allocation parameters for the purpose of minimizing the devices' energy consumption considering 5G services with stringent latency and reliability requirements. After presenting our non-convex mathematical problem, we propose a suboptimal solution based on alternating optimization where we divide the problem into sub-problems which are then solved separately. Specifically, the offloading decision is solved through a matching game algorithm, and then the IRS phase shifts and resource allocation optimizations are solved in an alternating fashion using the Difference of Convex approach. The obtained results demonstrate the gains both in energy and network resources and highlight the IRS's influence on the design of the MEC parameters.

Age of Processing-Based Data Offloading for Autonomous Vehicles in Multi-RATs Open RAN

Aug 14, 2023

Abstract:Today, vehicles use smart sensors to collect data from the road environment. This data is often processed onboard the vehicles, using expensive hardware. Such onboard processing increases the vehicle's cost, quickly drains its battery, and exhausts its computing resources. Therefore, offloading tasks onto the cloud is required. Still, data offloading is challenging due to low latency requirements for safe and reliable vehicle driving decisions. Moreover, age of processing was not considered in prior research dealing with low-latency offloading for autonomous vehicles. This paper proposes an age of processing-based offloading approach for autonomous vehicles using unsupervised machine learning, Multi-Radio Access Technologies (multi-RATs), and Edge Computing in Open Radio Access Network (O-RAN). We design a collaboration space of edge clouds to process data in proximity to autonomous vehicles. To reduce the variation in offloading delay, we propose a new communication planning approach that enables the vehicle to optimally preselect the available RATs such as Wi-Fi, LTE, or 5G to offload tasks to edge clouds when its local resources are insufficient. We formulate an optimization problem for age-based offloading that minimizes the elapsed time between generating tasks and receiving the computation output. To handle this non-convex problem, we develop a surrogate problem. Then, we use the Lagrangian method to transform the surrogate problem into an unconstrained optimization problem and apply the dual decomposition method. The simulation results show that our approach significantly reduces the age of processing in data offloading, with a 90.34% improvement over a similar method.

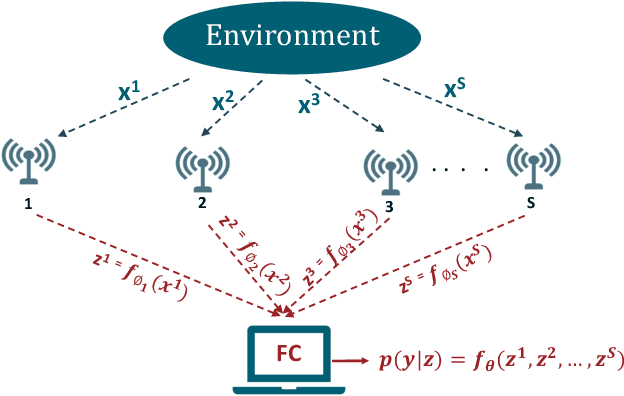

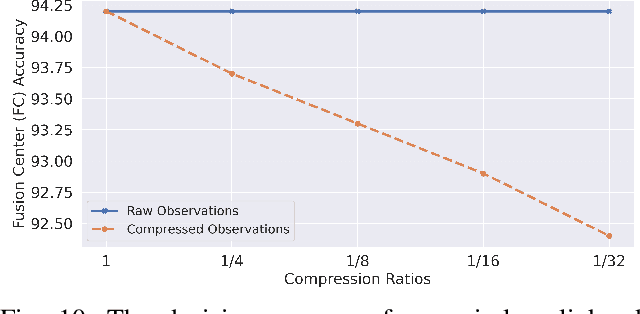

A Learning Framework for Bandwidth-Efficient Distributed Inference in Wireless IoT

Mar 17, 2022

Abstract:In wireless Internet of things (IoT), the sensors usually have limited bandwidth and power resources. Therefore, in a distributed setup, each sensor should compress and quantize the sensed observations before transmitting them to a fusion center (FC) where a global decision is inferred. Most of the existing compression techniques and entropy quantizers consider only the reconstruction fidelity as a metric, which means they decouple the compression from the sensing goal. In this work, we argue that data compression mechanisms and entropy quantizers should be co-designed with the sensing goal, specifically for machine-consumed data. To this end, we propose a novel deep learning-based framework for compressing and quantizing the observations of correlated sensors. Instead of maximizing the reconstruction fidelity, our objective is to compress the sensor observations in a way that maximizes the accuracy of the inferred decision (i.e., sensing goal) at the FC. Unlike prior work, we do not impose any assumptions on the distribution of the observations, which emphasizes the wide applicability of our framework. We also propose a novel loss function that keeps the model focused on learning complementary features at each sensor. The results show the superior performance of our framework compared to other benchmark models.

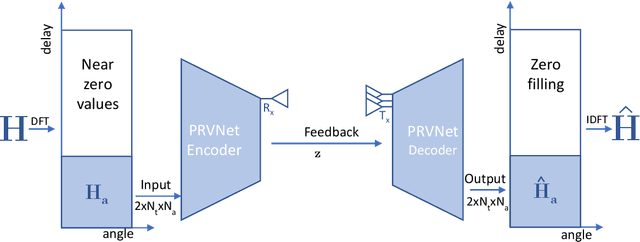

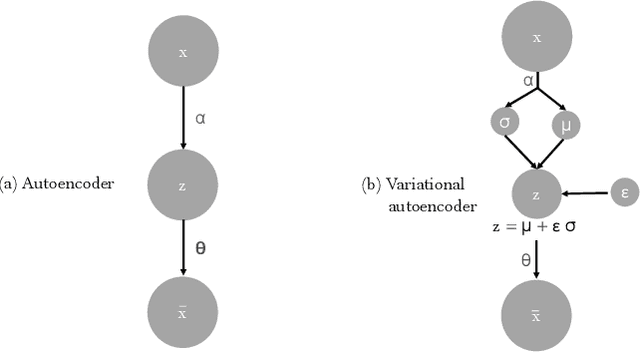

PRVNet: Variational Autoencoders for Massive MIMO CSI Feedback

Nov 09, 2020

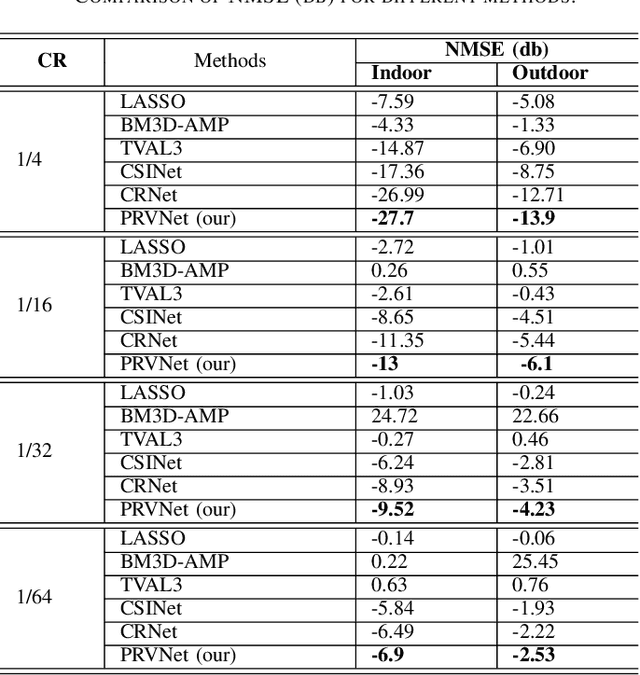

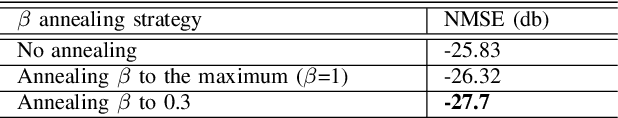

Abstract:In a frequency division duplexing multiple-input multiple-output (FDD-MIMO) system, the user equipment (UE) sends the downlink channel state information (CSI) to the base station for performance improvement. However, with the growing complexity of MIMO systems, this feedback becomes expensive and has a negative impact on the bandwidth. Although this problem has been widely studied in the literature, the noisy nature of the feedback channel has received less consideration. In this paper, we introduce PRVNet, a neural architecture based on variational autoencoders (VAE). VAEs have gained considerable attention in many fields (e.g., image processing, language models, or recommendation systems). However, they have received less attention in the communication domain in general and in the CSI feedback problem specifically. We also introduce a different regularization parameter for the learning objective, which proved to be crucial for achieving competitive performance. In addition, we provide an efficient way to tune this parameter using KL-annealing. Empirically, we show that the proposed model significantly outperforms the state of the art, including two neural network approaches. The proposed model also proves to be more robust against different levels of noise.
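KL-annealing in general means weighting the KL term of a VAE objective by a factor that grows during training. The sketch below shows one common variant, a linear warm-up, together with the closed-form KL divergence between a diagonal Gaussian and a standard normal. The linear schedule, `warmup_steps`, `beta_max`, and the function names are assumptions for illustration and are not taken from PRVNet.

```python
import math

def kl_weight(step, warmup_steps, beta_max=1.0):
    """Linearly ramp the KL weight from 0 to beta_max over warmup_steps (assumed schedule)."""
    if warmup_steps <= 0:
        return beta_max
    return beta_max * min(1.0, step / warmup_steps)

def gaussian_kl(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ) in closed form."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))

def vae_loss(recon_error, mu, log_var, step, warmup_steps, beta_max=1.0):
    """Reconstruction error plus the annealed KL regularizer."""
    beta = kl_weight(step, warmup_steps, beta_max)
    return recon_error + beta * gaussian_kl(mu, log_var)

# Halfway through an assumed 100-step warm-up, the KL term is weighted by 0.5.
loss_mid = vae_loss(1.0, [1.0], [0.0], step=50, warmup_steps=100)
```

Early in training the small weight lets the model focus on reconstruction; the regularizer reaches full strength once `step` passes `warmup_steps`.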
