Khadija Iddrisu

Evaluating Image-Based Face and Eye Tracking with Event Cameras

Aug 19, 2024

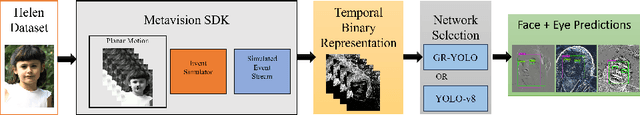

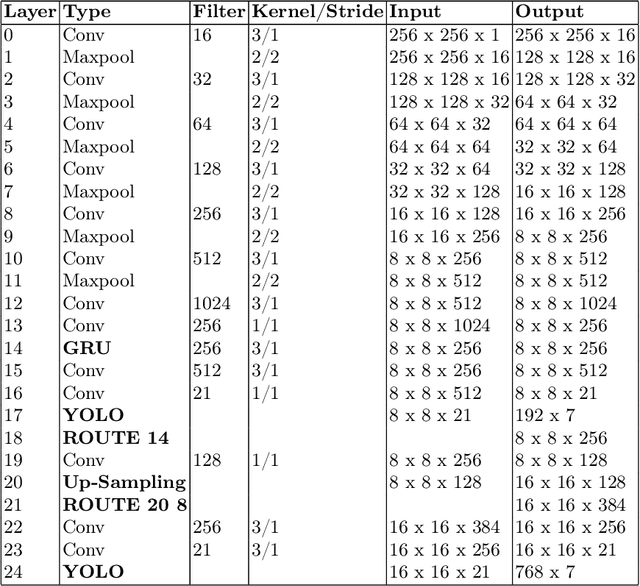

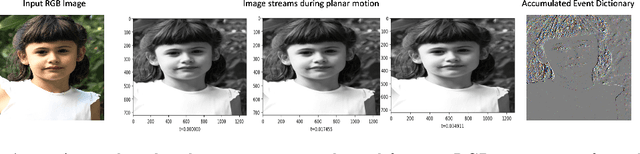

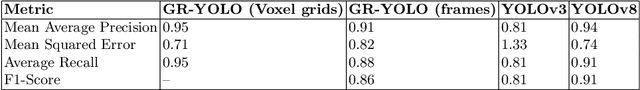

Abstract:Event Cameras, also known as Neuromorphic sensors, capture changes in local light intensity at the pixel level, producing asynchronously generated data termed ``events''. This distinct data format mitigates common issues observed in conventional cameras, like under-sampling when capturing fast-moving objects, thereby preserving critical information that might otherwise be lost. However, leveraging this data often necessitates the development of specialized, handcrafted event representations that can integrate seamlessly with conventional Convolutional Neural Networks (CNNs), considering the unique attributes of event data. In this study, We evaluate event-based Face and Eye tracking. The core objective of our study is to showcase the viability of integrating conventional algorithms with event-based data, transformed into a frame format while preserving the unique benefits of event cameras. To validate our approach, we constructed a frame-based event dataset by simulating events between RGB frames derived from the publicly accessible Helen Dataset. We assess its utility for face and eye detection tasks through the application of GR-YOLO -- a pioneering technique derived from YOLOv3. This evaluation includes a comparative analysis with results derived from training the dataset with YOLOv8. Subsequently, the trained models were tested on real event streams from various iterations of Prophesee's event cameras and further evaluated on the Faces in Event Stream (FES) benchmark dataset. The models trained on our dataset shows a good prediction performance across all the datasets obtained for validation with the best results of a mean Average precision score of 0.91. Additionally, The models trained demonstrated robust performance on real event camera data under varying light conditions.

A Framework for Pupil Tracking with Event Cameras

Jul 23, 2024Abstract:Saccades are extremely rapid movements of both eyes that occur simultaneously, typically observed when an individual shifts their focus from one object to another. These movements are among the swiftest produced by humans and possess the potential to achieve velocities greater than that of blinks. The peak angular speed of the eye during a saccade can reach as high as 700{\deg}/s in humans, especially during larger saccades that cover a visual angle of 25{\deg}. Previous research has demonstrated encouraging outcomes in comprehending neurological conditions through the study of saccades. A necessary step in saccade detection involves accurately identifying the precise location of the pupil within the eye, from which additional information such as gaze angles can be inferred. Conventional frame-based cameras often struggle with the high temporal precision necessary for tracking very fast movements, resulting in motion blur and latency issues. Event cameras, on the other hand, offer a promising alternative by recording changes in the visual scene asynchronously and providing high temporal resolution and low latency. By bridging the gap between traditional computer vision and event-based vision, we present events as frames that can be readily utilized by standard deep learning algorithms. This approach harnesses YOLOv8, a state-of-the-art object detection technology, to process these frames for pupil tracking using the publicly accessible Ev-Eye dataset. Experimental results demonstrate the framework's effectiveness, highlighting its potential applications in neuroscience, ophthalmology, and human-computer interaction.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge