Keyang Zhang

Propose and Rectify: A Forensics-Driven MLLM Framework for Image Manipulation Localization

Aug 25, 2025

Abstract: The increasing sophistication of image manipulation techniques demands robust forensic solutions that can both reliably detect alterations and precisely localize tampered regions. Recent Multimodal Large Language Models (MLLMs) show promise by leveraging world knowledge and semantic understanding for context-aware detection, yet they struggle with perceiving subtle, low-level forensic artifacts crucial for accurate manipulation localization. This paper presents a novel Propose-Rectify framework that effectively bridges semantic reasoning with forensic-specific analysis. In the proposal stage, our approach utilizes a forensic-adapted LLaVA model to generate initial manipulation analysis and preliminary localization of suspicious regions based on semantic understanding and contextual reasoning. In the rectification stage, we introduce a Forensics Rectification Module that systematically validates and refines these initial proposals through multi-scale forensic feature analysis, integrating technical evidence from several specialized filters. Additionally, we present an Enhanced Segmentation Module that incorporates critical forensic cues into SAM's encoded image embeddings, thereby overcoming inherent semantic biases to achieve precise delineation of manipulated regions. By synergistically combining advanced multimodal reasoning with established forensic methodologies, our framework ensures that initial semantic proposals are systematically validated and enhanced through concrete technical evidence, resulting in comprehensive detection accuracy and localization precision. Extensive experimental validation demonstrates state-of-the-art performance across diverse datasets with exceptional robustness and generalization capabilities.

Image Provenance Analysis via Graph Encoding with Vision Transformer

Aug 26, 2024

Abstract: Recent advances in AI-powered image editing tools have significantly lowered the barrier to image modification, raising pressing security concerns, particularly those related to the spread of misinformation and disinformation on social platforms. Image provenance analysis is crucial in this context, as it identifies relevant images within a database and constructs a relationship graph by mining hidden manipulation and transformation cues, thereby providing concrete evidence chains. This paper introduces a novel end-to-end deep learning framework designed to explore the structural information of provenance graphs. Our proposed method differs from previous approaches in two main ways. First, unlike earlier methods that rely on prior knowledge and have limited generalizability, our framework relies on a patch attention mechanism to capture image provenance clues for local manipulations and global transformations, thereby enhancing graph construction performance. Second, previous methods primarily focus on identifying tampering traces between image pairs and often overlook the hidden information embedded in the topology of the provenance graph. Our approach aligns the model training objectives with the final graph construction task, incorporating the overall structural information of the graph into the training process. We integrate graph structure information with the attention mechanism, enabling precise determination of the direction of transformation. Experimental results show the superiority of the proposed method over previous approaches, underscoring its effectiveness in addressing the challenges of image provenance analysis.

Learning from Mixed Datasets: A Monotonic Image Quality Assessment Model

Sep 28, 2022

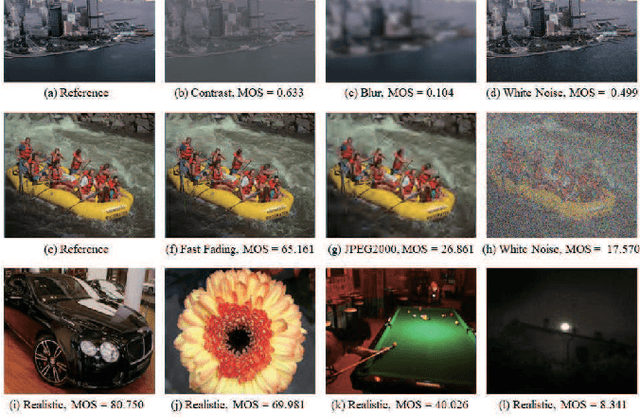

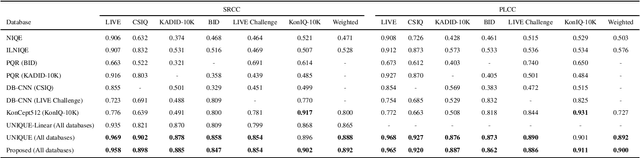

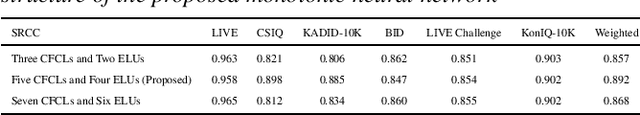

Abstract: Deep learning based image quality assessment (IQA) models usually learn to predict image quality from a single dataset, which leads the model to overfit to specific scenes. To address this, mixed-dataset training can be an effective way to enhance the generalization capability of the model. However, it is nontrivial to combine different IQA datasets, as their quality evaluation criteria, score ranges, viewing conditions, and subjects are usually not shared across datasets during image quality annotation. In this paper, instead of aligning the annotations, we propose a monotonic neural network for IQA model learning with different datasets combined. In particular, our model consists of a dataset-shared quality regressor and several dataset-specific quality transformers. The quality regressor aims to obtain the perceptual qualities of each dataset, while each quality transformer maps the perceptual qualities to the corresponding dataset annotations while preserving their monotonicity. The experimental results verify the effectiveness of the proposed learning strategy, and our code is available at https://github.com/fzp0424/MonotonicIQA.
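The split between a dataset-shared regressor and dataset-specific monotonic transformers can be illustrated with a minimal sketch. This is not the authors' implementation: the abstract does not specify the form of the monotonic network, so the sketch assumes a simple affine map whose slope is kept positive with a softplus, and the names `shared_regressor`, `MonotonicTransform`, `heads`, and the dataset keys are all hypothetical.

```python
import math


def softplus(x):
    # Smooth function that is strictly positive for any real input.
    return math.log1p(math.exp(x))


class MonotonicTransform:
    """Hypothetical dataset-specific head: y = softplus(raw_slope) * q + offset.

    The positive slope guarantees that a higher shared quality q always maps
    to a higher dataset-specific score (an assumed stand-in for the paper's
    monotonic network).
    """

    def __init__(self, raw_slope, offset):
        self.raw_slope = raw_slope
        self.offset = offset

    def __call__(self, q):
        return softplus(self.raw_slope) * q + self.offset


def shared_regressor(features):
    # Placeholder for the dataset-shared quality regressor: here it is
    # just the mean of the feature values, not a learned network.
    return sum(features) / len(features)


# One transform per dataset, each with its own score range (made-up values).
heads = {
    "dataset_a": MonotonicTransform(raw_slope=0.5, offset=1.0),
    "dataset_b": MonotonicTransform(raw_slope=-2.0, offset=50.0),
}


def predict(features, dataset):
    # Shared perceptual quality first, then the dataset-specific mapping.
    return heads[dataset](shared_regressor(features))
```

Because every head is increasing in the shared quality value, two images keep the same relative ranking in every dataset's score scale, even though the absolute scores differ.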
