Kevin Max

Synthetic Biology meets Neuromorphic Computing: Towards a bio-inspired Olfactory Perception System

Apr 14, 2025

Abstract: In this study, we explore how the combination of synthetic biology, neuroscience modeling, and neuromorphic electronic systems offers a new approach to creating an artificial system that mimics the natural sense of smell. We argue that a co-design approach offers significant advantages in replicating the complex dynamics of odor sensing and processing. We investigate a hybrid system of synthetic sensory neurons that provides three key features: a) receptor-gated ion channels, b) an interface between synthetic biology and semiconductors, and c) event-based encoding and computing based on spiking networks. This research seeks to develop a platform for ultra-sensitive, specific, and energy-efficient odor detection, with potential implications for environmental monitoring, medical diagnostics, and security.

Weight transport through spike timing for robust local gradients

Mar 04, 2025

Abstract: In both machine learning and in computational neuroscience, plasticity in functional neural networks is frequently expressed as gradient descent on a cost. Often, this imposes symmetry constraints that are difficult to reconcile with local computation, as is required for biological networks or neuromorphic hardware. For example, wake-sleep learning in networks characterized by Boltzmann distributions builds on the assumption of symmetric connectivity. Similarly, the error backpropagation algorithm is notoriously plagued by the weight transport problem between the representation and the error stream. Existing solutions such as feedback alignment tend to circumvent the problem by deferring to the robustness of these algorithms to weight asymmetry. However, they are known to scale poorly with network size and depth. We introduce spike-based alignment learning (SAL), a complementary learning rule for spiking neural networks, which uses spike timing statistics to extract and correct the asymmetry between effective reciprocal connections. Apart from being spike-based and fully local, our proposed mechanism takes advantage of noise. Based on an interplay between Hebbian and anti-Hebbian plasticity, synapses can thereby recover the true local gradient. This also alleviates discrepancies that arise from neuron and synapse variability -- an omnipresent property of physical neuronal networks. We demonstrate the efficacy of our mechanism using different spiking network models. First, we show how SAL can significantly improve convergence to the target distribution in probabilistic spiking networks as compared to Hebbian plasticity alone. Second, in neuronal hierarchies based on cortical microcircuits, we show how our proposed mechanism effectively enables the alignment of feedback weights to the forward pathway, thus allowing the backpropagation of correct feedback errors.

Backpropagation through space, time, and the brain

Mar 25, 2024

Abstract: Effective learning in neuronal networks requires the adaptation of individual synapses given their relative contribution to solving a task. However, physical neuronal systems -- whether biological or artificial -- are constrained by spatio-temporal locality. How such networks can perform efficient credit assignment remains, to a large extent, an open question. In machine learning, the answer is almost universally given by the error backpropagation algorithm, through both space (BP) and time (BPTT). However, BP(TT) is well-known to rely on biologically implausible assumptions, in particular with respect to spatiotemporal (non-)locality, while forward-propagation models such as real-time recurrent learning (RTRL) suffer from prohibitive memory constraints. We introduce Generalized Latent Equilibrium (GLE), a computational framework for fully local spatio-temporal credit assignment in physical, dynamical networks of neurons. We start by defining an energy based on neuron-local mismatches, from which we derive both neuronal dynamics via stationarity and parameter dynamics via gradient descent. The resulting dynamics can be interpreted as a real-time, biologically plausible approximation of BPTT in deep cortical networks with continuous-time neuronal dynamics and continuously active, local synaptic plasticity. In particular, GLE exploits the ability of biological neurons to phase-shift their output rate with respect to their membrane potential, which is essential in both directions of information propagation. For the forward computation, it enables the mapping of time-continuous inputs to neuronal space, performing an effective spatiotemporal convolution. For the backward computation, it permits the temporal inversion of feedback signals, which consequently approximate the adjoint states necessary for useful parameter updates.
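The phase shift between membrane potential and output mentioned in the abstract can be illustrated with a toy example. The sketch below is not the GLE model itself: it assumes a single leaky integrator with a hypothetical time constant `tau` and a "prospective" readout of the form `u + tau * du/dt`, and it only shows that such a readout can undo the lag introduced by low-pass filtering. All names and parameter values are illustrative assumptions.

```python
import math

def low_pass(signal, tau, dt):
    """Forward-Euler leaky integration: tau * du/dt = -u + x."""
    u = [0.0]
    for x in signal[:-1]:
        u.append(u[-1] + dt / tau * (x - u[-1]))
    return u

def prospective(u, tau, dt):
    """Phase-advanced readout u + tau * du/dt (finite-difference derivative)."""
    return [u[t] + tau * (u[t + 1] - u[t]) / dt for t in range(len(u) - 1)]

dt, tau = 0.1, 10.0  # assumed step size and membrane time constant
signal = [math.sin(2 * math.pi * 0.02 * k * dt) for k in range(2000)]

u = low_pass(signal, tau, dt)            # lagging, attenuated copy of the input
recovered = prospective(u, tau, dt)      # lag removed by the prospective readout

lag_error = max(abs(a - b) for a, b in zip(u, signal))
max_error = max(abs(a - b) for a, b in zip(recovered, signal))
print(f"error of membrane potential: {lag_error:.3f}")
print(f"error of prospective readout: {max_error:.2e}")
```

In this discretization the prospective readout reproduces the input up to floating-point rounding, whereas the filtered potential alone deviates substantially from it.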

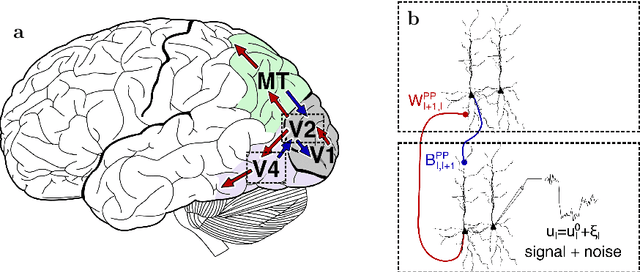

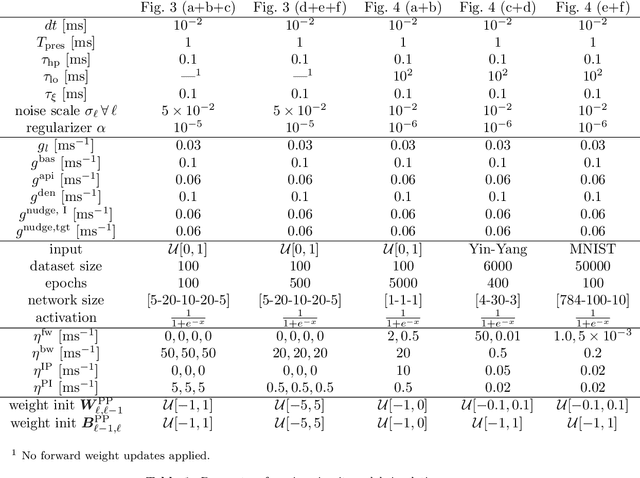

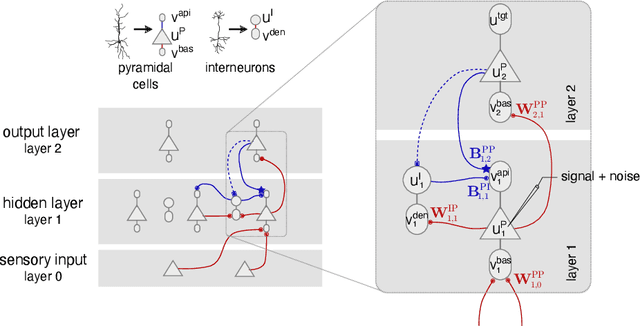

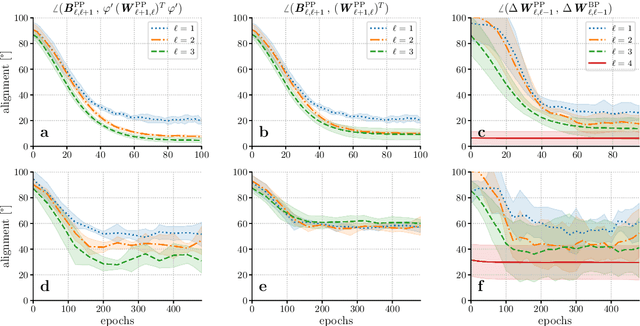

Learning efficient backprojections across cortical hierarchies in real time

Dec 20, 2022

Abstract: Models of sensory processing and learning in the cortex need to efficiently assign credit to synapses in all areas. In deep learning, a known solution is error backpropagation, which, however, requires biologically implausible weight transport from feed-forward to feedback paths. We introduce Phaseless Alignment Learning (PAL), a bio-plausible method to learn efficient feedback weights in layered cortical hierarchies. This is achieved by exploiting the noise naturally found in biophysical systems as an additional carrier of information. In our dynamical system, all weights are learned simultaneously with always-on plasticity and using only information locally available to the synapses. Our method is completely phase-free (no forward and backward passes or phased learning) and allows for efficient error propagation across multi-layer cortical hierarchies, while maintaining biologically plausible signal transport and learning. Our method is applicable to a wide class of models and improves on previously known biologically plausible ways of credit assignment: compared to random synaptic feedback, it can solve complex tasks with fewer neurons and learn more useful latent representations. We demonstrate this on various classification tasks using a cortical microcircuit model with prospective coding.
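The idea that noise can carry information about forward weights can be sketched in a few lines. The example below is not PAL as described in the paper: it assumes a single linear layer with hypothetical forward weights `W`, injects Gaussian noise into the lower layer, and applies a generic Hebbian rule with decay to the feedback weights `B`. Under these assumptions the expected update drives `B` towards the transpose of `W` using only pre- and postsynaptic activity; the layer sizes, learning rate `eta`, and step count are arbitrary choices.

```python
import math
import random

rng = random.Random(0)
n_in, n_out = 4, 3  # assumed layer sizes

# Hypothetical forward weights W (n_out x n_in) and random feedback weights B (n_in x n_out)
W = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
B = [[rng.uniform(-1, 1) for _ in range(n_out)] for _ in range(n_in)]

def cosine_to_transpose(B, W):
    """Cosine similarity between B and the transpose of W."""
    dot = sum(B[i][j] * W[j][i] for i in range(n_in) for j in range(n_out))
    norm_b = math.sqrt(sum(b * b for row in B for b in row))
    norm_w = math.sqrt(sum(w * w for row in W for w in row))
    return dot / (norm_b * norm_w)

initial_alignment = cosine_to_transpose(B, W)

eta = 0.005  # assumed learning rate
for _ in range(20000):
    xi = [rng.gauss(0, 1) for _ in range(n_in)]  # noise in the lower layer
    y = [sum(W[j][k] * xi[k] for k in range(n_in)) for j in range(n_out)]  # its forward echo
    for i in range(n_in):
        for j in range(n_out):
            # Local Hebbian term xi_i * y_j plus weight decay; E[xi xi^T] = I gives B -> W^T
            B[i][j] += eta * (xi[i] * y[j] - B[i][j])

final_alignment = cosine_to_transpose(B, W)
print(f"alignment before: {initial_alignment:.3f}, after: {final_alignment:.3f}")
```

A cosine similarity close to 1 after training indicates that the feedback weights have become nearly proportional to the transposed forward weights, which is the condition under which feedback signals match those of backpropagation in this linear setting.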
