Kevin Eckenhoff

MIMC-VINS: A Versatile and Resilient Multi-IMU Multi-Camera Visual-Inertial Navigation System

Jun 28, 2020

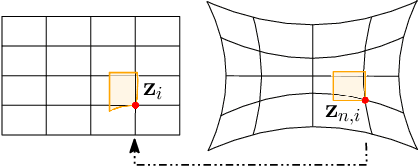

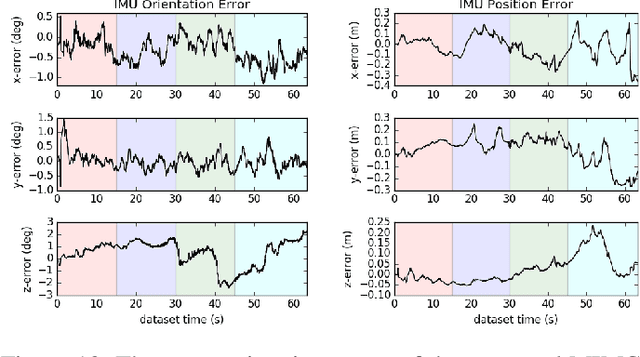

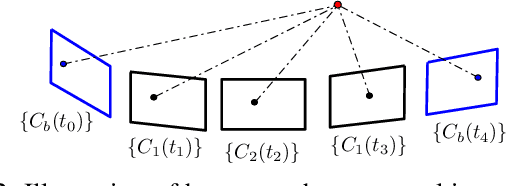

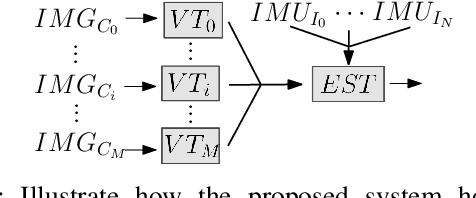

Abstract: As cameras and inertial sensors become ubiquitous in mobile devices and robots, there is great potential in designing visual-inertial navigation systems (VINS) for efficient, versatile 3D motion tracking that utilize any (multiple) available cameras and inertial measurement units (IMUs) and are resilient to sensor failures or measurement depletion. To this end, rather than following the standard VINS paradigm of a minimal sensing suite with a single camera and IMU, in this paper we design a real-time, consistent multi-IMU multi-camera (MIMC)-VINS estimator that is able to seamlessly fuse multi-modal information from an arbitrary number of uncalibrated cameras and IMUs. Within an efficient multi-state constraint Kalman filter (MSCKF) framework, the proposed MIMC-VINS algorithm optimally fuses asynchronous measurements from all sensors, while providing smooth, uninterrupted, and accurate 3D motion tracking even if some sensors fail. The key idea of the proposed MIMC-VINS is to perform high-order on-manifold state interpolation to efficiently process all available visual measurements without increasing the computational burden due to estimating additional sensors' poses at asynchronous imaging times. In order to fuse the information from multiple IMUs, we propagate a joint system consisting of all IMU states while enforcing rigid-body constraints between the IMUs during the filter update stage. Lastly, we estimate online both spatiotemporal extrinsic and visual intrinsic parameters to make our system robust to errors in prior sensor calibration. The proposed system is extensively validated in both Monte-Carlo simulations and real-world experiments.
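To give a feel for the on-manifold state interpolation mentioned in the abstract, the following is a minimal sketch of the simplest (first-order, two-pose) case: rotation is interpolated on SO(3) through the exponential and logarithm maps, and position is interpolated linearly. The paper uses a high-order scheme that is not reproduced here; the function names (`so3_exp`, `so3_log`, `interpolate_pose`) and the rotation convention are assumptions made for illustration only.

```python
import numpy as np

def skew(w):
    """Skew-symmetric matrix such that skew(w) @ v == np.cross(w, v)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])

def so3_exp(w):
    """Rodrigues formula: rotation vector -> rotation matrix."""
    w = np.asarray(w, dtype=float)
    theta = np.linalg.norm(w)
    K = skew(w)
    if theta < 1e-8:
        # Second-order Taylor expansion for very small angles.
        return np.eye(3) + K + 0.5 * K @ K
    return (np.eye(3)
            + np.sin(theta) / theta * K
            + (1.0 - np.cos(theta)) / theta**2 * K @ K)

def so3_log(R):
    """Rotation matrix -> rotation vector (valid for angles below pi)."""
    cos_theta = np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0)
    theta = np.arccos(cos_theta)
    v = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    if theta < 1e-8:
        return 0.5 * v
    return theta / (2.0 * np.sin(theta)) * v

def interpolate_pose(R0, p0, R1, p1, lam):
    """Hypothetical first-order interpolation between two poses.

    lam = (t - t0) / (t1 - t0) is the fraction of the interval at which
    the asynchronous measurement was taken.
    """
    R = R0 @ so3_exp(lam * so3_log(R0.T @ R1))
    p = (1.0 - lam) * p0 + lam * p1
    return R, p
```

In a filter of this kind, the benefit of interpolation is that a camera pose at an arbitrary image time is expressed as a function of poses already in the state, so no additional pose needs to be estimated for that image.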

Closed-form Preintegration Methods for Graph-based Visual-Inertial Navigation

Mar 20, 2019

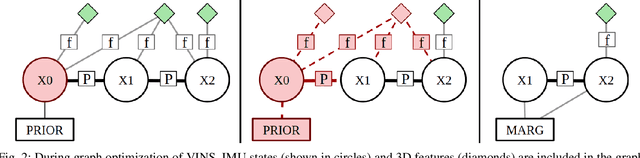

Abstract: In this paper we propose a new analytical preintegration theory for graph-based sensor fusion with an inertial measurement unit (IMU) and a camera (or other aiding sensors). Rather than using discrete sampling of the measurement dynamics as in current methods, we derive the closed-form solutions to the preintegration equations, yielding improved accuracy in state estimation. We advocate two new inertial models for preintegration: (i) the model that assumes piecewise constant measurements, and (ii) the model that assumes piecewise constant local true acceleration. We show through extensive Monte-Carlo simulations the effect that the choice of preintegration model has on estimation performance. To validate the proposed preintegration theory, we develop both direct and indirect visual-inertial navigation systems (VINS) that leverage our preintegration. In the first, within a tightly-coupled, sliding-window optimization framework, we jointly estimate the features in the window and the IMU states while performing marginalization to bound the computational cost. In the second, we loosely couple the IMU preintegration with a direct image alignment that estimates relative camera motion by minimizing the photometric errors (i.e., image intensity difference), allowing for efficient and informative loop closures. Both systems are extensively validated in real-world experiments and are shown to offer competitive performance to state-of-the-art methods.
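The difference between discrete sampling and a closed-form solution can be illustrated with a small sketch. Assuming the gyroscope and accelerometer readings are constant over one interval (a simplified version of model (i), with biases, noise, and gravity omitted), the velocity preintegration term is the integral of `Exp(omega * s) @ acc` over the interval, which has an analytical expression. The rotation convention and all function names below are assumptions for illustration and are not taken from the paper.

```python
import numpy as np

def skew(w):
    """Skew-symmetric matrix such that skew(w) @ v == np.cross(w, v)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])

def so3_exp(w):
    """Rodrigues formula: rotation vector -> rotation matrix."""
    theta = np.linalg.norm(w)
    K = skew(w)
    if theta < 1e-8:
        return np.eye(3) + K + 0.5 * K @ K
    return (np.eye(3)
            + np.sin(theta) / theta * K
            + (1.0 - np.cos(theta)) / theta**2 * K @ K)

def velocity_preint_closed_form(omega, acc, T):
    """Analytical integral of Exp(omega * s) @ acc for s in [0, T]."""
    theta = np.linalg.norm(omega)
    K = skew(omega)
    if theta < 1e-8:
        # Small-rate limit of the expression below.
        M = T * np.eye(3) + 0.5 * T**2 * K
    else:
        M = (T * np.eye(3)
             + (1.0 - np.cos(theta * T)) / theta**2 * K
             + (T - np.sin(theta * T) / theta) / theta**2 * K @ K)
    return M @ acc

def velocity_preint_discrete(omega, acc, T, n_steps):
    """Discrete (left-endpoint) approximation of the same integral."""
    dt = T / n_steps
    R = np.eye(3)
    beta = np.zeros(3)
    for _ in range(n_steps):
        beta += R @ acc * dt
        R = R @ so3_exp(omega * dt)
    return beta
```

With a single discrete step the rotation during the interval is ignored entirely, whereas the closed-form expression accounts for it exactly under the constant-measurement assumption; refining the discrete step count drives the two results together.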

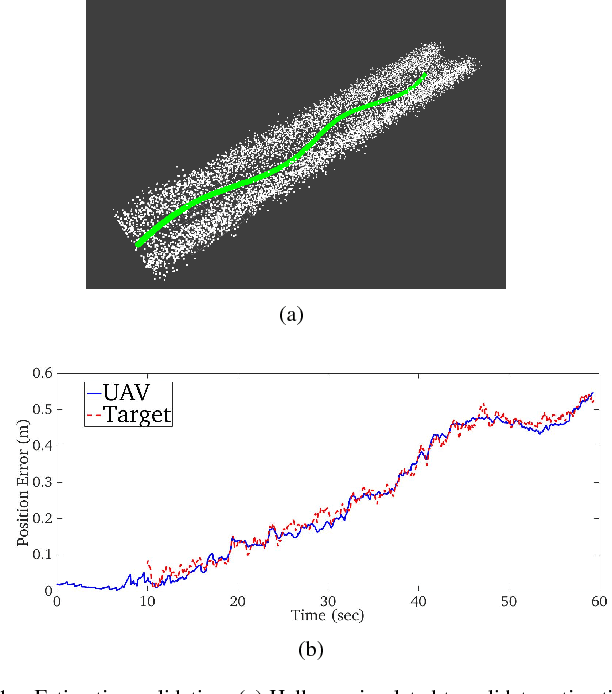

Visual-Inertial Target Tracking and Motion Planning for UAV-based Radiation Detection

May 23, 2018

Abstract: This paper addresses the problem of detecting radioactive material in transit using a UAV of minimal sensing capability, where the objective is to classify the target's radioactivity as the vehicle plans its paths through the workspace while tracking the target for a short time interval. To this end, we propose a motion planning framework that integrates tightly-coupled visual-inertial localization and target tracking. In this framework, the 3D workspace is known, and this information, together with the UAV dynamics, is used to construct a navigation function that generates dynamically feasible, safe paths which avoid obstacles and provably converge to the moving target. The efficacy of the proposed approach is validated through realistic simulations in Gazebo.
