Keunsoo Ko

IceNet for Interactive Contrast Enhancement

Sep 13, 2021

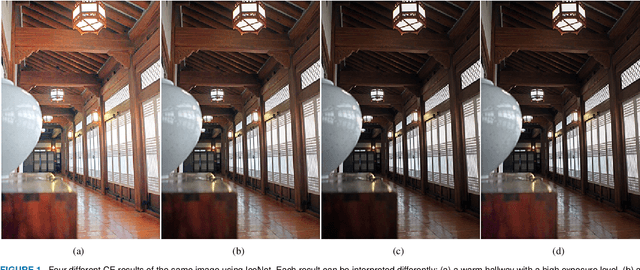

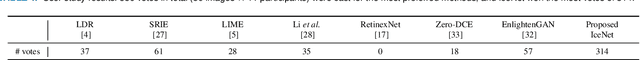

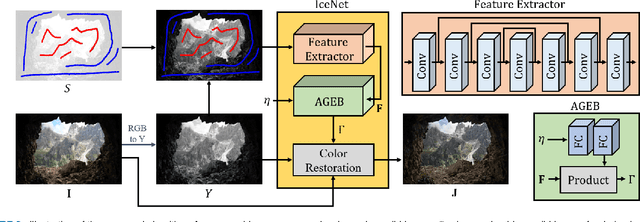

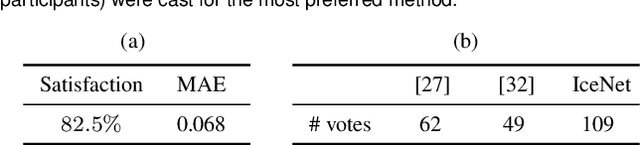

Abstract: A CNN-based interactive contrast enhancement algorithm, called IceNet, is proposed in this work, which enables a user to adjust image contrast easily according to his or her preference. Specifically, a user provides a parameter for controlling the global brightness and two types of scribbles to darken or brighten local regions in an image. Then, given these annotations, IceNet estimates a gamma map for the pixel-wise gamma correction. Finally, through color restoration, an enhanced image is obtained. The user may provide annotations iteratively to obtain a satisfactory image. IceNet is also capable of producing a personalized enhanced image automatically, which can serve as a basis for further adjustment if so desired. Moreover, to train IceNet effectively and reliably, we propose three differentiable losses. Extensive experiments show that IceNet can provide users with satisfactorily enhanced images.
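The pixel-wise gamma correction mentioned in the abstract can be illustrated with a minimal sketch. It assumes a luminance image normalized to [0, 1] and a gamma map of the same size that is already given; in IceNet the map is estimated by the network, which is not modeled here. The function name `apply_gamma_map` is hypothetical, and the color restoration step is omitted.

```python
def apply_gamma_map(luma, gamma_map):
    """Pixel-wise gamma correction: out[i][j] = luma[i][j] ** gamma_map[i][j].

    Assumption: `luma` holds values in [0, 1] and `gamma_map` holds positive
    exponents. For values in (0, 1), gamma < 1 brightens and gamma > 1 darkens.
    """
    if len(luma) != len(gamma_map):
        raise ValueError("luma and gamma_map must have the same number of rows")
    out = []
    for row_y, row_g in zip(luma, gamma_map):
        if len(row_y) != len(row_g):
            raise ValueError("luma and gamma_map rows must have the same length")
        # Clamp each pixel to [0, 1] before raising it to its own exponent.
        out.append([min(max(y, 0.0), 1.0) ** g for y, g in zip(row_y, row_g)])
    return out


if __name__ == "__main__":
    image = [[0.25, 0.25]]
    gammas = [[0.5, 2.0]]  # brighten the first pixel, darken the second
    print(apply_gamma_map(image, gammas))
```

Because every pixel has its own exponent, one map can brighten some regions and darken others, which is what a per-pixel map allows that a single global gamma does not.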

Edge Network-Assisted Real-Time Object Detection Framework for Autonomous Driving

Aug 17, 2020

Abstract: Autonomous vehicles (AVs) can achieve the desired results within a short duration by offloading tasks even requiring high computational power (e.g., object detection (OD)) to edge clouds. However, although edge clouds are exploited, real-time OD cannot always be guaranteed due to dynamic channel quality. To mitigate this problem, we propose an edge network-assisted real-time OD framework (EODF). In EODF, AVs extract the regions of interest (RoIs) of the captured image when the channel quality is not sufficiently good for supporting real-time OD. Then, AVs compress the image data on the basis of the RoIs and transmit the compressed image to the edge cloud. In so doing, real-time OD can be achieved owing to the reduced transmission latency. To verify the feasibility of our framework, we evaluate the probability that the results of OD are not received within the inter-frame duration (i.e., outage probability) and their accuracy. From the evaluation, we demonstrate that the proposed EODF provides the results to AVs in real-time and achieves satisfactory accuracy.
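The outage probability defined in the abstract (the probability that OD results are not received within the inter-frame duration) can be estimated empirically from measured latencies. The sketch below is only an illustration of that definition; the function name `outage_probability` and the sample latencies are hypothetical and do not come from the paper's evaluation.

```python
def outage_probability(latencies_s, inter_frame_s):
    """Fraction of frames whose end-to-end latency exceeds the inter-frame duration.

    Assumption: each entry of `latencies_s` is the total time (in seconds) from
    capturing a frame to receiving its detection result at the vehicle.
    """
    if not latencies_s:
        raise ValueError("at least one latency sample is required")
    missed = sum(1 for t in latencies_s if t > inter_frame_s)
    return missed / len(latencies_s)


if __name__ == "__main__":
    # Made-up samples for a 30 frames-per-second camera (about 33.3 ms per frame).
    samples = [0.02, 0.05, 0.03, 0.04]
    print(outage_probability(samples, 1 / 30))
```

A lower value means more frames receive their detection results before the next frame is captured.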

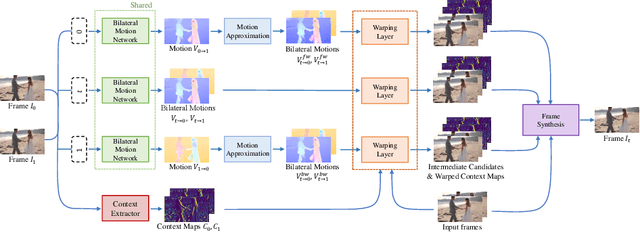

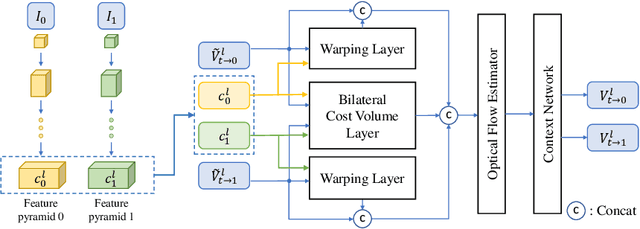

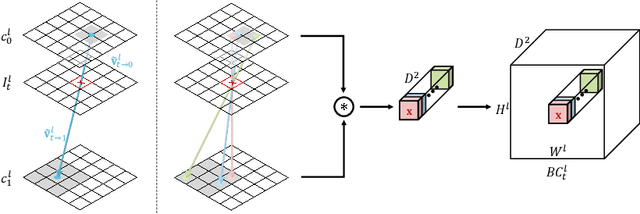

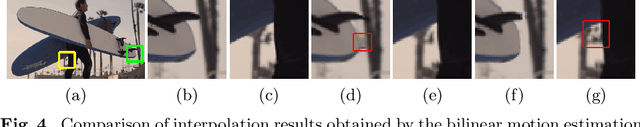

BMBC: Bilateral Motion Estimation with Bilateral Cost Volume for Video Interpolation

Jul 17, 2020

Abstract: Video interpolation increases the temporal resolution of a video sequence by synthesizing intermediate frames between two consecutive frames. We propose a novel deep-learning-based video interpolation algorithm based on bilateral motion estimation. First, we develop the bilateral motion network with the bilateral cost volume to estimate bilateral motions accurately. Then, we approximate bi-directional motions to predict a different kind of bilateral motion. We then warp the two input frames using the estimated bilateral motions. Next, we develop the dynamic filter generation network to yield dynamic blending filters. Finally, we combine the warped frames using the dynamic blending filters to generate intermediate frames. Experimental results show that the proposed algorithm outperforms the state-of-the-art video interpolation algorithms on several benchmark datasets.
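The final step, combining two warped frames into an intermediate frame, can be sketched in a much simplified form. The example below assumes the frames are already warped and uses a single per-pixel blending weight as a stand-in for the learned dynamic blending filters, which the abstract does not specify in detail. The function name `blend_warped_frames` is hypothetical.

```python
def blend_warped_frames(warped_a, warped_b, weights):
    """Per-pixel convex blend: out = w * a + (1 - w) * b.

    Assumption: all three inputs are 2-D lists of the same shape and each
    weight lies in [0, 1]. This is a simplification of dynamic blending
    filters, which generally combine a local neighborhood of pixels.
    """
    out = []
    for row_a, row_b, row_w in zip(warped_a, warped_b, weights):
        if not (len(row_a) == len(row_b) == len(row_w)):
            raise ValueError("all inputs must have the same shape")
        out.append([w * a + (1.0 - w) * b for a, b, w in zip(row_a, row_b, row_w)])
    return out


if __name__ == "__main__":
    frame_a = [[0.0, 1.0]]
    frame_b = [[1.0, 1.0]]
    weight = [[0.25, 0.5]]  # weight given to frame_a at each pixel
    print(blend_warped_frames(frame_a, frame_b, weight))
```

Letting the weight vary per pixel allows the blend to favor whichever warped frame is more reliable at that location, for instance near occlusions.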
