Kent Evans

A novel residual whitening based training to avoid overfitting

Aug 08, 2020

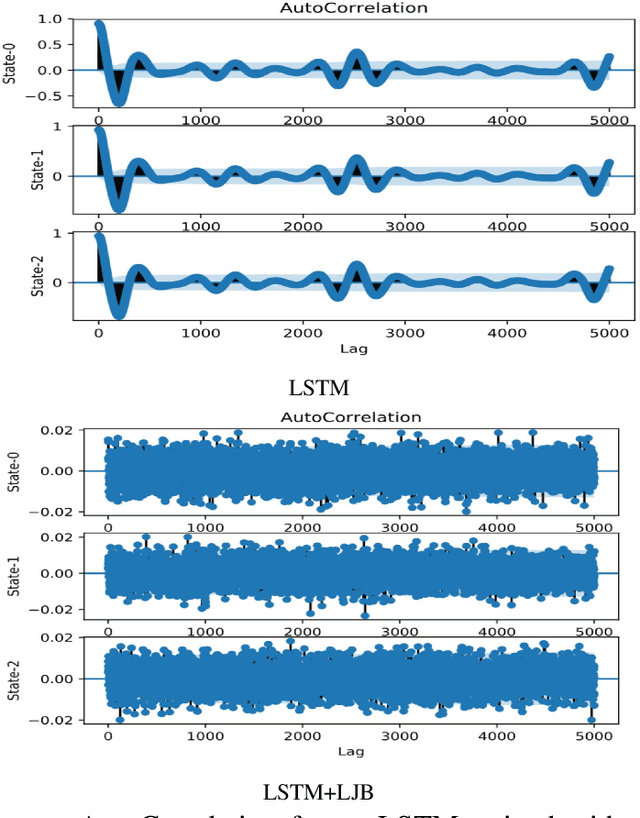

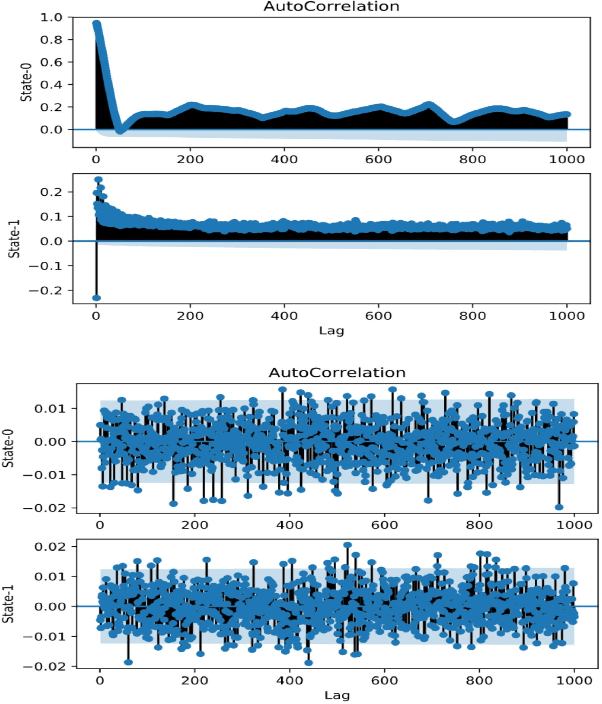

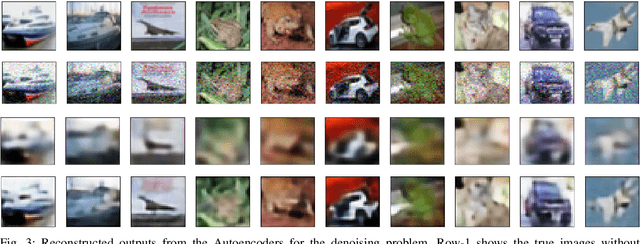

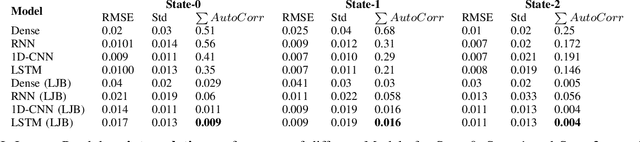

Abstract: In this paper we demonstrate that training models to minimize the autocorrelation of the residuals as an additional penalty prevents overfitting of machine learning models. Using extrapolative testing sets for different problems and invoking decorrelation objective functions, we create models that can predict more complex systems. The models are interpretable, extrapolative, data-efficient, and capture predictable but complex non-stochastic behavior such as unmodeled degrees of freedom and systemic measurement noise. We apply this improved modeling paradigm to several simulated systems and an actual physical system in the context of system identification. Several ways of composing domain models with neural models are examined for time series, boosting, bagging, and auto-encoding on systems of varying complexity and non-linearity. Although this work is preliminary, we show that the ability to combine models is a very promising direction for neural modeling.
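The abstract does not give the exact objective, so the following is only a minimal sketch of what a residual-whitening penalty could look like, assuming a mean-squared-error term plus the sum of squared sample autocorrelations of the residuals at the first few lags. The function names, the `max_lag` and `weight` parameters, and the choice of squared autocorrelations are illustrative assumptions, not the paper's formulation.

```python
import numpy as np


def residual_autocorrelation(residuals, max_lag):
    """Sample autocorrelation of mean-centered residuals at lags 1..max_lag."""
    r = np.asarray(residuals, dtype=float)
    r = r - r.mean()
    denom = np.dot(r, r)
    if denom == 0.0:
        # Constant residuals (including a perfect fit) carry no structure.
        return np.zeros(max_lag)
    return np.array(
        [np.dot(r[:-k], r[k:]) / denom for k in range(1, max_lag + 1)]
    )


def whitening_penalized_loss(y_true, y_pred, max_lag=5, weight=1.0):
    """MSE plus a penalty on residual autocorrelation (hypothetical form).

    The penalty is small when the residuals resemble white noise and large
    when they retain temporal structure the model failed to capture.
    """
    residuals = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    mse = np.mean(residuals ** 2)
    acf = residual_autocorrelation(residuals, max_lag)
    return mse + weight * np.sum(acf ** 2)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    t = np.arange(200)
    y = np.sin(0.1 * t)
    # Two hypothetical predictions: one leaves a slow sinusoid in the
    # residuals, the other leaves noise that is roughly white.
    pred_structured = y - 0.1 * np.sin(0.05 * t)
    pred_white = y - 0.1 * rng.standard_normal(t.size)
    print("structured residuals:", whitening_penalized_loss(y, pred_structured))
    print("white residuals:     ", whitening_penalized_loss(y, pred_white))
```

In this sketch the two predictions have errors of similar magnitude, but the one whose residuals still contain a slow oscillation is penalized much more heavily, which is the behavior a decorrelation objective is meant to encourage.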

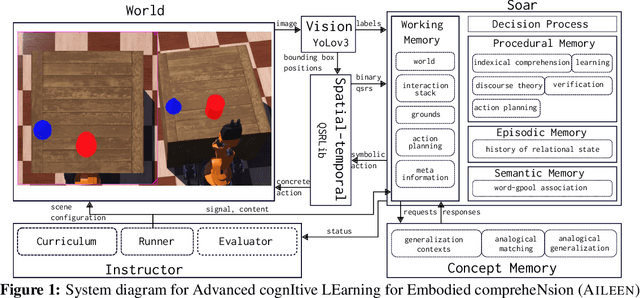

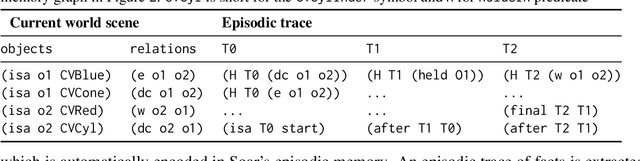

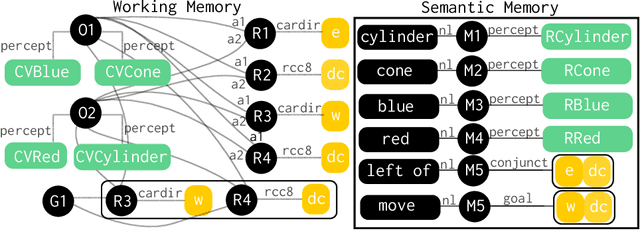

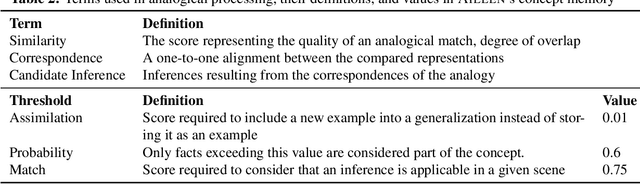

Characterizing an Analogical Concept Memory for Newellian Cognitive Architectures

Jun 19, 2020

Abstract: We propose a new long-term declarative memory for Soar that leverages computational models of analogical reasoning and generalization. We situate our research in interactive task learning (ITL) and embodied language processing (ELP). We demonstrate that the learning methods implemented in the proposed memory can quickly learn diverse types of novel concepts that are useful in task execution. Our approach has been instantiated in an implemented hybrid AI system, AILEEN, and evaluated on a simulated robotic domain.
