Warren B. Jackson

A novel residual whitening based training to avoid overfitting

Aug 08, 2020

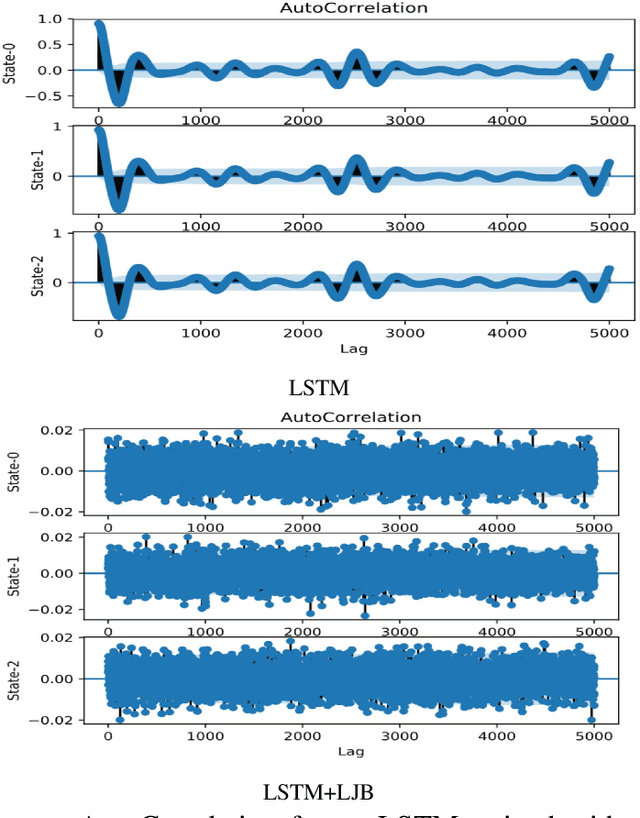

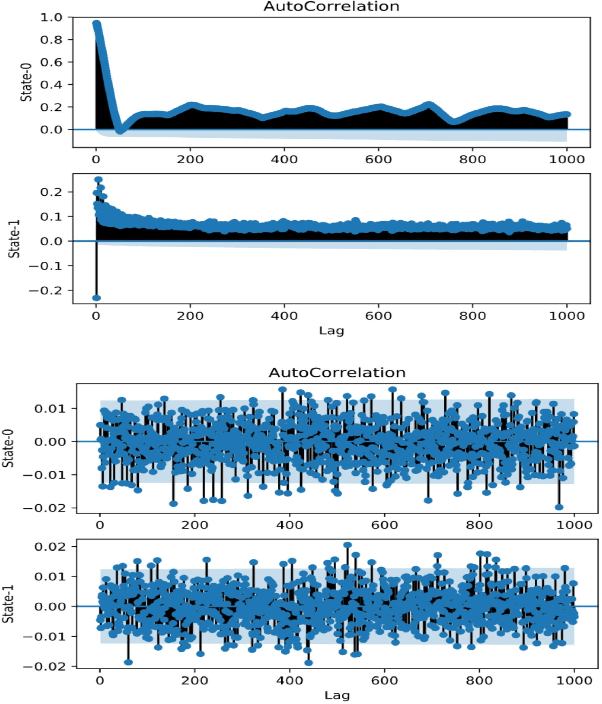

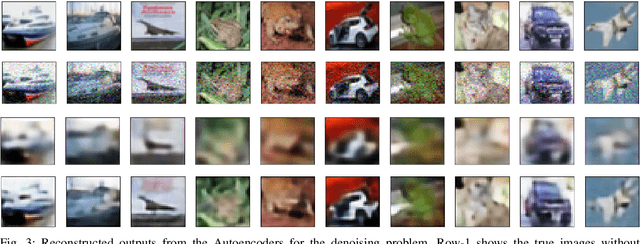

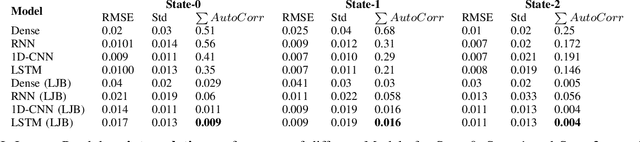

Abstract: In this paper, we demonstrate that adding the autocorrelation of the residuals as a penalty term during training prevents machine learning models from overfitting. Using extrapolative testing sets for different problems and invoking decorrelation objective functions, we create models that can predict more complex systems. The models are interpretable, extrapolative, and data-efficient, and they capture predictable but complex non-stochastic behavior such as unmodeled degrees of freedom and systemic measurement noise. We apply this improved modeling paradigm to several simulated systems and one actual physical system in the context of system identification. We examine several ways of composing domain models with neural models for time series, boosting, bagging, and auto-encoding on systems of varying complexity and non-linearity. Although this work is preliminary, we show that the ability to combine models is a very promising direction for neural modeling.
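The abstract does not give the exact form of the decorrelation objective, so the following is only a minimal sketch of one way such a penalty might look. It assumes the penalty is the sum of squared normalized autocorrelations of the residuals at lags 1 through `max_lag`, added to a mean squared error with weight `lam`. The names `autocorr_penalty`, `whitened_loss`, `lam`, and `max_lag` are hypothetical and do not come from the paper.

```python
import numpy as np


def autocorr_penalty(residuals, max_lag=10):
    """Sum of squared normalized autocorrelations at lags 1..max_lag.

    Assumed form of a whitening penalty (not taken from the paper).
    It is near zero for white residuals and large for structured ones.
    """
    r = np.asarray(residuals, dtype=float)
    r = r - r.mean()                      # remove the mean before correlating
    denom = np.dot(r, r)                  # lag-0 term, used for normalization
    if denom == 0.0:
        return 0.0                        # constant residuals: nothing to penalize
    penalty = 0.0
    for k in range(1, max_lag + 1):
        rho_k = np.dot(r[:-k], r[k:]) / denom   # normalized autocorrelation at lag k
        penalty += rho_k ** 2
    return penalty


def whitened_loss(y_true, y_pred, lam=1.0, max_lag=10):
    """Mean squared error plus lam times the residual autocorrelation penalty."""
    res = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    mse = np.mean(res ** 2)
    return mse + lam * autocorr_penalty(res, max_lag)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    t = np.arange(2000)
    white = rng.normal(size=t.size)             # residuals with no structure
    structured = np.sin(2 * np.pi * t / 50)     # residuals hiding an unmodeled mode
    print("white noise penalty:", autocorr_penalty(white))
    print("structured penalty: ", autocorr_penalty(structured))
```

Under these assumptions, residuals that still contain a predictable component, such as an unmodeled oscillation, incur a much larger penalty than white noise. That gives the optimizer a reason to explain the remaining structure rather than fit the noise. A differentiable version for gradient-based training would need the same computation written in an autodiff framework.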
