Kenny Choo

Ab-initio quantum chemistry with neural-network wavefunctions

Aug 26, 2022

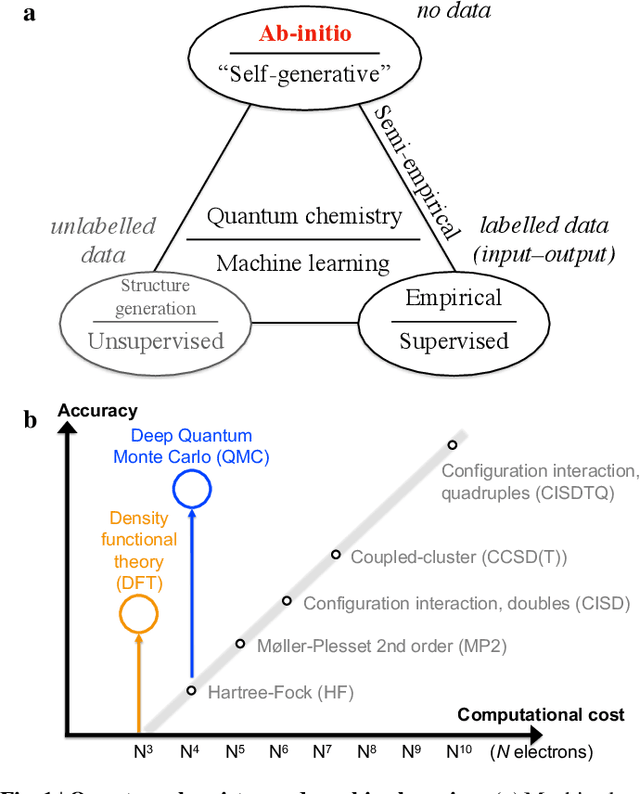

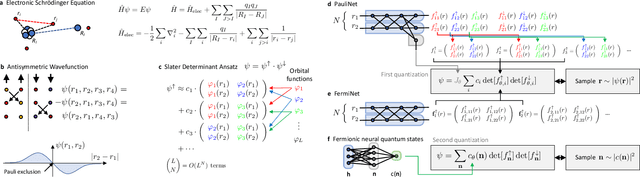

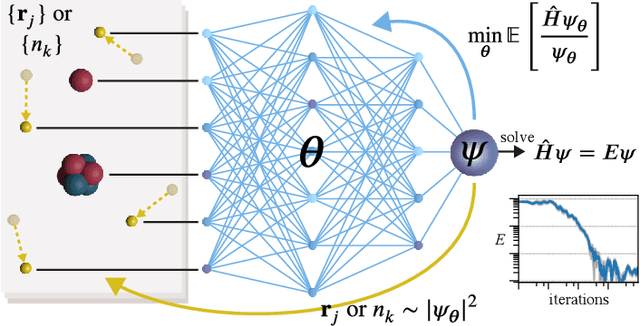

Abstract: Machine learning and specifically deep-learning methods have outperformed human capabilities in many pattern recognition and data processing problems, in game playing, and now also play an increasingly important role in scientific discovery. A key application of machine learning in the molecular sciences is to learn potential energy surfaces or force fields from ab-initio solutions of the electronic Schrödinger equation using datasets obtained with density functional theory, coupled cluster, or other quantum chemistry methods. Here we review a recent and complementary approach: using machine learning to aid the direct solution of quantum chemistry problems from first principles. Specifically, we focus on quantum Monte Carlo (QMC) methods that use neural network ansatz functions in order to solve the electronic Schrödinger equation, both in first and second quantization, computing ground and excited states, and generalizing over multiple nuclear configurations. Compared to existing quantum chemistry methods, these new deep QMC methods have the potential to generate highly accurate solutions of the Schrödinger equation at relatively modest computational cost.

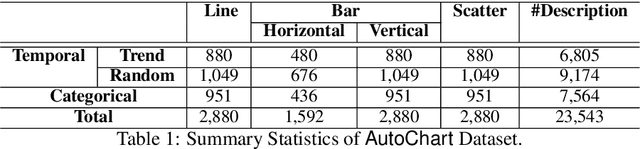

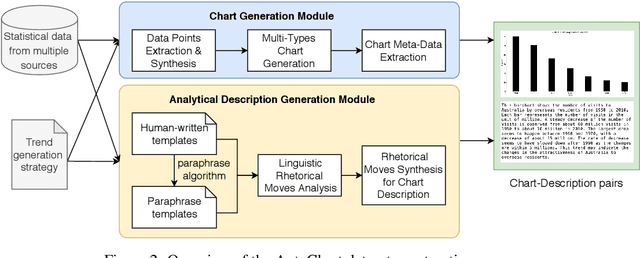

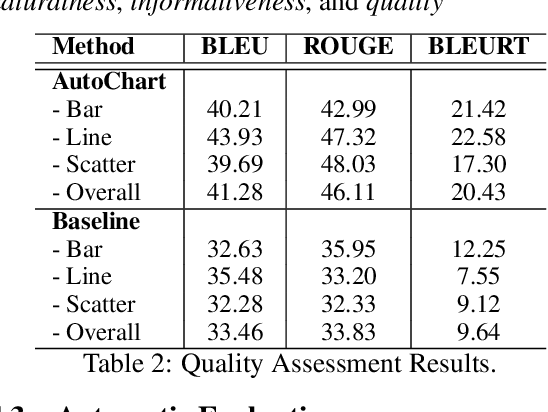

AutoChart: A Dataset for Chart-to-Text Generation Task

Aug 16, 2021

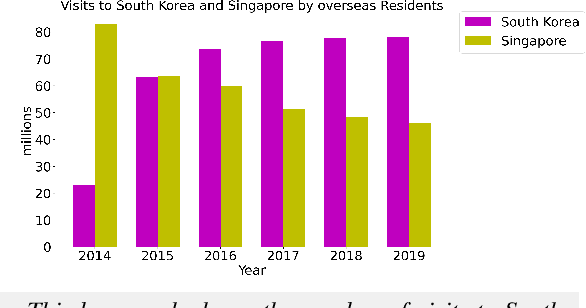

Abstract: The analytical description of charts is an exciting and important research area with many applications in academia and industry. Yet, this challenging task has received limited attention from the computational linguistics research community. This paper proposes AutoChart, a large dataset for the analytical description of charts, which aims to encourage more research into this important area. Specifically, we offer a novel framework that generates the charts and their analytical description automatically. We conducted extensive human and machine evaluations on the generated charts and descriptions and demonstrate that the generated texts are informative, coherent, and relevant to the corresponding charts.

Introduction to Machine Learning for the Sciences

Feb 08, 2021

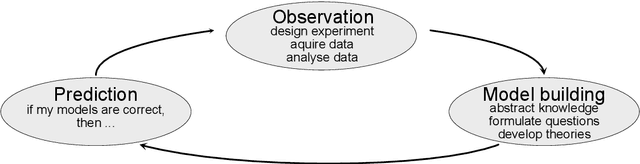

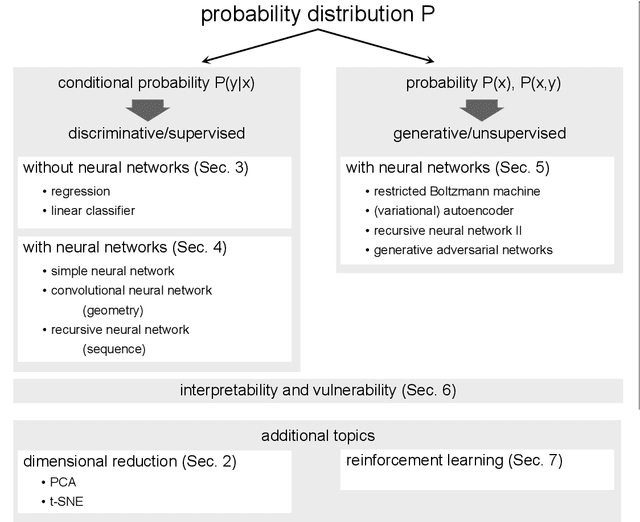

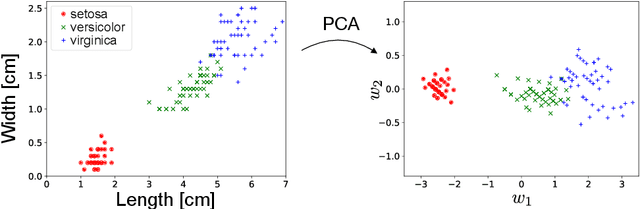

Abstract: This is an introductory machine learning course specifically developed with STEM students in mind. We discuss supervised, unsupervised, and reinforcement learning. The notes start with an exposition of machine learning methods without neural networks, such as principal component analysis, t-SNE, and linear regression. We continue with an introduction to both basic and advanced neural network structures such as conventional neural networks, (variational) autoencoders, generative adversarial networks, restricted Boltzmann machines, and recurrent neural networks. Questions of interpretability are discussed using the examples of dreaming and adversarial attacks.
