Kelsey Maass

A feasibility study of a hyperparameter tuning approach to automated inverse planning in radiotherapy

May 14, 2021

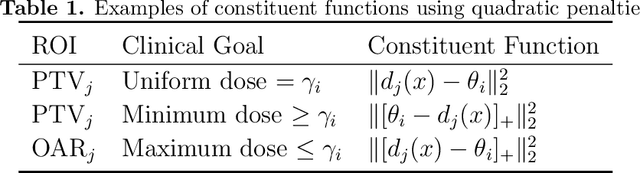

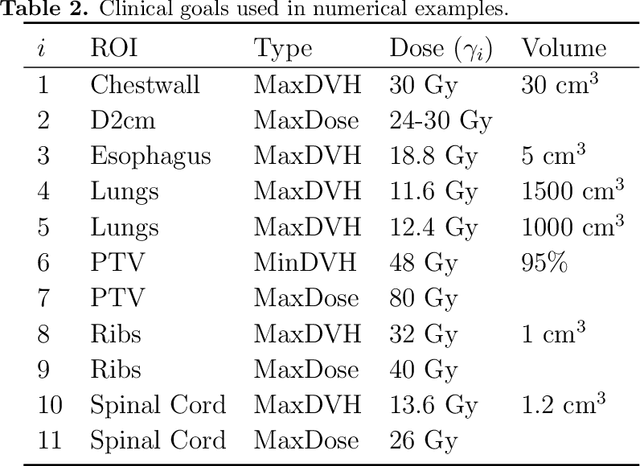

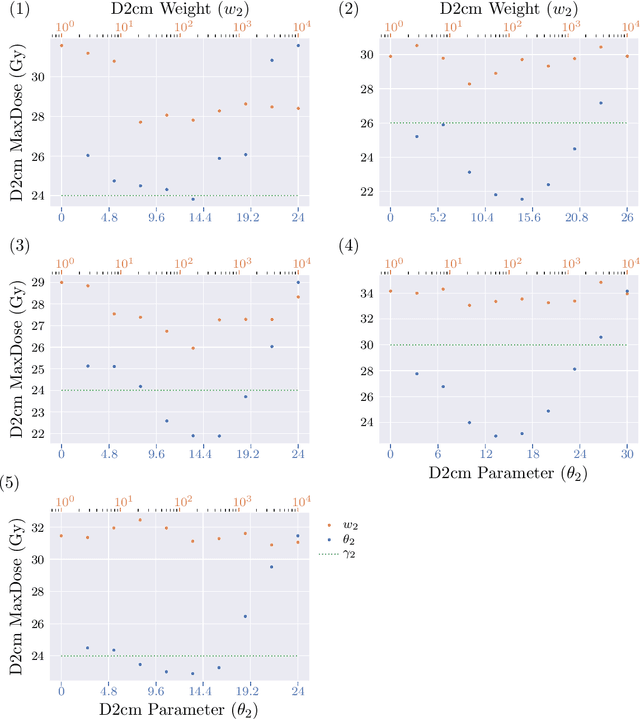

Abstract: Radiotherapy inverse planning requires treatment planners to modify multiple parameters in the objective function to produce clinically acceptable plans. Because this process involves manual steps, plan quality can vary widely depending on the planning time available and the planner's skill. The purpose of this study is to automate the inverse planning process to reduce active planning time while maintaining plan quality. We propose a hyperparameter tuning approach for automated inverse planning, where a treatment plan utility is maximized with respect to the limit dose parameters and weights of each organ-at-risk (OAR) objective. Using 6 patient cases, we investigated the impact of the choice of dose parameters, random and Bayesian search methods, and utility function form on planning time and plan quality. For given parameters, the plan was optimized in RayStation, using the scripting interface to obtain deliverable dose distributions. We normalized all plans to have the same target coverage and compared the OAR dose metrics in the automatically generated plans with those in the manually generated clinical plans. Using 100 samples was found to produce satisfactory plan quality, and the average planning time was 2.3 hours. The OAR doses in the automatically generated plans were lower than those in the clinical plans by up to 76.8%. When the OAR doses were larger than those in the clinical plans, they were still between 0.57% above and 98.9% below the limit doses, indicating they are clinically acceptable. For a challenging case, a dimensionality reduction strategy produced a 92.9% higher utility using only 38.5% of the time needed to optimize over the original problem. This study demonstrates that our hyperparameter tuning framework for automated inverse planning can significantly reduce the treatment planner's planning time with plan quality that is similar to or better than manually generated plans.
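
The random-search variant of the tuning loop described in the abstract can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: `toy_plan_utility` is a hypothetical stand-in for optimizing a plan in RayStation and scoring it, and the parameter names, bounds, and peak location are invented, not taken from the study.

```python
import random


def toy_plan_utility(params):
    """Hypothetical stand-in for a treatment plan utility.

    In the study, scoring a parameter set requires optimizing a plan in
    RayStation. Here a smooth toy function is used instead; it peaks at an
    arbitrary, made-up parameter setting.
    """
    peak = {"limit_dose": 20.0, "weight": 1.0}  # invented values
    return -sum(((params[k] - peak[k]) / peak[k]) ** 2 for k in peak)


def random_search(utility, bounds, n_samples=100, seed=0):
    """Sample parameters uniformly within bounds and keep the best utility."""
    rng = random.Random(seed)
    best_params, best_value = None, float("-inf")
    for _ in range(n_samples):
        candidate = {
            name: rng.uniform(low, high) for name, (low, high) in bounds.items()
        }
        value = utility(candidate)
        if value > best_value:
            best_params, best_value = candidate, value
    return best_params, best_value


# Assumed search ranges for one OAR objective (illustrative only).
bounds = {"limit_dose": (5.0, 40.0), "weight": (0.1, 10.0)}
best_params, best_value = random_search(toy_plan_utility, bounds)
print(best_params, best_value)
```

A Bayesian search would replace the uniform sampling with a surrogate model that proposes the next candidate; the surrounding loop and the utility interface would stay the same.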

Street-level Travel-time Estimation via Aggregated Uber Data

Jan 13, 2020

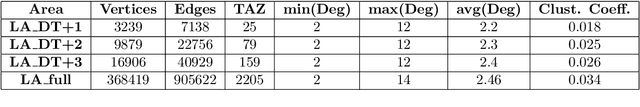

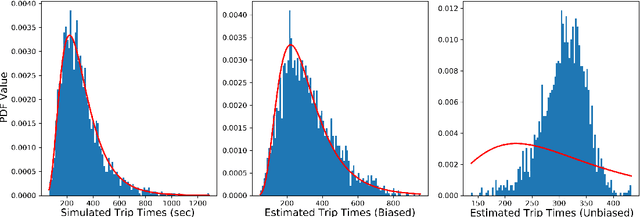

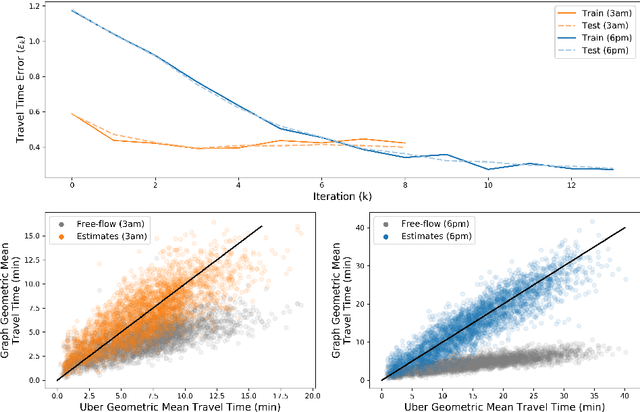

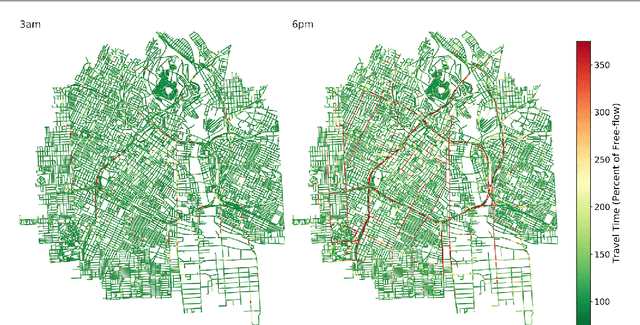

Abstract: Estimating temporal patterns in travel times along road segments in urban settings is of central importance to traffic engineers and city planners. In this work, we propose a methodology to leverage coarse-grained and aggregated travel time data to estimate the street-level travel times of a given metropolitan area. Our main focus is to estimate travel times along the arterial road segments where relevant data are often unavailable. The central idea of our approach is to leverage easy-to-obtain, aggregated data sets with broad spatial coverage, such as the data published by Uber Movement, as the fabric over which other expensive, fine-grained datasets, such as loop counter and probe data, can be overlaid. Our proposed methodology uses a graph representation of the road network and combines several techniques such as graph-based routing, trip sampling, graph sparsification, and least-squares optimization to estimate the street-level travel times. Using sampled trips and weighted shortest-path routing, we iteratively solve constrained least-squares problems to obtain the travel time estimates. We demonstrate our method on the Los Angeles metropolitan-area street network, where aggregated travel time data is available for trips between traffic analysis zones. Additionally, we present techniques to scale our approach via a novel graph pseudo-sparsification technique.
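
The least-squares step mentioned in the abstract can be sketched on a toy network. This is an illustration under stated assumptions, not the paper's implementation: the four-segment network, the routes, and the travel times are invented, the routes are held fixed (the paper re-routes trips iteratively), and nonnegativity is the only constraint, enforced here with a simple projected gradient method chosen for brevity.

```python
import numpy as np

# Each row is one sampled trip; a 1 marks a road segment on its route.
# The routes and the 4-segment network are invented for illustration.
routes = np.array(
    [
        [1, 1, 0, 0],
        [0, 1, 1, 0],
        [0, 0, 1, 1],
        [1, 0, 0, 0],
        [1, 1, 1, 1],
    ],
    dtype=float,
)

# Made-up per-segment travel times (seconds), used only to synthesize
# the aggregated trip times that the solver sees.
true_times = np.array([30.0, 45.0, 60.0, 20.0])
trip_times = routes @ true_times


def nonnegative_least_squares(A, b, n_iters=2000):
    """Minimize 0.5 * ||A x - b||^2 subject to x >= 0 by projected gradient."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2  # 1 / Lipschitz constant of the gradient
    x = np.zeros(A.shape[1])
    for _ in range(n_iters):
        gradient = A.T @ (A @ x - b)
        x = np.maximum(x - step * gradient, 0.0)  # project onto x >= 0
    return x


estimated_times = nonnegative_least_squares(routes, trip_times)
print(np.round(estimated_times, 3))
```

In the iterative scheme the abstract describes, the estimated segment times would then serve as edge weights for the next round of shortest-path routing, which yields a new route matrix and another least-squares solve.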
