Keith A. Goatman

Conformal coronary calcification volume estimation with conditional coverage via histogram clustering

Jun 04, 2025Abstract:Incidental detection and quantification of coronary calcium in CT scans could lead to the early introduction of lifesaving clinical interventions. However, over-reporting could negatively affect patient wellbeing and unnecessarily burden the medical system. Therefore, careful considerations should be taken when automatically reporting coronary calcium scores. A cluster-based conditional conformal prediction framework is proposed to provide score intervals with calibrated coverage from trained segmentation networks without retraining. The proposed method was tuned and used to calibrate predictive intervals for 3D UNet models (deterministic, MCDropout and deep ensemble) reaching similar coverage with better triage metrics compared to conventional conformal prediction. Meaningful predictive intervals of calcium scores could help triage patients according to the confidence of their risk category prediction.

* IEEE 22nd International Symposium on Biomedical Imaging (ISBI)

Improving style transfer in dynamic contrast enhanced MRI using a spatio-temporal approach

Oct 03, 2023Abstract:Style transfer in DCE-MRI is a challenging task due to large variations in contrast enhancements across different tissues and time. Current unsupervised methods fail due to the wide variety of contrast enhancement and motion between the images in the series. We propose a new method that combines autoencoders to disentangle content and style with convolutional LSTMs to model predicted latent spaces along time and adaptive convolutions to tackle the localised nature of contrast enhancement. To evaluate our method, we propose a new metric that takes into account the contrast enhancement. Qualitative and quantitative analyses show that the proposed method outperforms the state of the art on two different datasets.

Can a single image processing algorithm work equally well across all phases of DCE-MRI?

Jun 22, 2023Abstract:Image segmentation and registration are said to be challenging when applied to dynamic contrast enhanced MRI sequences (DCE-MRI). The contrast agent causes rapid changes in intensity in the region of interest and elsewhere, which can lead to false positive predictions for segmentation tasks and confound the image registration similarity metric. While it is widely assumed that contrast changes increase the difficulty of these tasks, to our knowledge no work has quantified these effects. In this paper we examine the effect of training with different ratios of contrast enhanced (CE) data on two popular tasks: segmentation with nnU-Net and Mask R-CNN and registration using VoxelMorph and VTN. We experimented further by strategically using the available datasets through pretraining and fine tuning with different splits of data. We found that to create a generalisable model, pretraining with CE data and fine tuning with non-CE data gave the best result. This interesting find could be expanded to other deep learning based image processing tasks with DCE-MRI and provide significant improvements to the models performance.

Towards Tumour Graph Learning for Survival Prediction in Head & Neck Cancer Patients

Apr 17, 2023Abstract:With nearly one million new cases diagnosed worldwide in 2020, head \& neck cancer is a deadly and common malignity. There are challenges to decision making and treatment of such cancer, due to lesions in multiple locations and outcome variability between patients. Therefore, automated segmentation and prognosis estimation approaches can help ensure each patient gets the most effective treatment. This paper presents a framework to perform these functions on arbitrary field of view (FoV) PET and CT registered scans, thus approaching tasks 1 and 2 of the HECKTOR 2022 challenge as team \texttt{VokCow}. The method consists of three stages: localization, segmentation and survival prediction. First, the scans with arbitrary FoV are cropped to the head and neck region and a u-shaped convolutional neural network (CNN) is trained to segment the region of interest. Then, using the obtained regions, another CNN is combined with a support vector machine classifier to obtain the semantic segmentation of the tumours, which results in an aggregated Dice score of 0.57 in task 1. Finally, survival prediction is approached with an ensemble of Weibull accelerated failure times model and deep learning methods. In addition to patient health record data, we explore whether processing graphs of image patches centred at the tumours via graph convolutions can improve the prognostic predictions. A concordance index of 0.64 was achieved in the test set, ranking 6th in the challenge leaderboard for this task.

The Topology-Overlap Trade-Off in Retinal Arteriole-Venule Segmentation

Mar 31, 2023Abstract:Retinal fundus images can be an invaluable diagnosis tool for screening epidemic diseases like hypertension or diabetes. And they become especially useful when the arterioles and venules they depict are clearly identified and annotated. However, manual annotation of these vessels is extremely time demanding and taxing, which calls for automatic segmentation. Although convolutional neural networks can achieve high overlap between predictions and expert annotations, they often fail to produce topologically correct predictions of tubular structures. This situation is exacerbated by the bifurcation versus crossing ambiguity which causes classification mistakes. This paper shows that including a topology preserving term in the loss function improves the continuity of the segmented vessels, although at the expense of artery-vein misclassification and overall lower overlap metrics. However, we show that by including an orientation score guided convolutional module, based on the anisotropic single sided cake wavelet, we reduce such misclassification and further increase the topology correctness of the results. We evaluate our model on public datasets with conveniently chosen metrics to assess both overlap and topology correctness, showing that our model is able to produce results on par with state-of-the-art from the point of view of overlap, while increasing topological accuracy.

A Distance Oriented Kalman Filter Particle Swarm Optimizer Applied to Multi-Modality Image Registration

Mar 20, 2018

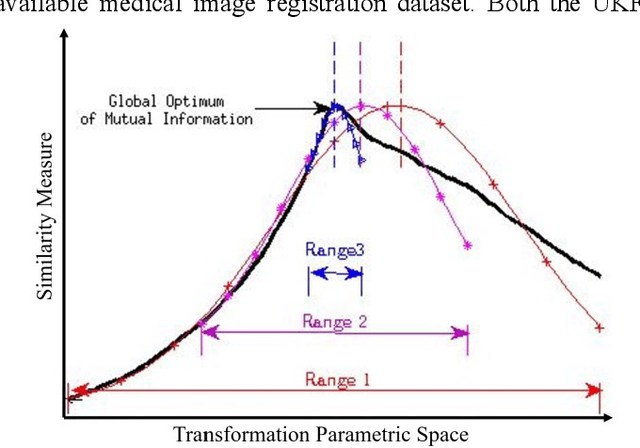

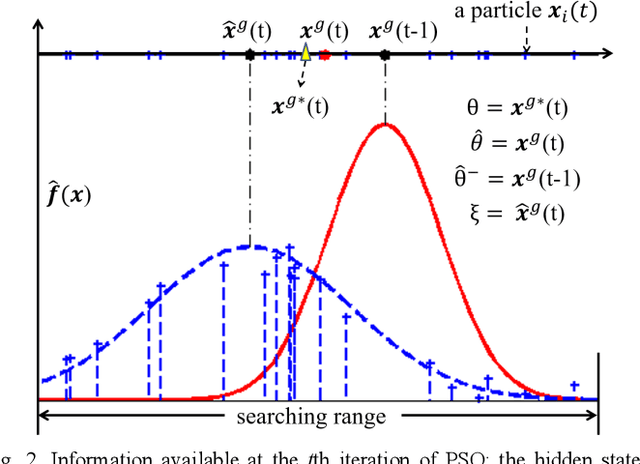

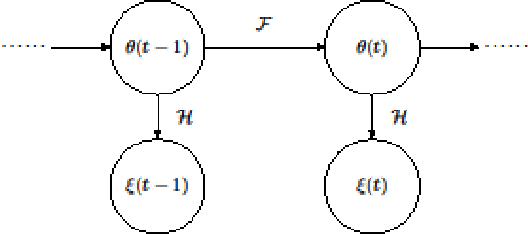

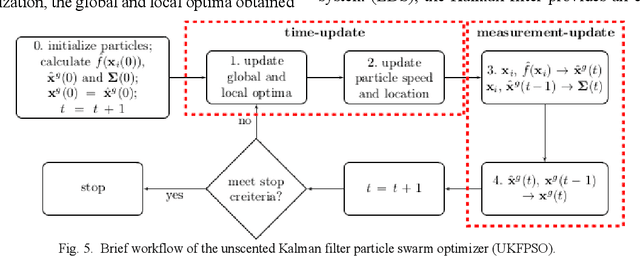

Abstract:In this paper we describe improvements to the particle swarm optimizer (PSO) made by inclusion of an unscented Kalman filter to guide particle motion. We demonstrate the effectiveness of the unscented Kalman filter PSO by comparing it with the original PSO algorithm and its variants designed to improve performance. The PSOs were tested firstly on a number of common synthetic benchmarking functions, and secondly applied to a practical three-dimensional image registration problem. The proposed methods displayed better performances for 4 out of 8 benchmark functions, and reduced the target registration errors by at least 2mm when registering down-sampled benchmark brain images. Our methods also demonstrated an ability to align images featuring motion related artefacts which all other methods failed to register. These new PSO methods provide a novel, efficient mechanism to integrate prior knowledge into each iteration of the optimization process, which can enhance the accuracy and speed of convergence in the application of medical image registration.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge