Keikichi Hirose

CASA-Based Speaker Identification Using Cascaded GMM-CNN Classifier in Noisy and Emotional Talking Conditions

Feb 11, 2021

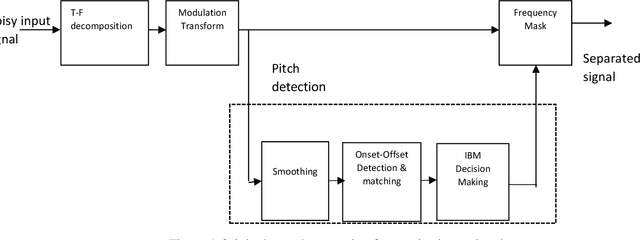

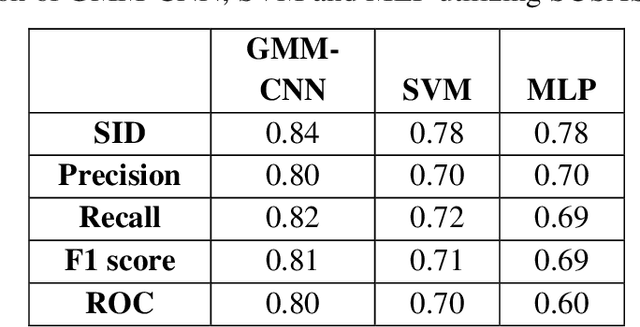

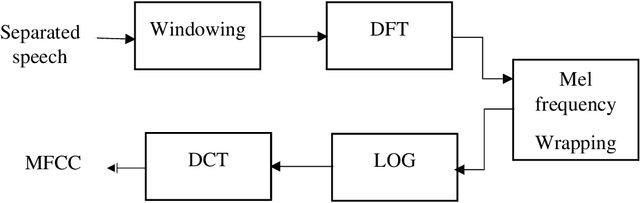

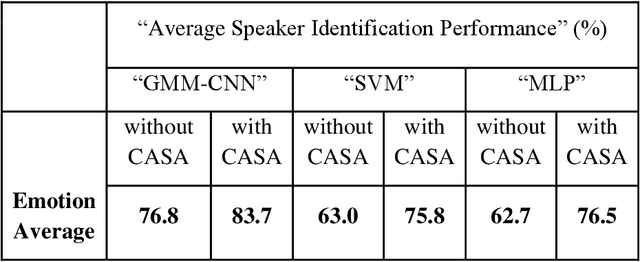

Abstract:This work aims at intensifying text-independent speaker identification performance in real application situations such as noisy and emotional talking conditions. This is achieved by incorporating two different modules: a Computational Auditory Scene Analysis CASA based pre-processing module for noise reduction and cascaded Gaussian Mixture Model Convolutional Neural Network GMM-CNN classifier for speaker identification followed by emotion recognition. This research proposes and evaluates a novel algorithm to improve the accuracy of speaker identification in emotional and highly-noise susceptible conditions. Experiments demonstrate that the proposed model yields promising results in comparison with other classifiers when Speech Under Simulated and Actual Stress SUSAS database, Emirati Speech Database ESD, the Ryerson Audio-Visual Database of Emotional Speech and Song RAVDESS database and the Fluent Speech Commands database are used in a noisy environment.

* Published in Applied Soft Computing journal

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge