Ke Shu

Cross-Modal Food Retrieval: Learning a Joint Embedding of Food Images and Recipes with Semantic Consistency and Attention Mechanism

Mar 09, 2020

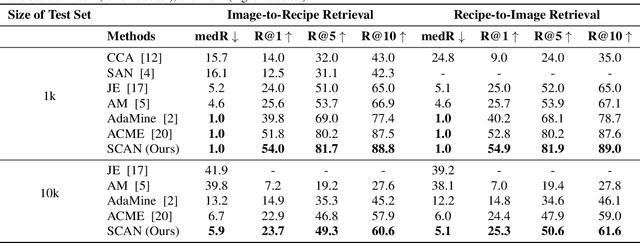

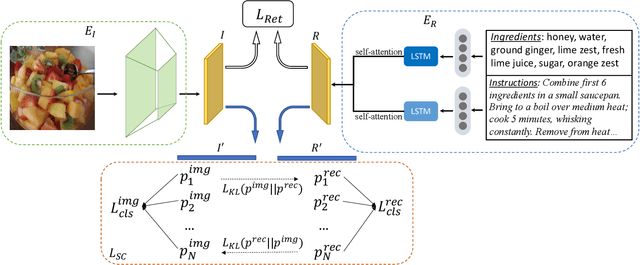

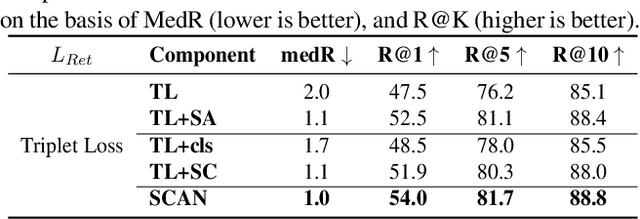

Abstract: Cross-modal food retrieval is an important task for analyzing food-related information, such as food images and cooking recipes. The goal is to learn an embedding of images and recipes in a common feature space, so that precise matching can be realized. Compared with existing cross-modal retrieval approaches, this specific problem poses two major challenges: 1) the large intra-class variance across cross-modal food data; and 2) the difficulty of obtaining discriminative recipe representations. To address these problems, we propose Semantic-Consistent and Attention-based Networks (SCAN), which regularize the embeddings of the two modalities by aligning their output semantic probabilities. In addition, we exploit a self-attention mechanism to improve the embedding of recipes. We evaluate the proposed method on the large-scale Recipe1M dataset, and the results show that it outperforms the state of the art.
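As a rough illustration of what "aligning output semantic probabilities" could look like, the sketch below penalizes disagreement between the class distributions predicted from an image embedding and from its paired recipe embedding. It assumes a symmetric KL divergence over softmax outputs; the abstract does not state which divergence is used, and the function names and toy logits are hypothetical.

```python
import math


def softmax(logits):
    """Convert raw class scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def semantic_consistency_loss(image_logits, recipe_logits):
    """Symmetric KL between the two modalities' class probabilities.

    Assumption: both logit vectors score the same set of food categories.
    """
    p_img = softmax(image_logits)
    p_rec = softmax(recipe_logits)
    return 0.5 * (kl_divergence(p_img, p_rec) + kl_divergence(p_rec, p_img))


# Toy logits over three hypothetical food categories.
aligned = semantic_consistency_loss([2.0, 0.5, -1.0], [2.0, 0.5, -1.0])
mismatched = semantic_consistency_loss([2.0, 0.5, -1.0], [-1.0, 0.5, 2.0])
```

In this sketch the loss is zero when both modalities predict the same distribution and grows as the predictions diverge, so adding it to a retrieval objective would push paired images and recipes toward consistent semantics.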

RecipeGPT: Generative Pre-training Based Cooking Recipe Generation and Evaluation System

Mar 05, 2020

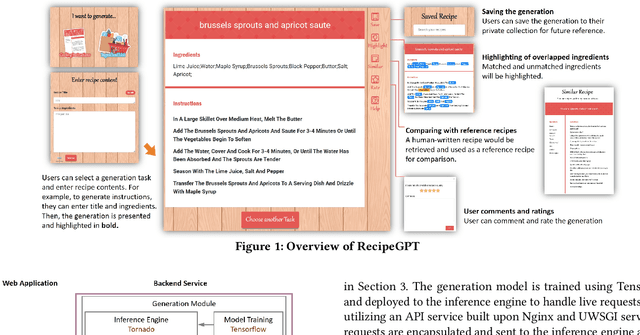

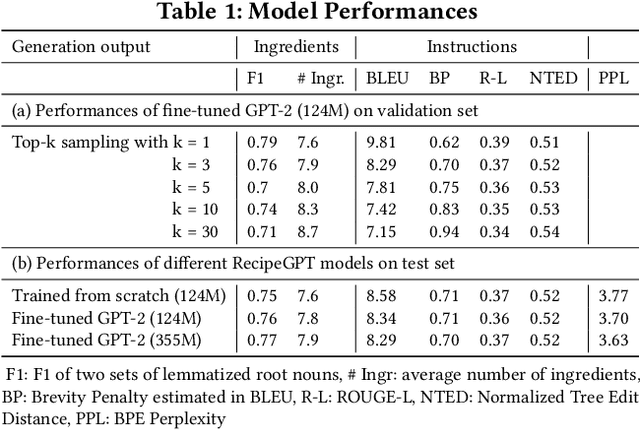

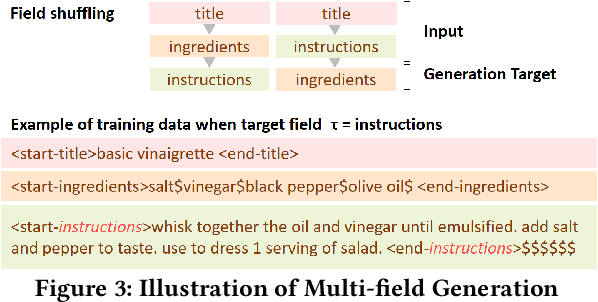

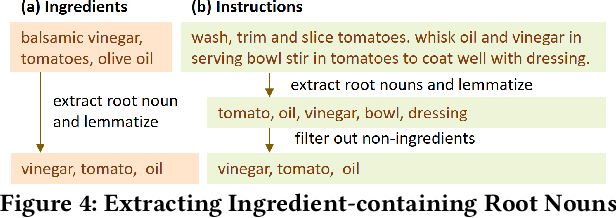

Abstract: Interest in the automatic generation of cooking recipes has been growing steadily over the past few years, thanks to the large number of cooking recipes available online. We present RecipeGPT, a novel online recipe generation and evaluation system. The system provides two modes of text generation: (1) instruction generation from a given recipe title and ingredients; and (2) ingredient generation from a recipe title and cooking instructions. Its back-end text generation module comprises a generative pre-trained language model, GPT-2, fine-tuned on a large cooking recipe dataset. Moreover, the recipe evaluation module allows users to conveniently inspect the quality of the generated recipe contents and store the results for future reference. RecipeGPT can be accessed online at https://recipegpt.org/.
