Katherine Keith

Democratizing Machine Learning for Interdisciplinary Scholars: Report on Organizing the NLP+CSS Online Tutorial Series

Nov 29, 2022

Abstract: Many scientific fields -- including biology, health, education, and the social sciences -- use machine learning (ML) to help them analyze data at an unprecedented scale. However, ML researchers who develop advanced methods rarely provide detailed tutorials showing how to apply these methods. Existing tutorials are often costly to participants, presume extensive programming knowledge, and are not tailored to specific application fields. In an attempt to democratize ML methods, we organized a year-long, free, online tutorial series targeted at teaching advanced natural language processing (NLP) methods to computational social science (CSS) scholars. Two organizers worked with fifteen subject matter experts to develop one-hour presentations with hands-on Python code for a range of ML methods and use cases, from data pre-processing to analyzing temporal variation of language change. Although live participation was more limited than expected, a comparison of pre- and post-tutorial surveys showed an increase in participants' perceived knowledge of almost one point on a 7-point Likert scale. Furthermore, participants asked thoughtful questions during tutorials and engaged readily with tutorial content afterwards, as demonstrated by 10K total views of posted tutorial recordings. In this report, we summarize our organizational efforts and distill five principles for democratizing ML+X tutorials. We hope future organizers improve upon these principles and continue to lower barriers to developing ML skills for researchers of all fields.

Fairkit, Fairkit, on the Wall, Who's the Fairest of Them All? Supporting Data Scientists in Training Fair Models

Dec 17, 2020

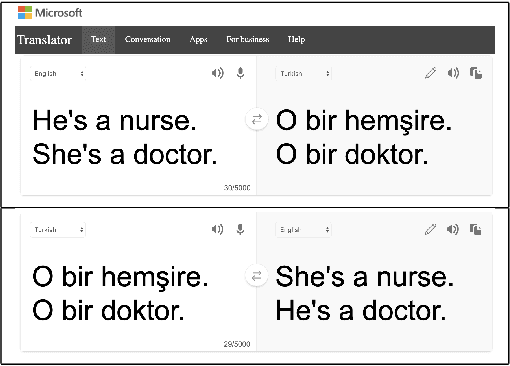

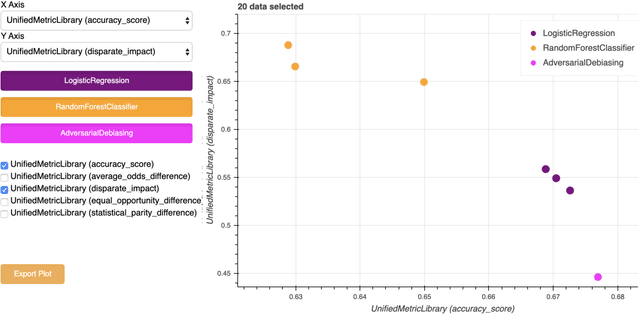

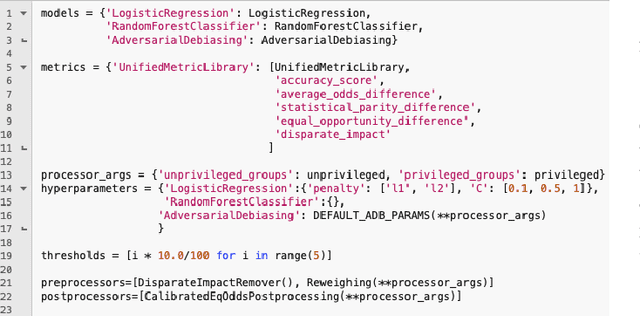

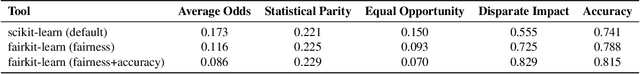

Abstract: Modern software relies heavily on data and machine learning, and affects decisions that shape our world. Unfortunately, recent studies have shown that because of biases in data, software systems frequently inject bias into their decisions, from producing better closed caption transcriptions of men's voices than of women's voices to overcharging people of color for financial loans. To address bias in machine learning, data scientists need tools that help them understand the trade-offs between model quality and fairness in their specific data domains. Toward that end, we present fairkit-learn, a toolkit for helping data scientists reason about and understand fairness. Fairkit-learn works with state-of-the-art machine learning tools and uses the same interfaces to ease adoption. It can evaluate thousands of models produced by multiple machine learning algorithms, hyperparameters, and data permutations, and compute and visualize a small Pareto-optimal set of models that describe the optimal trade-offs between fairness and quality. We evaluate fairkit-learn via a user study with 54 students, showing that students using fairkit-learn produce models that provide a better balance between fairness and quality than students using scikit-learn and IBM AI Fairness 360 toolkits. With fairkit-learn, users can select models that are up to 67% more fair and 10% more accurate than the models they are likely to train with scikit-learn.
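To make the idea of a "Pareto-optimal set of models" concrete, here is a minimal sketch of how such a set can be computed from per-model scores. It is not fairkit-learn's API or its actual implementation: the names `dominates` and `pareto_front`, the model labels, and the scores are all hypothetical, and the sketch assumes each model has already been summarized by one fairness score and one accuracy score, both oriented so that higher is better.

```python
from typing import List, Tuple

# A candidate model summarized as (name, fairness, accuracy).
# Assumption: both scores are oriented so that higher is better.
Candidate = Tuple[str, float, float]


def dominates(x: Candidate, y: Candidate) -> bool:
    """True if x is at least as good as y on both axes and strictly better on one."""
    _, fx, ax = x
    _, fy, ay = y
    return fx >= fy and ax >= ay and (fx > fy or ax > ay)


def pareto_front(candidates: List[Candidate]) -> List[Candidate]:
    """Keep only candidates that no other candidate dominates (brute force, O(n^2))."""
    return [c for c in candidates if not any(dominates(o, c) for o in candidates)]


if __name__ == "__main__":
    # Hypothetical scores for four trained models.
    models = [
        ("A", 0.90, 0.70),
        ("B", 0.80, 0.85),
        ("C", 0.70, 0.80),
        ("D", 0.60, 0.90),
    ]
    for name, fairness, accuracy in pareto_front(models):
        print(f"{name}: fairness={fairness:.2f}, accuracy={accuracy:.2f}")
```

With these made-up scores, model C is dropped because B is both fairer and more accurate, while A, B, and D remain: none of them can be improved on one axis without giving something up on the other. The brute-force pairwise check is simple and adequate for a few thousand candidates; a real toolkit may use more than two metrics or a faster algorithm.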