Karl R. Gegenfurtner

Human-Aligned Evaluation of a Pixel-wise DNN Color Constancy Model

Feb 14, 2026

Abstract: We previously investigated color constancy in photorealistic virtual reality (VR) and developed a Deep Neural Network (DNN) that predicts reflectance from rendered images. Here, we combine both approaches to compare model and human performance with respect to established color constancy mechanisms: local surround, maximum flux and spatial mean. Rather than evaluating the model against physical ground truth, model performance was assessed using the same achromatic object selection task employed in the human experiments. The model, a ResNet-based U-Net from our previous work, was pre-trained on rendered images to predict surface reflectance. We then applied transfer learning, fine-tuning only the network's decoder on images from the baseline VR condition. To parallel the human experiment, the model's output was used to perform the same achromatic object selection task across all conditions. Results show a strong correspondence between model and human behavior. Both achieved high constancy under baseline conditions and showed similar, condition-dependent performance declines when the local surround or spatial mean color cues were removed.

Deep Neural Models for color discrimination and color constancy

Dec 28, 2020

Abstract: Color constancy is our ability to perceive constant colors across varying illuminations. Here, we trained deep neural networks to be color constant and evaluated their performance with varying cues. Inputs to the networks consisted of the cone excitations in 3D-rendered images of 2115 different 3D shapes, with spectral reflectances of 1600 different Munsell chips, illuminated under 278 different natural illuminations. The models were trained to classify the reflectance of the objects. One network, Deep65, was trained under a fixed daylight D65 illumination, while DeepCC was trained under varying illuminations. Testing was done with 4 new illuminations with equally spaced CIE L*a*b* chromaticities, 2 along the daylight locus and 2 orthogonal to it. We found a high degree of color constancy for DeepCC, and constancy was higher along the daylight locus. When gradually removing cues from the scene, constancy decreased. High levels of color constancy were achieved with different DNN architectures. Both ResNets and classical ConvNets of varying degrees of complexity performed well. However, DeepCC, a convolutional network, represented colors along the 3 color dimensions of human color vision, while ResNets showed a more complex representation.

Paradox in Deep Neural Networks: Similar yet Different while Different yet Similar

Mar 12, 2019

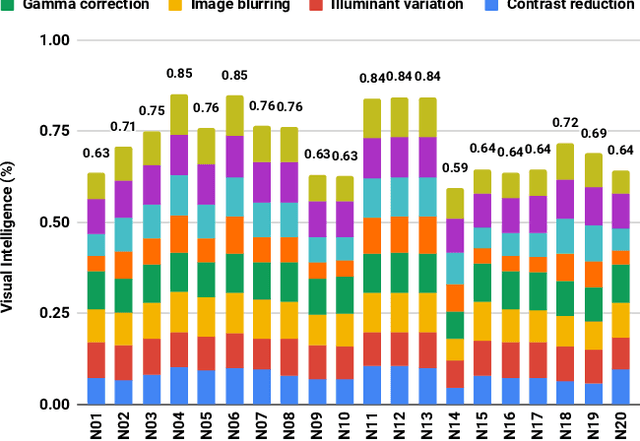

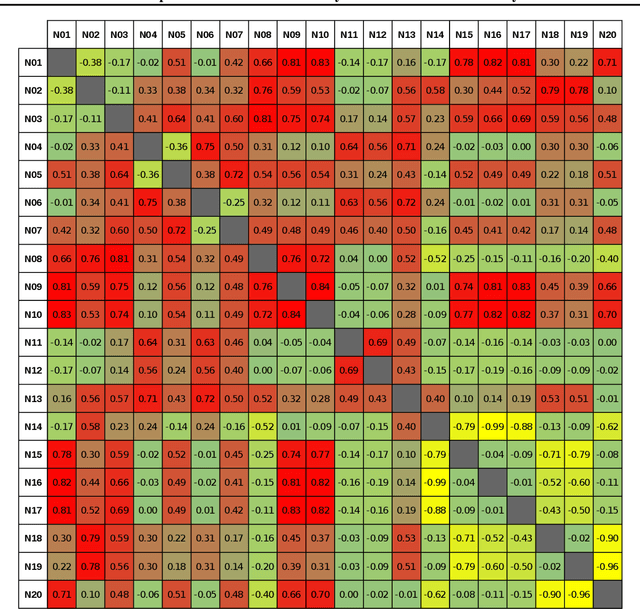

Abstract: Machine learning is advancing towards a data-science approach, which calls for a line of investigation into the knowledge learnt by deep neural networks. Limiting the comparison among networks to a predefined ability measured against ground truth does not suffice; it should also be related to the intrinsic similarity of these artificial entities. Here, we analysed multiple instances of an identical architecture trained to classify objects in static images (CIFAR and ImageNet data sets). We evaluated the performance of the networks under various distortions and compared it to the intrinsic similarity between their constituent kernels. While we expected a close correspondence between these two measures, we observed a puzzling phenomenon. Pairs of networks whose kernels' weights are over 99.9% correlated can exhibit significantly different performances, yet other pairs with no correlation can reach quite comparable levels of performance. We show implications of this for transfer learning, and argue its importance in our general understanding of what intelligence is, whether natural or artificial.
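The similarity measure mentioned in this abstract, correlation between kernel weights, can be illustrated with a minimal sketch. It assumes the weights of each network have been flattened into a plain list and that "correlation" means the Pearson coefficient; the abstract does not state the exact measure, and the names `pearson`, `kernels_a`, `kernels_b` and `kernels_c` are hypothetical. The weights here are synthetic random numbers, not values from any trained network.

```python
import math
import random


def pearson(a, b):
    """Pearson correlation between two equal-length sequences of weights."""
    if len(a) != len(b):
        raise ValueError("weight vectors must have the same length")
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


# Synthetic stand-ins for flattened kernels (assumption: 64 kernels of 3x3x3).
rng = random.Random(0)
n_weights = 64 * 3 * 3 * 3
kernels_a = [rng.gauss(0.0, 1.0) for _ in range(n_weights)]
# A slightly perturbed copy, mimicking a network after brief fine-tuning.
kernels_b = [w + rng.gauss(0.0, 0.01) for w in kernels_a]
# Independently drawn weights, mimicking a separately initialised network.
kernels_c = [rng.gauss(0.0, 1.0) for _ in range(n_weights)]

r_near = pearson(kernels_a, kernels_b)       # very close to 1
r_unrelated = pearson(kernels_a, kernels_c)  # close to 0
```

A weight correlation of this kind says nothing by itself about task accuracy, which is the mismatch the abstract reports.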

Manifestation of Image Contrast in Deep Networks

Feb 12, 2019

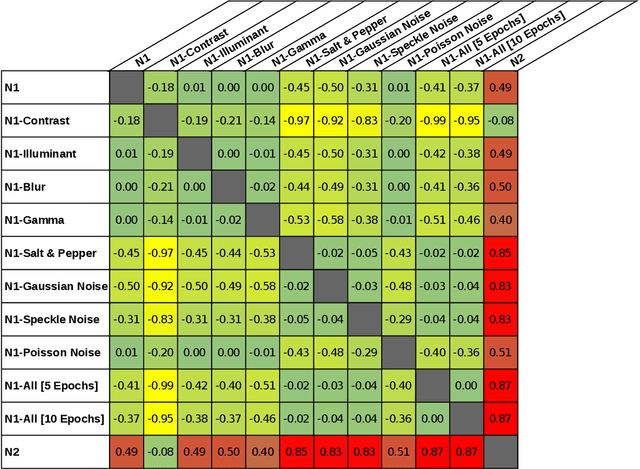

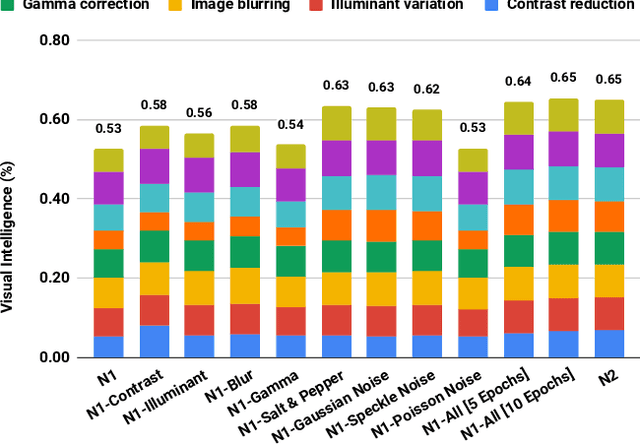

Abstract: Contrast is subject to dramatic changes across the visual field, depending on the source of light and scene configurations. Hence, the human visual system has evolved to be more sensitive to contrast than to absolute luminance. This feature is equally desired for machine vision: the ability to recognise patterns even when aspects of them are transformed due to variation in local and global contrast. In this work, we thoroughly investigate the impact of image contrast on prominent deep convolutional networks, both during the training and testing phase. Our experiments show a clear deterioration in the accuracy of all state-of-the-art networks on low-contrast images. We demonstrate that "contrast-augmentation" is a sufficient condition to endow a network with invariance to contrast. This practice shows no negative side effects; on the contrary, it might allow a model to avoid other illuminance-related overfitting. This ability can also be achieved by a short fine-tuning procedure, which opens new lines of investigation on the mechanisms involved in two networks whose weights are over 99.9% correlated, yet produce utterly different outcomes. Our further analysis suggests that the optimisation algorithm is an influential factor, though with a significantly smaller effect; and while the choice of architecture has a negligible impact on this phenomenon, the first layers appear to be more critical.
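As a rough illustration of what "contrast-augmentation" could look like in a training pipeline, the sketch below scales pixel values around a mid-gray pivot by a randomly drawn factor. This is an assumption, not the authors' procedure: the abstract does not define the contrast manipulation, and the function names, the pivot of 0.5 and the factor range are all hypothetical. Images are represented as nested lists of floats in [0, 1].

```python
import random


def adjust_contrast(image, factor, pivot=0.5):
    """Scale each pixel's distance from `pivot` by `factor`, clipped to [0, 1].

    factor = 1 leaves the image unchanged; factor = 0 yields uniform gray.
    """
    return [
        [min(1.0, max(0.0, pivot + factor * (p - pivot))) for p in row]
        for row in image
    ]


def contrast_augment(image, rng, low=0.1, high=1.0):
    """Apply a contrast factor drawn uniformly from [low, high] (assumed range)."""
    factor = rng.uniform(low, high)
    return adjust_contrast(image, factor), factor


# Usage: augment a tiny 2x2 grayscale image before feeding it to training.
image = [[0.0, 1.0], [0.2, 0.9]]
augmented, factor = contrast_augment(image, random.Random(1))
half_contrast = adjust_contrast([[0.0, 1.0]], 0.5)  # [[0.25, 0.75]]
```

Drawing a new factor for every training sample exposes the network to the same pattern at many contrast levels, which is the general idea behind augmentation of this kind.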

How is Contrast Encoded in Deep Neural Networks?

Sep 05, 2018

Abstract: Contrast is a crucial factor in visual information processing. It is desired for a visual system, whether biological or artificial, to "perceive" the world robustly under large potential changes in illumination. In this work, we studied the responses of deep neural networks (DNN) to identical images at different levels of contrast. We analysed the activation of kernels in the convolutional layers of eight prominent networks with distinct architectures (e.g. VGG and Inception). The results of our experiments indicate that those networks with a higher tolerance to alteration of contrast have more than one convolutional layer prior to the first max-pooling operator. It appears that the last convolutional layer before the first max-pooling acts as a mitigator of contrast variation in input images. In our investigation, interestingly, we observed many similarities between the mechanisms of these DNNs and biological visual systems. These comparisons allow us to understand more profoundly the underlying mechanisms of a visual system that is grounded on the basis of "data-analysis".
