Kancharagunta Kishan Babu

Underwater Image Enhancement using Generative Adversarial Networks: A Survey

Jan 10, 2025

Abstract: In recent years, there has been a surge of research on underwater image enhancement using Generative Adversarial Networks (GANs), driven by the need to overcome the challenges posed by underwater environments. Light attenuation, scattering, and color distortion severely degrade the quality of underwater images, limiting their use in critical applications. GANs have emerged as a powerful tool for enhancing underwater images because they can learn complex transformations and generate realistic outputs. These advances have been applied to real-world applications, including marine biology and ecosystem monitoring, coral reef health assessment, underwater archaeology, and autonomous underwater vehicle (AUV) navigation. This paper explores all the major approaches to underwater image enhancement, from physical and physics-free models to Convolutional Neural Network (CNN)-based models and state-of-the-art GAN-based methods. It provides a comprehensive analysis of these methods and of the evaluation metrics, datasets, and loss functions they use, offering a holistic view of the field. Furthermore, the paper examines the limitations and challenges of current methods, such as generalization issues, high computational demands, and dataset biases, and suggests potential directions for future research.

PCSGAN: Perceptual Cyclic-Synthesized Generative Adversarial Networks for Thermal and NIR to Visible Image Transformation

Feb 13, 2020

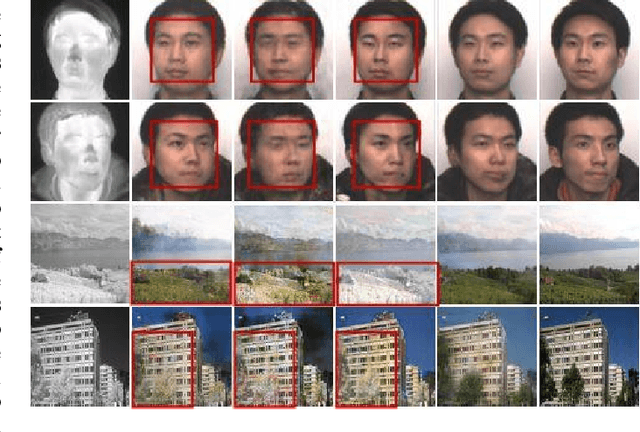

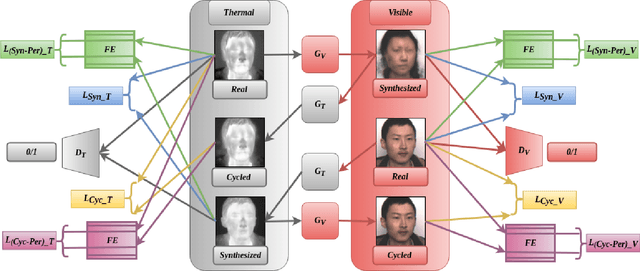

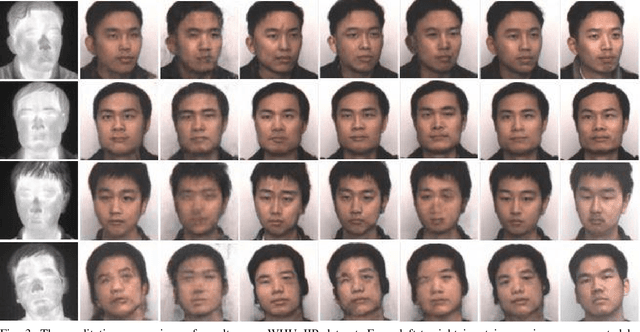

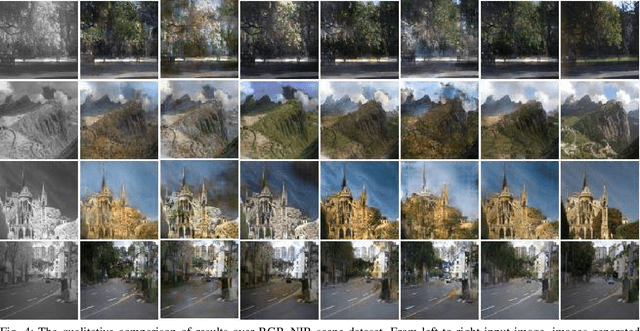

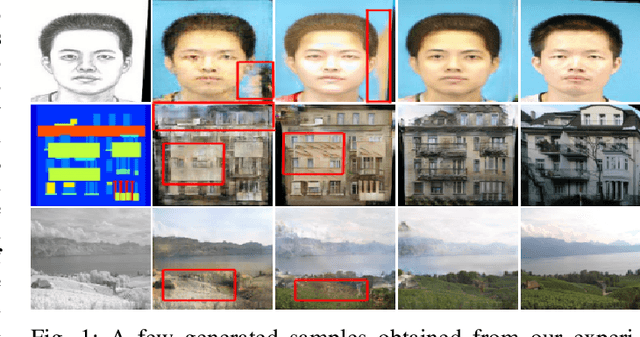

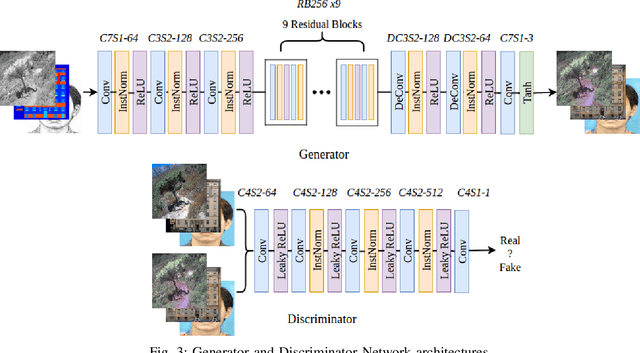

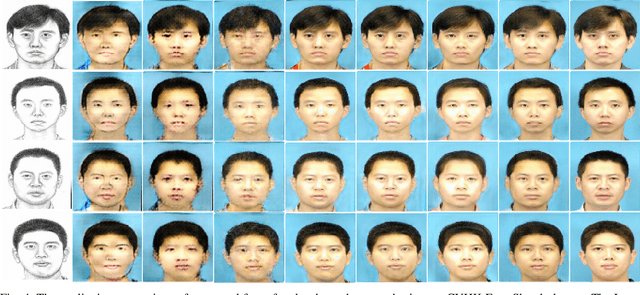

Abstract: In many real-world scenarios, it is difficult to capture images in the visible (VIS) light spectrum due to poor lighting conditions. Images can still be captured in such scenarios using Near-Infrared (NIR) and Thermal (THM) cameras, but NIR and THM images contain limited detail. Thus, there is a need to transform images from THM/NIR to VIS for better understanding. This is a non-trivial task, however, due to large domain discrepancies and the lack of abundant datasets. Generative Adversarial Networks (GANs) are now able to transform images from one domain to another. Most available GAN-based methods are trained with an objective function that combines adversarial and pixel-wise losses (such as L1 or L2). For THM/NIR-to-VIS transformation, however, the quality of the images produced with such an objective is still not up to the mark. Thus, better objective functions are needed to improve the quality, fine details, and realism of the transformed images. To address these issues, a new model for THM/NIR-to-VIS image transformation called the Perceptual Cyclic-Synthesized Generative Adversarial Network (PCSGAN) is introduced. PCSGAN combines perceptual (i.e., feature-based) losses with the pixel-wise and adversarial losses. Both quantitative and qualitative measures are used to evaluate PCSGAN on the WHU-IIP face and RGB-NIR scene datasets. The proposed PCSGAN outperforms state-of-the-art image transformation models, including Pix2pix, DualGAN, CycleGAN, PS2GAN, and PAN, in terms of the SSIM, MSE, PSNR, and LPIPS evaluation measures. The code is available at https://github.com/KishanKancharagunta/PCSGAN.
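Of the evaluation measures named above, MSE and PSNR are the simplest full-reference metrics: MSE averages the squared pixel differences, and PSNR = 10 log10(MAX² / MSE) expresses that error in decibels relative to the peak pixel value MAX. A minimal sketch, assuming 8-bit images flattened into lists of pixel values (so MAX = 255); this illustrates the standard definitions and is not the evaluation code from the PCSGAN repository. SSIM and LPIPS are more involved and are omitted here.

```python
import math
from typing import Sequence


def mse(reference: Sequence[float], generated: Sequence[float]) -> float:
    """Mean squared error between two equally sized, flattened images."""
    if len(reference) != len(generated) or not reference:
        raise ValueError("images must be non-empty and of the same size")
    return sum((r - g) ** 2 for r, g in zip(reference, generated)) / len(reference)


def psnr(reference: Sequence[float], generated: Sequence[float],
         max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; higher means closer to the reference."""
    error = mse(reference, generated)
    if error == 0:
        return math.inf  # identical images have no noise
    return 10.0 * math.log10(max_value ** 2 / error)
```

For example, `psnr([0, 0, 0, 0], [0, 0, 0, 10])` corresponds to an MSE of 25 and a PSNR of about 34.15 dB. Lower MSE and higher PSNR both indicate a transformed image closer to the ground truth.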

CDGAN: Cyclic Discriminative Generative Adversarial Networks for Image-to-Image Transformation

Jan 15, 2020

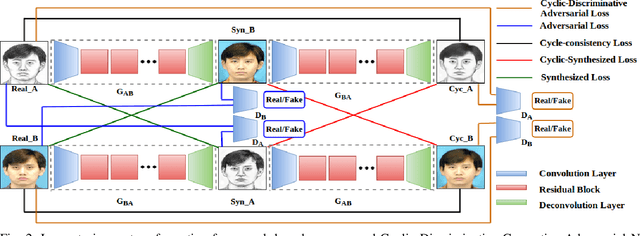

Abstract: Image-to-image transformation is the problem of transforming an input image in one visual representation into an output image in another visual representation. Since 2014, Generative Adversarial Networks (GANs) have opened a new direction for tackling this problem through an architecture built from generator and discriminator networks. Many recent works, such as Pix2Pix, CycleGAN, DualGAN, PS2MAN, and CSGAN, have addressed this problem with suitable generator and discriminator networks and different choices of losses in their objective functions. Despite these works, a gap remains: the generated images should look more realistic and be as close as possible to the ground-truth images. In this work, we introduce a new image-to-image transformation network named Cyclic Discriminative Generative Adversarial Networks (CDGAN) to fill this gap. CDGAN generates high-quality, more realistic images by adding discriminator networks for the cycled images to the original CycleGAN architecture. To demonstrate its performance, CDGAN is tested on three baseline image-to-image transformation datasets. Performance is judged with quantitative metrics of pixel-wise, structural-level, and perceptual-level similarity. The qualitative results are also analyzed and compared with state-of-the-art methods. The proposed CDGAN clearly outperforms all the state-of-the-art methods on the three baseline image-to-image transformation datasets.
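The architectural change described above can be sketched as an extra adversarial term per domain. This is a minimal sketch under stated assumptions: CycleGAN-style generators G_AB (domain A to B) and G_BA (B to A), the standard log-likelihood GAN loss, and cycled-image discriminators written D^c_A and D^c_B. All symbols here are illustrative notation, not the exact formulation from the CDGAN paper. Each new discriminator tries to tell a real image apart from its reconstruction after a full cycle:

```latex
\begin{aligned}
\mathcal{L}^{A}_{\text{cyc-adv}} &= \mathbb{E}_{a}\big[\log D^{c}_{A}(a)\big]
  + \mathbb{E}_{a}\big[\log\big(1 - D^{c}_{A}(G_{BA}(G_{AB}(a)))\big)\big],\\
\mathcal{L}^{B}_{\text{cyc-adv}} &= \mathbb{E}_{b}\big[\log D^{c}_{B}(b)\big]
  + \mathbb{E}_{b}\big[\log\big(1 - D^{c}_{B}(G_{AB}(G_{BA}(b)))\big)\big],\\
\mathcal{L} &= \mathcal{L}_{\text{CycleGAN}} + \mathcal{L}^{A}_{\text{cyc-adv}} + \mathcal{L}^{B}_{\text{cyc-adv}}.
\end{aligned}
```

Here L_CycleGAN stands for the usual CycleGAN objective (adversarial losses on the translated images plus the weighted cycle-consistency loss). The generators minimize L while all discriminators maximize it, so the cycled images are pushed to be realistic in their own right rather than only close to the input pixel by pixel.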

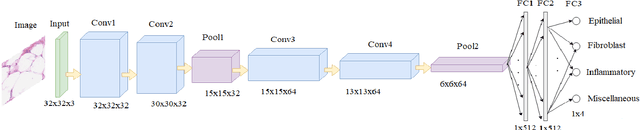

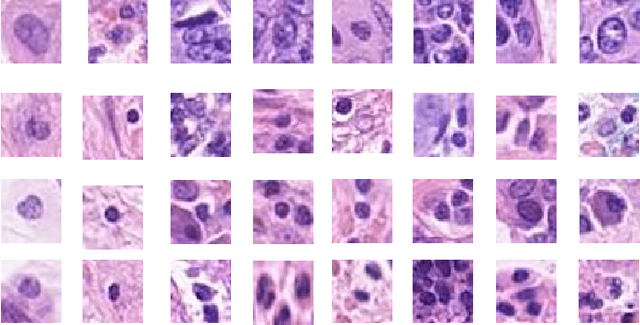

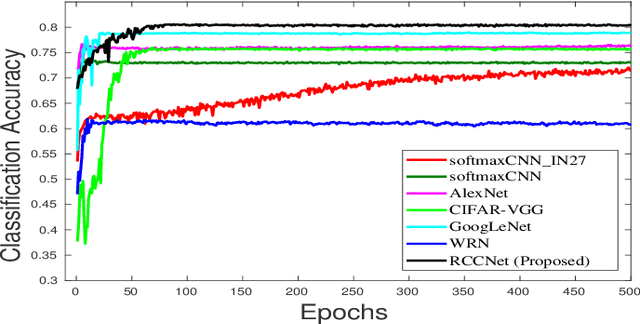

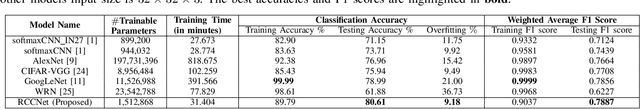

RCCNet: An Efficient Convolutional Neural Network for Histological Routine Colon Cancer Nuclei Classification

Oct 20, 2018

Abstract: Efficient and precise classification of histological cell nuclei is of utmost importance due to its potential applications in medical image analysis. It would help medical practitioners better understand and explore various factors in cancer treatment. The classification of histological cell nuclei is a challenging task due to cellular heterogeneity. This paper proposes RCCNet, an efficient Convolutional Neural Network (CNN)-based architecture for classifying histological routine colon cancer nuclei. The main objective of this network is to keep the CNN model as simple as possible. The proposed RCCNet model has only 1,512,868 learnable parameters, significantly fewer than popular CNN models such as AlexNet, CIFARVGG, GoogLeNet, and WRN. The experiments are conducted on the publicly available routine colon cancer histological dataset "CRCHistoPhenotypes". The results of the proposed RCCNet model are compared with five state-of-the-art CNN models in terms of accuracy, weighted average F1 score, and training time. The proposed method achieves a classification accuracy of 80.61% and a weighted average F1 score of 0.7887. RCCNet is also more efficient in terms of training time and generalizes better, being less prone to over-fitting the data.
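The weighted average F1 score reported above computes F1 separately for each class and averages the results with weights proportional to each class's support (its number of true samples), which keeps rare nucleus types from being either ignored or over-counted. A minimal sketch of the standard definition (the same weighting as scikit-learn's `average="weighted"`), assuming labels are given as plain Python sequences; the class names in the usage example are illustrative toy labels, and this is not RCCNet's evaluation code.

```python
from collections import Counter
from typing import Hashable, Sequence


def accuracy(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Fraction of samples whose predicted label equals the true label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label sequences must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def weighted_f1(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Per-class F1 averaged with weights equal to each class's share of true labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label sequences must be non-empty and of equal length")
    support = Counter(y_true)  # true samples per class; unseen classes get weight 0
    pairs = list(zip(y_true, y_pred))
    score = 0.0
    for cls, n_true in support.items():
        tp = sum(1 for t, p in pairs if t == cls and p == cls)
        fp = sum(1 for t, p in pairs if t != cls and p == cls)
        fn = n_true - tp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn)  # tp + fn == n_true, which is always > 0 here
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        score += f1 * n_true / len(y_true)
    return score
```

With `y_true = ["epithelial", "epithelial", "fibroblast", "inflammatory"]` and `y_pred = ["epithelial", "fibroblast", "fibroblast", "epithelial"]`, accuracy is 0.5 while the weighted F1 is 5/12 (about 0.417), showing how the two measures can diverge when errors fall unevenly across classes.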
