CDGAN: Cyclic Discriminative Generative Adversarial Networks for Image-to-Image Transformation


Jan 15, 2020

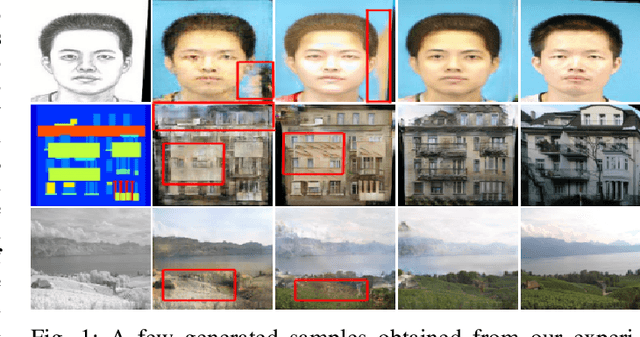

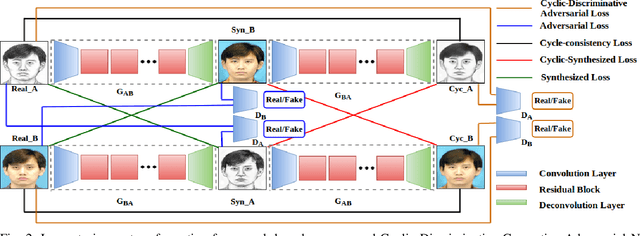

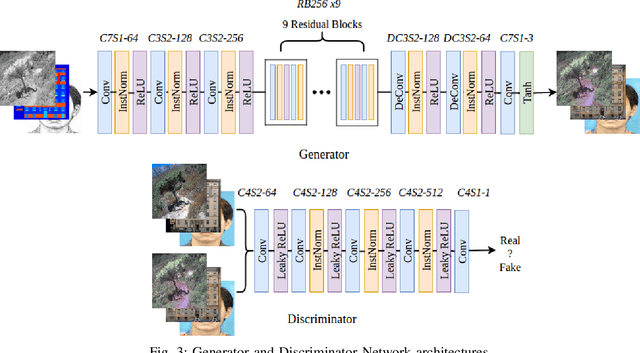

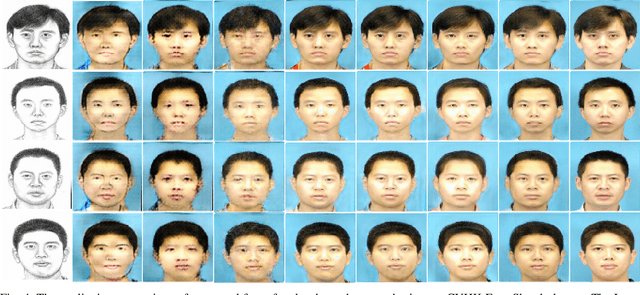

Image-to-image transformation is a class of problems in which an input image in one visual representation is transformed into an output image in another visual representation. Since 2014, Generative Adversarial Networks (GANs) have opened a new direction for tackling this problem by introducing generator and discriminator networks into their architecture. Many recent works, such as Pix2Pix, CycleGAN, DualGAN, PS2MAN and CSGAN, address this problem with suitable generator and discriminator networks and with different choices of losses in their objective functions. Despite these works, a gap remains: the generated images should look more realistic and be as close as possible to the ground truth images. In this work, we introduce a new image-to-image transformation network named Cyclic Discriminative Generative Adversarial Networks (CDGAN) that addresses this gap. The proposed CDGAN generates higher-quality and more realistic images by adding discriminator networks for the cycled images to the original CycleGAN architecture. To demonstrate its performance, the proposed CDGAN is tested on three benchmark image-to-image transformation datasets. Quantitative metrics covering pixel-wise similarity, structural-level similarity and perceptual-level similarity are used to judge the performance. The qualitative results are also analyzed and compared with those of state-of-the-art methods. The proposed CDGAN outperforms the compared state-of-the-art methods on all three benchmark image-to-image transformation datasets.
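The central idea, discriminating the cycled images as well as the directly translated ones, can be illustrated with a minimal NumPy sketch. Everything specific in it is an assumption for illustration and is not taken from the abstract: the least-squares adversarial loss, the L1 cycle-consistency term, the weight `lam`, the function names, and the choice to reuse the two domain discriminators `D_a` and `D_b` for the cycled images rather than training separate ones.

```python
import numpy as np

def adv_d_loss(d_real, d_fakes):
    """Least-squares discriminator loss (assumed form): real -> 1, each fake -> 0."""
    loss = np.mean((d_real - 1.0) ** 2)
    for d_fake in d_fakes:
        loss += np.mean(d_fake ** 2)
    return loss

def adv_g_loss(d_fakes):
    """Least-squares generator loss (assumed form): push each fake score toward 1."""
    return sum(np.mean((d - 1.0) ** 2) for d in d_fakes)

def l1(a, b):
    """Mean absolute difference, used here as the cycle-consistency term."""
    return np.mean(np.abs(a - b))

def cdgan_style_losses(x_a, x_b, G_ab, G_ba, D_a, D_b, lam=10.0):
    """Hypothetical CycleGAN-style objective with extra discriminator terms
    on the cycled images. lam is an illustrative weight, not a value from the paper."""
    fake_b, fake_a = G_ab(x_a), G_ba(x_b)    # translated images
    cyc_a, cyc_b = G_ba(fake_b), G_ab(fake_a)  # cycled (reconstructed) images

    g_loss = (
        adv_g_loss([D_b(fake_b), D_a(fake_a)])   # CycleGAN adversarial terms
        + adv_g_loss([D_a(cyc_a), D_b(cyc_b)])   # added terms on cycled images
        + lam * (l1(cyc_a, x_a) + l1(cyc_b, x_b))  # cycle consistency
    )
    d_loss = (
        adv_d_loss(D_a(x_a), [D_a(fake_a), D_a(cyc_a)])
        + adv_d_loss(D_b(x_b), [D_b(fake_b), D_b(cyc_b)])
    )
    return g_loss, d_loss
```

In this sketch the generators and discriminators are passed in as plain callables, so any framework's networks (or toy functions) can be substituted. Dropping the terms that involve `cyc_a` and `cyc_b` inside the adversarial losses recovers a plain CycleGAN-style objective, which makes the difference described above easy to see.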
