Kai-Min Chung

Online TSP with Predictions

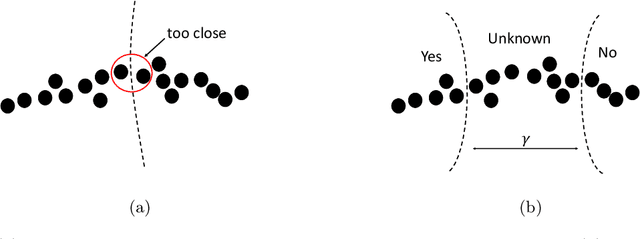

Jun 30, 2022

Abstract: We initiate the study of online routing problems with predictions, inspired by recent results in the area of learning-augmented algorithms. A popular framework for overcoming pessimistic worst-case competitive analysis is the learning-augmented online algorithm, which incorporates predictions in a black-box manner: it outperforms existing algorithms when the predictions are accurate, while maintaining theoretical guarantees even when the predictions are extremely erroneous. In this study, we investigate the classical online traveling salesman problem (OLTSP), where future requests are augmented with predictions. Unlike the prediction models in previous studies, each actual request in the OLTSP, associated with its arrival time and position, may not coincide with the predicted one, which makes the problem harder to handle. We study different prediction models and design algorithms that improve the best-known results in the different settings. Moreover, we generalize the proposed results to the online dial-a-ride problem.

On the Sample Complexity of PAC Learning Quantum Process

Oct 25, 2018

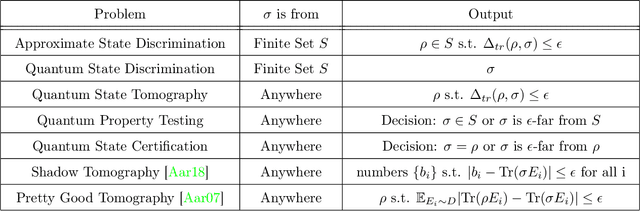

Abstract: We generalize the PAC (probably approximately correct) learning model to the quantum world by generalizing the concepts from classical functions to quantum processes, defining the problem of *PAC learning quantum process*, and studying its sample complexity. In the problem of PAC learning quantum process, we want to learn an $\epsilon$-approximation of an unknown quantum process $c^*$ from a known finite concept class $C$ with probability $1-\delta$ using samples $\{(x_1,c^*(x_1)),(x_2,c^*(x_2)),\dots\}$, where $\{x_1,x_2, \dots\}$ are computational basis states sampled from an unknown distribution $D$ and $\{c^*(x_1),c^*(x_2),\dots\}$ are the (possibly mixed) quantum states output by $c^*$. The special case of PAC learning quantum process under constant input reduces to a natural problem which we call approximate state discrimination, where we are given copies of an unknown quantum state $c^*$ from a known finite set $C$, and we want to learn with probability $1-\delta$ an $\epsilon$-approximation of $c^*$ with as few copies of $c^*$ as possible. We show that the problem of PAC learning quantum process can be solved with $$O\left(\frac{\log|C| + \log(1/ \delta)} { \epsilon^2}\right)$$ samples when the outputs are pure states and $$O\left(\frac{\log^3 |C|(\log |C|+\log(1/ \delta))} { \epsilon^2}\right)$$ samples if the outputs can be mixed. Some implications of our results are that we can PAC-learn a polynomial-sized quantum circuit with polynomially many samples, and that approximate state discrimination can be solved with polynomially many samples even when the concept class size $|C|$ is exponential in the number of qubits, an exponential improvement over full state tomography.
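To get a feel for how the two bounds above scale, here is a minimal Python sketch that evaluates the expressions inside the $O(\cdot)$. It assumes the hidden constant is 1 and uses natural logarithms, neither of which is stated in the abstract, so the outputs indicate growth rates only and not actual sample counts. The function names and the choice to pass $\log_2|C|$ as a parameter (so that exponentially large classes stay representable) are illustrative.

```python
import math


def pure_state_scale(log2_class_size, eps, delta):
    """Expression inside O(.) for pure outputs, with the constant assumed to be 1."""
    log_c = log2_class_size * math.log(2)  # natural log of |C|
    return (log_c + math.log(1 / delta)) / eps**2


def mixed_state_scale(log2_class_size, eps, delta):
    """Expression inside O(.) for mixed outputs, with the constant assumed to be 1."""
    log_c = log2_class_size * math.log(2)  # natural log of |C|
    return log_c**3 * (log_c + math.log(1 / delta)) / eps**2


# Hypothetical example: |C| = 2^(n^2) for n qubits, so log2|C| = n^2.
for n in (5, 10, 20):
    pure = pure_state_scale(n * n, eps=0.1, delta=0.01)
    mixed = mixed_state_scale(n * n, eps=0.1, delta=0.01)
    print(n, round(pure), round(mixed))
```

Because both expressions depend on $|C|$ only through $\log|C|$, a concept class of size exponential in a polynomial of the number of qubits still gives a polynomial value, which is the point made in the abstract.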

On the Algorithmic Power of Spiking Neural Networks

Mar 28, 2018

Abstract: Spiking Neural Networks (SNN) are mathematical models in neuroscience that describe the dynamics among a set of neurons which interact with each other by firing spike signals. Interestingly, recent works observed that for an integrate-and-fire model, when configured appropriately (e.g., after the parameters are learned properly), the neurons' firing rate converges to an optimal solution of Lasso and certain quadratic optimization problems. Thus, SNN can be viewed as a natural algorithm for solving such convex optimization problems. However, theoretical understanding of SNN algorithms remains limited. In particular, only the convergence result for the Lasso problem is known, but the bounds of the convergence rate remain unknown. Therefore, we do not know any explicit complexity bounds for SNN algorithms. In this work, we investigate the algorithmic power of the integrate-and-fire SNN model after the parameters are properly learned/configured. In particular, we explore what algorithms SNN can implement. We start by formulating a clean discrete-time SNN model to facilitate the algorithmic study. We consider two SNN dynamics and obtain the following results.

* We first consider an arguably simplest SNN dynamics with a threshold spiking rule, which we call simple SNN. We show that simple SNN solves the least square problem for a matrix $A\in\mathbb{R}^{m\times n}$ and vector $\mathbf{b} \in \mathbb{R}^m$ with timestep complexity $O(\kappa n/\epsilon)$.
* For the under-determined case, we observe that simple SNN may solve the $\ell_1$ minimization problem using an interesting primal-dual algorithm, which solves the dual problem by a gradient-based algorithm while updating the primal solution along the way. We analyze a variant dynamics and use simulation to serve as partial evidence to support the conjecture.
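The terms "threshold spiking rule" and "firing rate" used above can be illustrated with a toy single-neuron simulation. This is a generic discrete-time integrate-and-fire sketch, not the simple SNN dynamics defined in the paper: the constant input, the reset-by-subtraction rule, and all names are assumptions made for illustration.

```python
def firing_rate(input_current, threshold, steps):
    """Simulate one integrate-and-fire neuron and return spikes per timestep.

    Toy model (an assumption, not the paper's dynamics): the potential
    accumulates a constant input and is reduced by the threshold on each spike.
    """
    potential = 0.0
    spikes = 0
    for _ in range(steps):
        potential += input_current      # integrate the constant input
        if potential >= threshold:      # threshold spiking rule
            spikes += 1
            potential -= threshold      # reset by subtraction after a spike
    return spikes / steps


rate = firing_rate(input_current=0.3, threshold=1.0, steps=1000)
print(rate)
```

For an input between 0 and the threshold, the returned rate should approach `input_current / threshold` as `steps` grows, which gives the simplest picture of a firing rate converging to a target value over time.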
