Kai Morino

Model-Size Reduction for Reservoir Computing by Concatenating Internal States Through Time

Jun 11, 2020

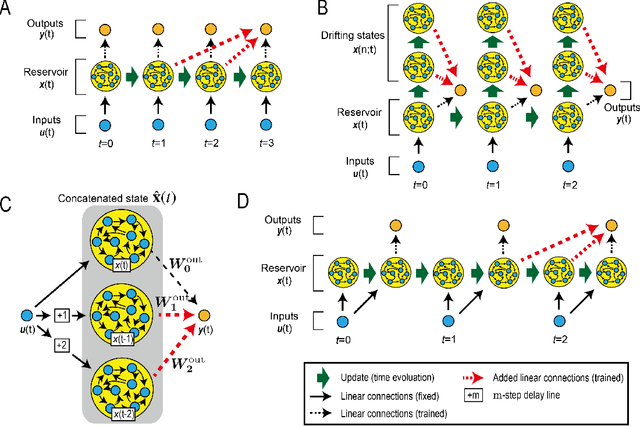

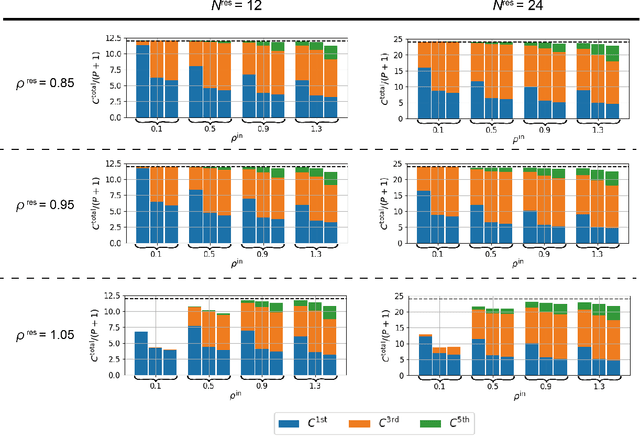

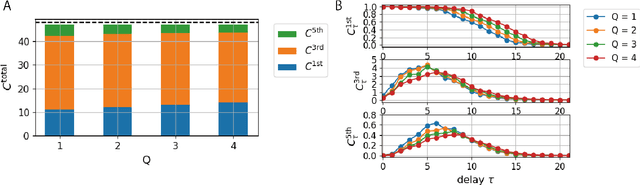

Abstract: Reservoir computing (RC) is a machine learning algorithm that can learn complex time series from data very rapidly based on the use of high-dimensional dynamical systems, such as random networks of neurons, called "reservoirs." To implement RC in edge computing, it is highly important to reduce the amount of computational resources that RC requires. In this study, we propose methods that reduce the size of the reservoir by inputting the past or drifting states of the reservoir to the output layer at the current time step. These proposed methods are analyzed based on information processing capacity, which is a performance measure of RC proposed by Dambre et al. (2012). In addition, we evaluate the effectiveness of the proposed methods on time-series prediction tasks: the generalized Henon-map and NARMA. On these tasks, we found that the proposed methods were able to reduce the size of the reservoir to as little as one tenth without a substantial increase in regression error. Because the applications of the proposed methods are not limited to a specific network structure of the reservoir, the proposed methods could further improve the energy efficiency of RC-based systems, such as FPGAs and photonic systems.
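
The idea of feeding past reservoir states to the output layer can be sketched roughly as follows. This is only an illustration under stated assumptions, not the authors' implementation: it assumes a small tanh echo state network with a ridge-regression readout, and the function names (`run_reservoir`, `concat_delayed`, `fit_ridge`), the reservoir size, and all hyperparameters are hypothetical choices made for the example.

```python
import numpy as np

def run_reservoir(u, n_nodes=20, spectral_radius=0.9, seed=0):
    """Drive a random tanh reservoir with a scalar input series u (assumed setup)."""
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(n_nodes, n_nodes))
    # Rescale so the largest eigenvalue magnitude equals spectral_radius.
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))
    w_in = rng.uniform(-1.0, 1.0, size=n_nodes)
    x = np.zeros(n_nodes)
    states = np.empty((len(u), n_nodes))
    for t, u_t in enumerate(u):
        x = np.tanh(W @ x + w_in * u_t)
        states[t] = x
    return states

def concat_delayed(states, n_delays, step=1):
    """Stack x(t), x(t - step), ..., x(t - n_delays * step) into one feature row.

    The first n_delays * step time steps are dropped because their
    delayed states are not available.
    """
    T = len(states)
    start = n_delays * step
    cols = [states[start - k * step : T - k * step] for k in range(n_delays + 1)]
    return np.hstack(cols)

def fit_ridge(X, y, alpha=1e-6):
    """Ridge-regression readout with a bias column appended to X."""
    X1 = np.hstack([X, np.ones((len(X), 1))])
    return np.linalg.solve(X1.T @ X1 + alpha * np.eye(X1.shape[1]), X1.T @ y)

# Toy usage: a 20-node reservoir read out through 3 extra delayed copies,
# so the output layer sees 80 features while only 20 nodes are simulated.
u = np.random.default_rng(1).uniform(-1.0, 1.0, size=500)
states = run_reservoir(u)
features = concat_delayed(states, n_delays=3)
target = u[3:]  # placeholder target aligned with the trimmed feature rows
w_out = fit_ridge(features, target)
```

In this sketch the number of simulated nodes stays fixed while the readout dimension grows with the number of delays, which is the trade-off the abstract describes; how well it works on a given task is not shown here.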

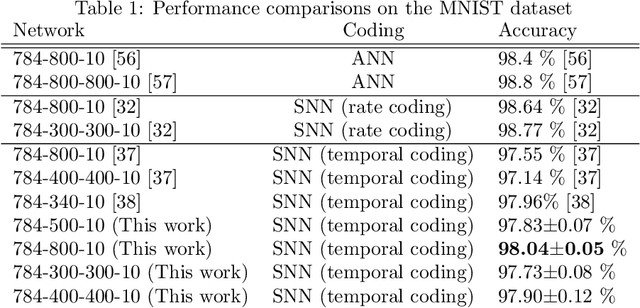

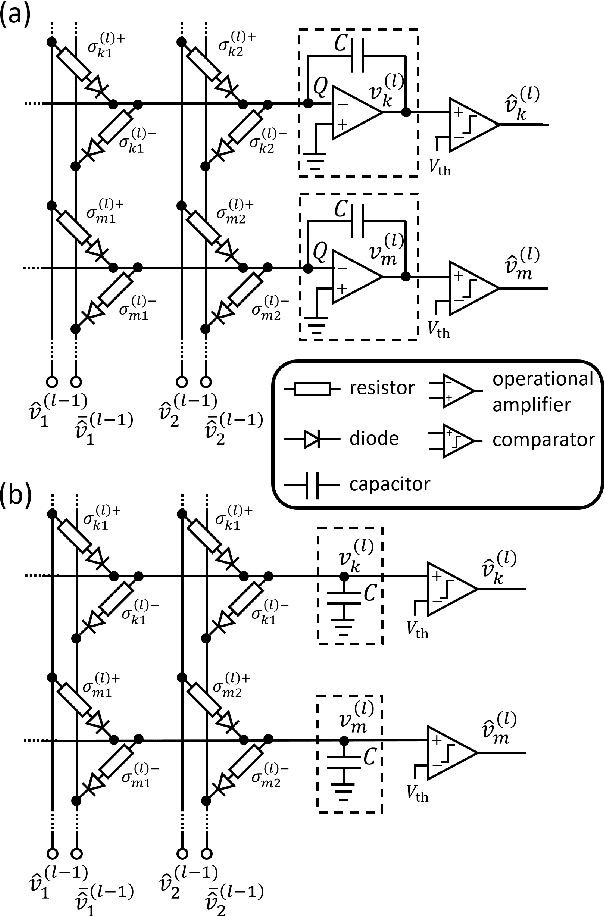

A Supervised Learning Algorithm for Multilayer Spiking Neural Networks Based on Temporal Coding Toward Energy-Efficient VLSI Processor Design

Jan 08, 2020

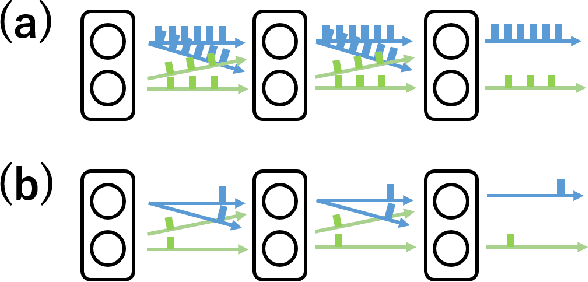

Abstract: Spiking neural networks (SNNs) are brain-inspired mathematical models with the ability to process information in the form of spikes. SNNs are expected to provide not only new machine-learning algorithms, but also energy-efficient computational models when implemented in VLSI circuits. In this paper, we propose a novel supervised learning algorithm for SNNs based on temporal coding. A spiking neuron in this algorithm is designed to facilitate analog VLSI implementations with analog resistive memory, by which ultra-high energy efficiency can be achieved. We also propose several techniques to improve the performance on a recognition task, and show that the classification accuracy of the proposed algorithm is as high as that of the state-of-the-art temporal coding SNN algorithms on the MNIST dataset. Finally, we discuss the robustness of the proposed SNNs against variations that arise from the device manufacturing process and are unavoidable in analog VLSI implementation. We also propose a technique to suppress the effects of variations in the manufacturing process on the recognition performance.

Predicting Glaucoma Visual Field Loss by Hierarchically Aggregating Clustering-based Predictors

Mar 23, 2016

Abstract: This study addresses the issue of predicting glaucomatous visual field loss from patient disease datasets. Our goal is to accurately predict the progress of the disease in individual patients. As very few measurements are available for each patient, it is difficult to produce good predictors for individuals. A recently proposed clustering-based method enhances the power of prediction using patient data with similar spatiotemporal patterns. Each patient is categorized into a cluster of patients, and a predictive model is constructed using all of the data in the class. Predictions are highly dependent on the quality of clustering, but it is difficult to identify the best clustering method. Thus, we propose a method for aggregating cluster-based predictors to obtain better prediction accuracy than from a single cluster-based prediction. Further, the method achieves very high performance by hierarchically aggregating experts generated from several cluster-based methods. We use real datasets to demonstrate that our method performs significantly better than conventional clustering-based and patient-wise regression methods, because the hierarchical aggregating strategy has a mechanism whereby good predictors in a small community can thrive.
