K S Sesh Kumar

Submodular Kernels for Efficient Rankings

May 26, 2021

Abstract:Many algorithms for ranked data become computationally intractable as the number of objects grows due to complex geometric structure induced by rankings. An additional challenge is posed by partial rankings, i.e. rankings in which the preference is only known for a subset of all objects. For these reasons, state-of-the-art methods cannot scale to real-world applications, such as recommender systems. We address this challenge by exploiting geometric structure of ranked data and additional available information about the objects to derive a submodular kernel for ranking. The submodular kernel combines the efficiency of submodular optimization with the theoretical properties of kernel-based methods. We demonstrate that the submodular kernel drastically reduces the computational cost compared to state-of-the-art kernels and scales well to large datasets while attaining good empirical performance.

Sliced Multi-Marginal Optimal Transport

Feb 14, 2021

Abstract:We study multi-marginal optimal transport, a generalization of optimal transport that allows us to define discrepancies between multiple measures. It provides a framework to solve multi-task learning problems and to perform barycentric averaging. However, multi-marginal distances between multiple measures are typically challenging to compute because they require estimating a transport plan with $N^P$ variables. In this paper, we address this issue in the following way: 1) we efficiently solve the one-dimensional multi-marginal Monge-Wasserstein problem for a classical cost function in closed form, and 2) we propose a higher-dimensional multi-marginal discrepancy via slicing and study its generalized metric properties. We show that computing the sliced multi-marginal discrepancy is massively scalable for a large number of probability measures with support as large as $10^7$ samples. Our approach can be applied to solving problems such as barycentric averaging, multi-task density estimation and multi-task reinforcement learning.

Fast Decomposable Submodular Function Minimization using Constrained Total Variation

May 27, 2019

Abstract:We consider the problem of minimizing the sum of submodular set functions assuming minimization oracles of each summand function. Most existing approaches reformulate the problem as the convex minimization of the sum of the corresponding Lov\'asz extensions and the squared Euclidean norm, leading to algorithms requiring total variation oracles of the summand functions; without further assumptions, these more complex oracles require many calls to the simpler minimization oracles often available in practice. In this paper, we consider a modified convex problem requiring constrained version of the total variation oracles that can be solved with significantly fewer calls to the simple minimization oracles. We support our claims by showing results on graph cuts for 2D and 3D graphs

Differentially Private Empirical Risk Minimization with Sparsity-Inducing Norms

May 13, 2019

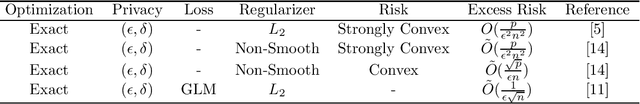

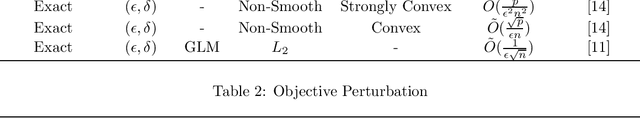

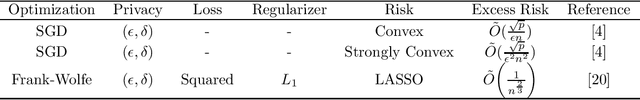

Abstract:Differential privacy is concerned about the prediction quality while measuring the privacy impact on individuals whose information is contained in the data. We consider differentially private risk minimization problems with regularizers that induce structured sparsity. These regularizers are known to be convex but they are often non-differentiable. We analyze the standard differentially private algorithms, such as output perturbation, Frank-Wolfe and objective perturbation. Output perturbation is a differentially private algorithm that is known to perform well for minimizing risks that are strongly convex. Previous works have derived excess risk bounds that are independent of the dimensionality. In this paper, we assume a particular class of convex but non-smooth regularizers that induce structured sparsity and loss functions for generalized linear models. We also consider differentially private Frank-Wolfe algorithms to optimize the dual of the risk minimization problem. We derive excess risk bounds for both these algorithms. Both the bounds depend on the Gaussian width of the unit ball of the dual norm. We also show that objective perturbation of the risk minimization problems is equivalent to the output perturbation of a dual optimization problem. This is the first work that analyzes the dual optimization problems of risk minimization problems in the context of differential privacy.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge