Justin Luke

Real-time Control of Electric Autonomous Mobility-on-Demand Systems via Graph Reinforcement Learning

Nov 09, 2023

Abstract: Operators of Electric Autonomous Mobility-on-Demand (E-AMoD) fleets need to make several real-time decisions, such as matching available cars to ride requests, rebalancing idle cars to areas of high demand, and charging vehicles to ensure sufficient range. While this problem can be posed as a linear program that optimizes flows over a space-charge-time graph, the size of the resulting optimization problem does not allow for real-time implementation in realistic settings. In this work, we present the E-AMoD control problem through the lens of reinforcement learning and propose a graph network-based framework to achieve drastically improved scalability and superior performance over heuristics. Specifically, we adopt a bi-level formulation where we (1) leverage a graph network-based RL agent to specify a desired next state in the space-charge graph, and (2) solve more tractable linear programs to best achieve the desired state while ensuring feasibility. Experiments using real-world data from San Francisco and New York City show that our approach achieves up to 89% of the profits of the theoretically optimal solution while achieving more than a 100x speedup in computation time. Furthermore, our approach outperforms the best domain-specific heuristics with comparable runtimes, increasing profits by up to 3x. Finally, we highlight promising zero-shot transfer capabilities of our learned policy on tasks such as inter-city generalization and service area expansion, thus showing the utility, scalability, and flexibility of our framework.
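
The hand-off between the two levels of the bi-level formulation can be illustrated with a small sketch. It is not the paper's implementation: it assumes, hypothetically, that the agent's output is a set of non-negative weights over (region, charge level) nodes, and it only computes the integer vehicle targets and the per-node surplus or deficit that a lower-level linear program would then have to resolve. The function names `desired_counts` and `imbalance` are invented for illustration.

```python
from typing import Dict, Hashable

Node = Hashable  # e.g. a (region, charge_level) tuple in the space-charge graph


def desired_counts(weights: Dict[Node, float], fleet_size: int) -> Dict[Node, int]:
    """Turn non-negative node weights into integer vehicle targets.

    Uses largest-remainder rounding so the targets sum exactly to fleet_size.
    The weights stand in for a learned policy's output (an assumption).
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must have a positive sum")
    raw = {n: fleet_size * w / total for n, w in weights.items()}
    counts = {n: int(r) for n, r in raw.items()}  # floor, since raw >= 0
    leftover = fleet_size - sum(counts.values())
    # Give the remaining vehicles to the nodes with the largest fractional parts.
    by_remainder = sorted(raw, key=lambda n: raw[n] - counts[n], reverse=True)
    for n in by_remainder[:leftover]:
        counts[n] += 1
    return counts


def imbalance(current: Dict[Node, int], desired: Dict[Node, int]) -> Dict[Node, int]:
    """Positive values are surplus vehicles, negative values are deficits."""
    nodes = set(current) | set(desired)
    return {n: current.get(n, 0) - desired.get(n, 0) for n in nodes}


if __name__ == "__main__":
    weights = {("A", 1): 2.0, ("B", 1): 1.0, ("C", 0): 1.0}
    current = {("A", 1): 5, ("B", 1): 1, ("C", 0): 1}
    target = desired_counts(weights, fleet_size=7)
    print(target)
    print(imbalance(current, target))
```

In the approach the abstract describes, the second step is a linear program that also enforces feasibility (for example, charge and travel-time limits); the surplus and deficit values above would only be inputs to such a program.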

Joint Optimization of Autonomous Electric Vehicle Fleet Operations and Charging Station Siting

Jul 21, 2021

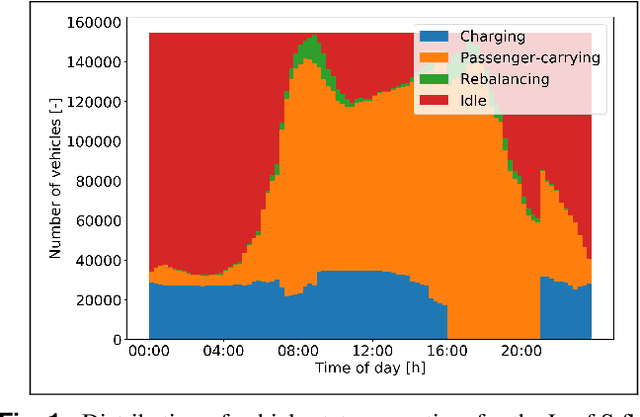

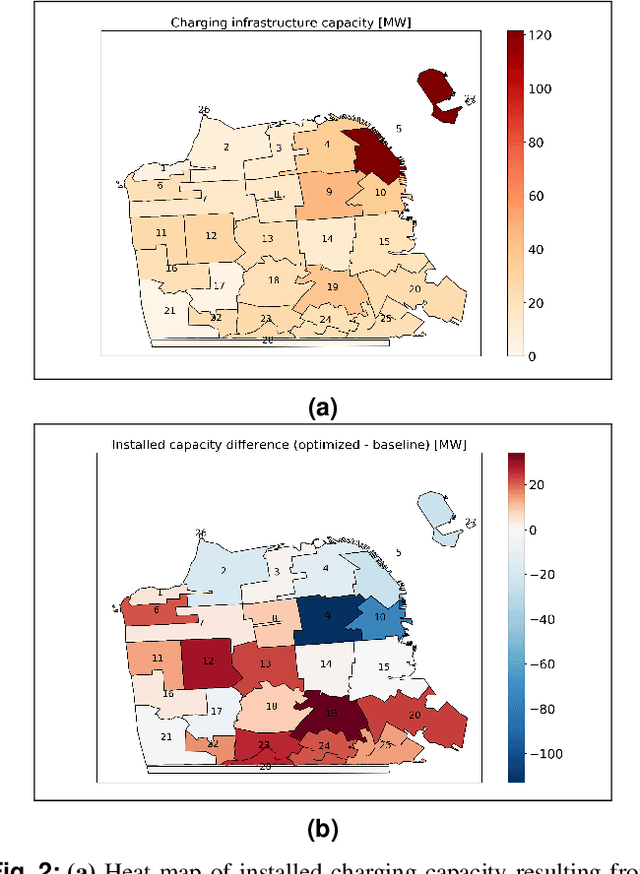

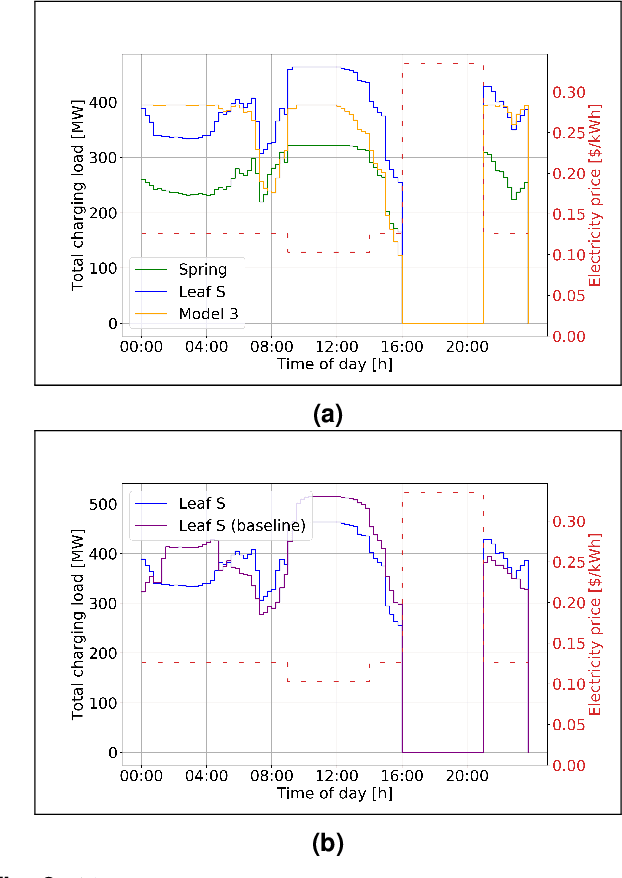

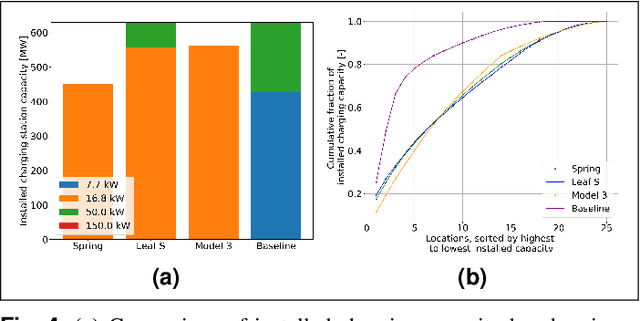

Abstract: Charging infrastructure is the coupling link between power and transportation networks, so determining charging station siting is necessary for planning both power and transportation systems. While previous works have either optimized charging station siting given historic travel behavior, or optimized fleet routing and charging given an assumed placement of the stations, this paper introduces a linear program that optimizes station siting and macroscopic fleet operations jointly. Given an electricity retail rate and a set of travel demand requests, the optimization minimizes the total cost for an autonomous EV fleet, comprising travel costs, station procurement costs, fleet procurement costs, and electricity costs, including demand charges. Specifically, the optimization returns the number of charging plugs for each charging rate (e.g., Level 2, DC fast charging) at each candidate location, as well as the optimal routing and charging of the fleet. In a case study of an electric vehicle fleet operating in San Francisco, our results show that, albeit with range limitations, small EVs with low procurement costs and high energy efficiencies are the most cost-effective in terms of total ownership costs. Furthermore, the optimal siting of charging stations is more spatially distributed than the current siting of stations, consisting mainly of high-power Level 2 AC stations (16.8 kW) with a small share of DC fast charging stations and no standard 7.7 kW Level 2 stations. Optimal siting reduces the total costs, empty vehicle travel, and peak charging load by up to 10%.
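
Schematically, the cost structure listed in the abstract could be written as the objective below. The symbols are illustrative assumptions rather than the paper's notation: $x$ stands for the fleet's routing and charging flows, $s$ for the number of plugs of each charging rate at each candidate location, and $n$ for the fleet size. The constraints (demand satisfaction, charge dynamics, plug capacity) are not stated in the abstract and are omitted here.

$$
\min_{x,\, s,\, n} \; C_{\text{travel}}(x) + C_{\text{station}}(s) + C_{\text{fleet}}(n) + C_{\text{energy}}(x) + C_{\text{demand}}(x)
$$

Here $C_{\text{energy}}$ and $C_{\text{demand}}$ together make up the electricity cost, with the demand charge term depending on the peak charging load rather than on total energy consumed.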
