Junfu Chen

AutoRefine: From Trajectories to Reusable Expertise for Continual LLM Agent Refinement

Jan 30, 2026

Abstract:Large language model agents often fail to accumulate knowledge from experience, treating each task as an independent challenge. Recent methods extract experience as flattened textual knowledge, which cannot capture procedural logic of complex subtasks. They also lack maintenance mechanisms, causing repository degradation as experience accumulates. We introduce AutoRefine, a framework that extracts and maintains dual-form Experience Patterns from agent execution histories. For procedural subtasks, we extract specialized subagents with independent reasoning and memory. For static knowledge, we extract skill patterns as guidelines or code snippets. A continuous maintenance mechanism scores, prunes, and merges patterns to prevent repository degradation. Evaluated on ALFWorld, ScienceWorld, and TravelPlanner, AutoRefine achieves 98.4%, 70.4%, and 27.1% respectively, with 20-73% step reductions. On TravelPlanner, automatic extraction exceeds manually designed systems (27.1% vs 12.1%), demonstrating its ability to capture procedural coordination.

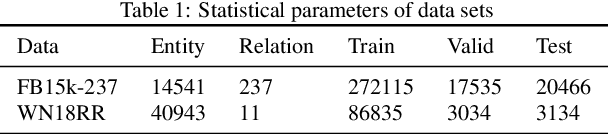

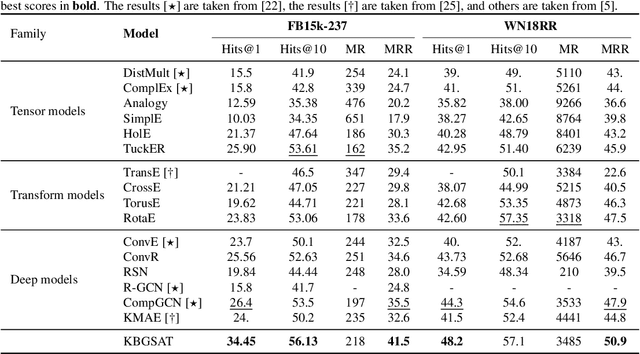

Semi-supervised Training for Knowledge Base Graph Self-attention Networks on Link Prediction

Sep 03, 2022

Abstract:Link prediction aims to address the incompleteness of knowledge graphs, which arises from the difficulty of collecting facts from the real world. GCN-based models are widely applied to link prediction due to their sophistication, but they suffer from two problems in their structure and training process: 1) the transformation methods of GCN layers become increasingly complex in GCN-based knowledge representation models; 2) because the knowledge graph collection process is incomplete, the labeled negative samples contain many uncollected true facts. Therefore, this paper investigates the characteristics of the information aggregation coefficient (self-attention) of adjacent nodes and redesigns the self-attention mechanism of the GAT structure. Meanwhile, inspired by human thinking habits, we design a semi-supervised self-training method over pre-trained models. Experimental results on the benchmark datasets FB15k-237 and WN18RR show that the proposed self-attention mechanism and semi-supervised self-training method effectively improve performance on the link prediction task. On FB15k-237, for example, the proposed method improves Hits@1 by about 30%.

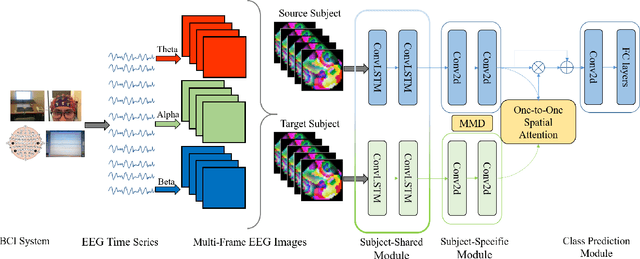

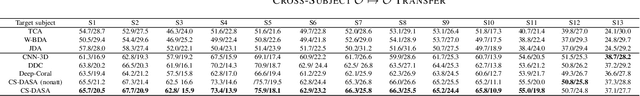

Cross-Subject Domain Adaptation for Multi-Frame EEG Images

Jun 12, 2021

Abstract:Working memory (WM) is a basic part of human cognition and plays an important role in the study of human cognitive load. Among various brain imaging techniques, electroencephalography (EEG) has shown advantages in ease of access and reliability. However, one critical challenge is that individual differences may lead to ineffective results, especially when an established model meets an unfamiliar subject. In this work, we propose a cross-subject deep adaptation model with spatial attention (CS-DASA) to generalize workload classification across subjects. First, we transform time-series EEG data into multi-frame EEG images that incorporate more spatio-temporal information. Next, the subject-shared module in CS-DASA receives multi-frame EEG image data from both source and target subjects and learns common feature representations. Then, in the subject-specific module, the maximum mean discrepancy is used to measure the domain distribution divergence in a reproducing kernel Hilbert space, which adds an effective penalty loss for domain adaptation. Additionally, a subject-to-subject spatial attention mechanism is employed to focus on the most discriminative spatial features in the EEG image data. Experiments conducted on a public WM EEG dataset containing 13 subjects show that the proposed model achieves better performance than existing state-of-the-art methods.
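The maximum mean discrepancy mentioned in the abstract above can be illustrated with a minimal sketch. This is not the CS-DASA implementation: the RBF kernel, the `bandwidth` value, the biased estimator, and the synthetic 4-dimensional "features" are all assumptions chosen for illustration, and the function names are hypothetical.

```python
import math
import random


def rbf_kernel(x, y, bandwidth=1.0):
    """Gaussian (RBF) kernel between two feature vectors (assumed kernel choice)."""
    sq_dist = sum((xi - yi) ** 2 for xi, yi in zip(x, y))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


def mean_kernel(a, b, bandwidth):
    """Average kernel value over all pairs (x in a, y in b)."""
    total = sum(rbf_kernel(x, y, bandwidth) for x in a for y in b)
    return total / (len(a) * len(b))


def mmd_squared(source, target, bandwidth=1.0):
    """Biased estimate of squared MMD: E[k(s,s')] + E[k(t,t')] - 2 E[k(s,t)]."""
    return (mean_kernel(source, source, bandwidth)
            + mean_kernel(target, target, bandwidth)
            - 2.0 * mean_kernel(source, target, bandwidth))


def sample(rng, n, dim, mean):
    """Draw n synthetic feature vectors from an isotropic Gaussian."""
    return [[rng.gauss(mean, 1.0) for _ in range(dim)] for _ in range(n)]


rng = random.Random(0)
source_feats = sample(rng, 100, 4, 0.0)   # stand-in for source-subject features
same_dist = sample(rng, 100, 4, 0.0)      # target drawn from the same distribution
shifted = sample(rng, 100, 4, 1.5)        # target with a shifted mean

mmd_same = mmd_squared(source_feats, same_dist)
mmd_shifted = mmd_squared(source_feats, shifted)
print(f"MMD^2 (same distribution):    {mmd_same:.4f}")
print(f"MMD^2 (shifted distribution): {mmd_shifted:.4f}")
```

In a domain adaptation setting, a quantity like `mmd_squared` would typically be added to the classification loss as a penalty, so that minimizing it pulls source and target feature distributions together.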

Remote sensing image fusion based on Bayesian GAN

Sep 20, 2020

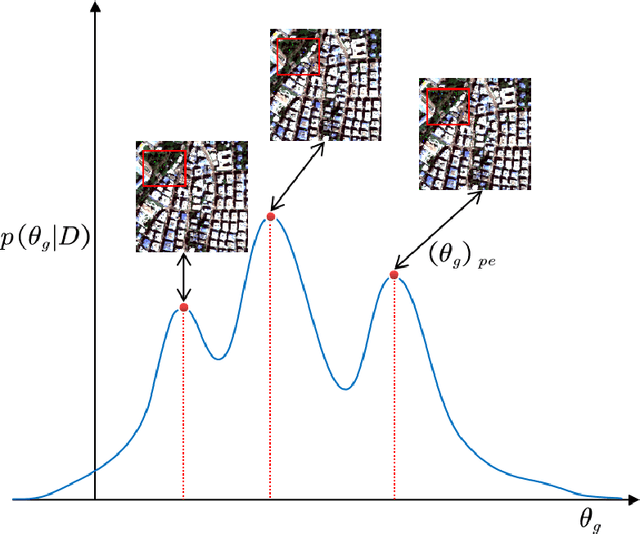

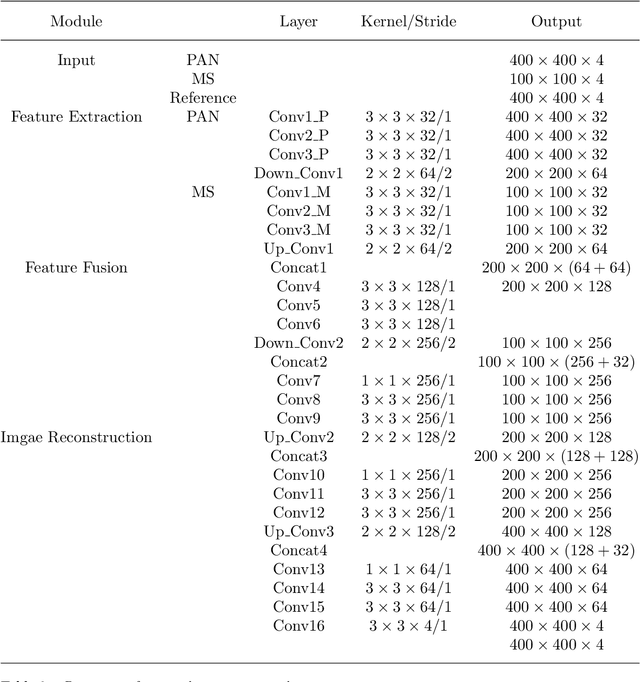

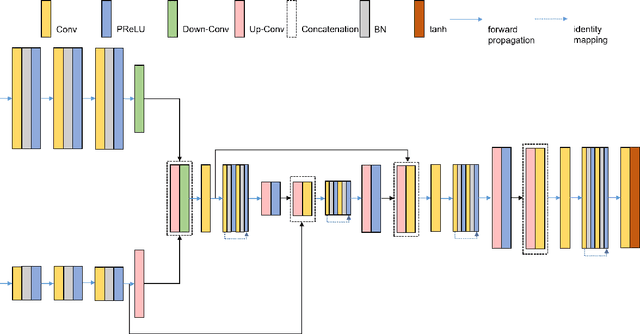

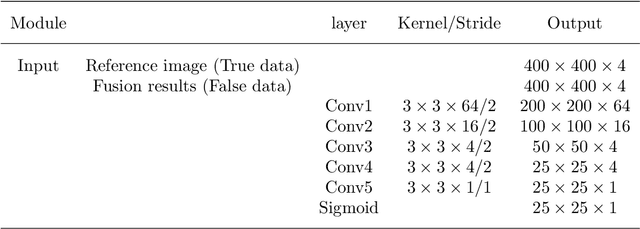

Abstract:Remote sensing image fusion technology (pan-sharpening) is an important means of improving the information capacity of remote sensing images. Inspired by the efficient parameter-space posterior sampling of Bayesian neural networks, in this paper we propose a Bayesian Generative Adversarial Network based on Preconditioned Stochastic Gradient Langevin Dynamics (PGSLD-BGAN) to improve pan-sharpening. Unlike many traditional generative models that consider only one optimal solution (which might be only locally optimal), the proposed PGSLD-BGAN performs Bayesian inference on the network parameters and explores the generator posterior distribution, which assists in selecting appropriate generator parameters. First, we build a two-stream generator network with PAN and MS images as input, which consists of three parts: feature extraction, feature fusion, and image reconstruction. Then, we leverage a Markov discriminator to enhance the generator's ability to reconstruct the fused image, so that the resulting image retains more details. Finally, introducing the Preconditioned Stochastic Gradient Langevin Dynamics policy, we perform Bayesian inference on the generator network. Experiments on the QuickBird and WorldView datasets show that the proposed model can effectively fuse PAN and MS images and is competitive with, or even superior to, the state of the art in terms of subjective and objective metrics.
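As a rough illustration of the Preconditioned Stochastic Gradient Langevin Dynamics idea named in the abstract above, the sketch below samples a one-dimensional Gaussian "posterior" rather than GAN generator parameters. Everything here is an assumption for illustration: the toy target N(2, 0.5^2), the RMSprop-style preconditioner, the hyperparameter values, and the function names are hypothetical, and the small curvature-correction term of the full method is omitted.

```python
import math
import random

TARGET_MEAN = 2.0   # assumed toy posterior mean
TARGET_STD = 0.5    # assumed toy posterior standard deviation


def grad_log_posterior(theta):
    """Gradient of log N(theta | TARGET_MEAN, TARGET_STD^2) with respect to theta."""
    return -(theta - TARGET_MEAN) / (TARGET_STD ** 2)


def psgld_samples(n_steps, step_size=0.01, decay=0.99, damping=1e-2, seed=0):
    """Run a simplified preconditioned SGLD chain and return all visited states."""
    rng = random.Random(seed)
    theta = 0.0
    second_moment = 0.0   # running average of squared gradients
    samples = []
    for _ in range(n_steps):
        grad = grad_log_posterior(theta)
        second_moment = decay * second_moment + (1.0 - decay) * grad * grad
        precond = 1.0 / (damping + math.sqrt(second_moment))
        # Langevin update: preconditioned gradient drift plus matched Gaussian noise.
        drift = 0.5 * step_size * precond * grad
        noise = math.sqrt(step_size * precond) * rng.gauss(0.0, 1.0)
        theta += drift + noise
        samples.append(theta)
    return samples


chain = psgld_samples(60000)
kept = chain[10000:]                      # discard burn-in
posterior_mean = sum(kept) / len(kept)
print(f"estimated posterior mean: {posterior_mean:.3f}")
```

The point of the sketch is that the chain keeps a set of plausible parameter values instead of a single optimum; in a Bayesian GAN, samples like these would be drawn over generator weights.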
