Julien Peloton

The Tiny Time-series Transformer: Low-latency High-throughput Classification of Astronomical Transients using Deep Model Compression

Mar 15, 2023Abstract:A new golden age in astronomy is upon us, dominated by data. Large astronomical surveys are broadcasting unprecedented rates of information, demanding machine learning as a critical component in modern scientific pipelines to handle the deluge of data. The upcoming Legacy Survey of Space and Time (LSST) of the Vera C. Rubin Observatory will raise the big-data bar for time-domain astronomy, with an expected 10 million alerts per-night, and generating many petabytes of data over the lifetime of the survey. Fast and efficient classification algorithms that can operate in real-time, yet robustly and accurately, are needed for time-critical events where additional resources can be sought for follow-up analyses. In order to handle such data, state-of-the-art deep learning architectures coupled with tools that leverage modern hardware accelerators are essential. We showcase how the use of modern deep compression methods can achieve a $18\times$ reduction in model size, whilst preserving classification performance. We also show that in addition to the deep compression techniques, careful choice of file formats can improve inference latency, and thereby throughput of alerts, on the order of $8\times$ for local processing, and $5\times$ in a live production setting. To test this in a live setting, we deploy this optimised version of the original time-series transformer, t2, into the community alert broking system of FINK on real Zwicky Transient Facility (ZTF) alert data, and compare throughput performance with other science modules that exist in FINK. The results shown herein emphasise the time-series transformer's suitability for real-time classification at LSST scale, and beyond, and introduce deep model compression as a fundamental tool for improving deploy-ability and scalable inference of deep learning models for transient classification.

Finding active galactic nuclei through Fink

Nov 20, 2022

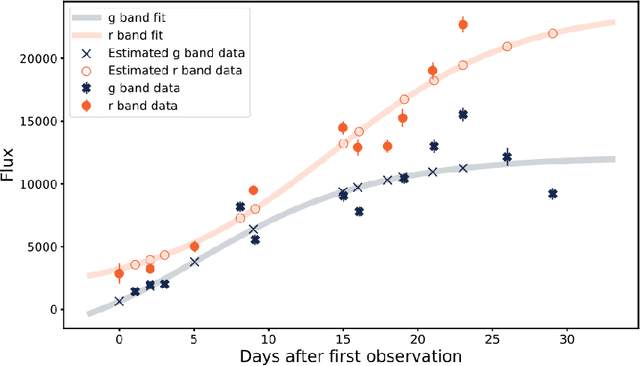

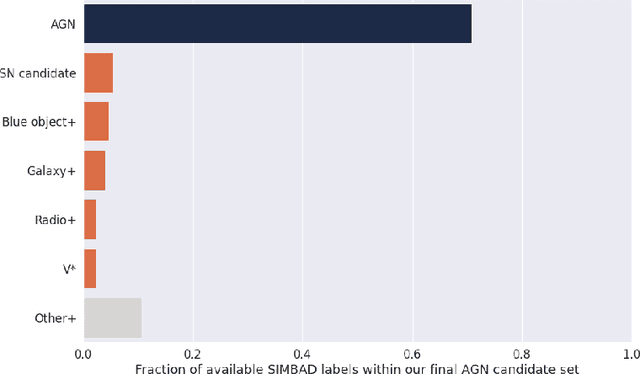

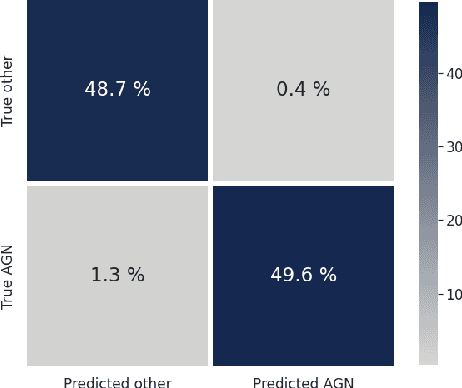

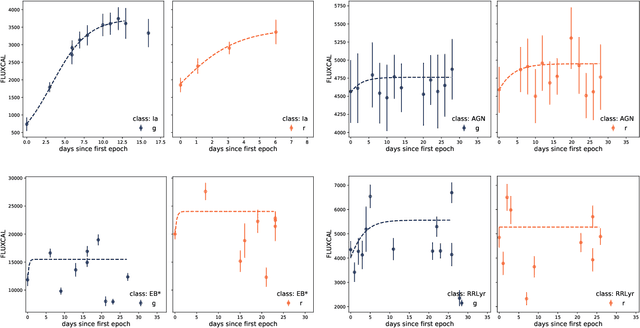

Abstract:We present the Active Galactic Nuclei (AGN) classifier as currently implemented within the Fink broker. Features were built upon summary statistics of available photometric points, as well as color estimation enabled by symbolic regression. The learning stage includes an active learning loop, used to build an optimized training sample from labels reported in astronomical catalogs. Using this method to classify real alerts from the Zwicky Transient Facility (ZTF), we achieved 98.0% accuracy, 93.8% precision and 88.5% recall. We also describe the modifications necessary to enable processing data from the upcoming Vera C. Rubin Observatory Large Survey of Space and Time (LSST), and apply them to the training sample of the Extended LSST Astronomical Time-series Classification Challenge (ELAsTiCC). Results show that our designed feature space enables high performances of traditional machine learning algorithms in this binary classification task.

Fink: early supernovae Ia classification using active learning

Nov 22, 2021

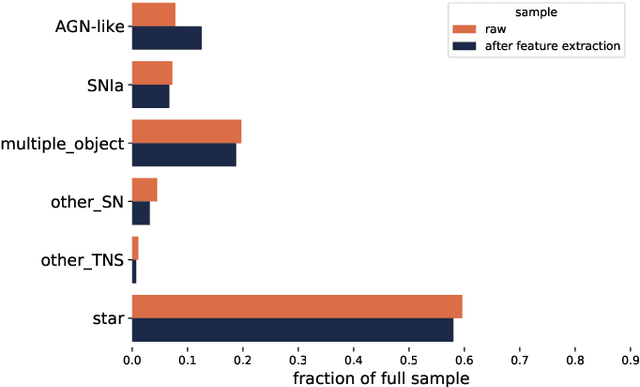

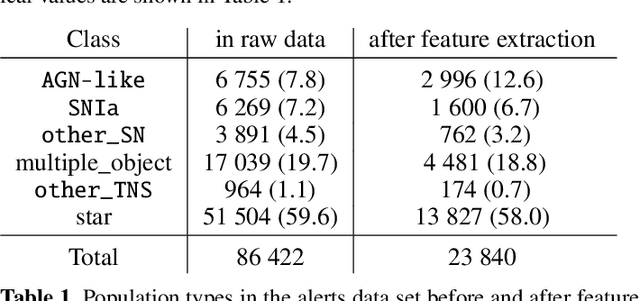

Abstract:We describe how the Fink broker early supernova Ia classifier optimizes its ML classifications by employing an active learning (AL) strategy. We demonstrate the feasibility of implementation of such strategies in the current Zwicky Transient Facility (ZTF) public alert data stream. We compare the performance of two AL strategies: uncertainty sampling and random sampling. Our pipeline consists of 3 stages: feature extraction, classification and learning strategy. Starting from an initial sample of 10 alerts (5 SN Ia and 5 non-Ia), we let the algorithm identify which alert should be added to the training sample. The system is allowed to evolve through 300 iterations. Our data set consists of 23 840 alerts from the ZTF with confirmed classification via cross-match with SIMBAD database and the Transient name server (TNS), 1 600 of which were SNe Ia (1 021 unique objects). The data configuration, after the learning cycle was completed, consists of 310 alerts for training and 23 530 for testing. Averaging over 100 realizations, the classifier achieved 89% purity and 54% efficiency. From 01/November/2020 to 31/October/2021 Fink has applied its early supernova Ia module to the ZTF stream and communicated promising SN Ia candidates to the TNS. From the 535 spectroscopically classified Fink candidates, 459 (86%) were proven to be SNe Ia. Our results confirm the effectiveness of active learning strategies for guiding the construction of optimal training samples for astronomical classifiers. It demonstrates in real data that the performance of learning algorithms can be highly improved without the need of extra computational resources or overwhelmingly large training samples. This is, to our knowledge, the first application of AL to real alerts data.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge