Julian Strohmayer

DATTA: Domain-Adversarial Test-Time Adaptation for Cross-Domain WiFi-Based Human Activity Recognition

Nov 20, 2024

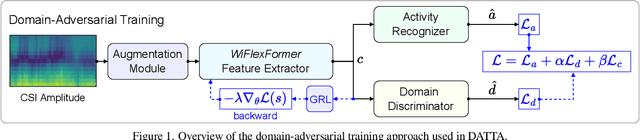

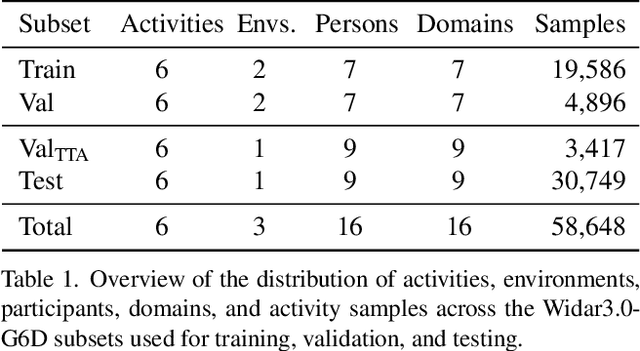

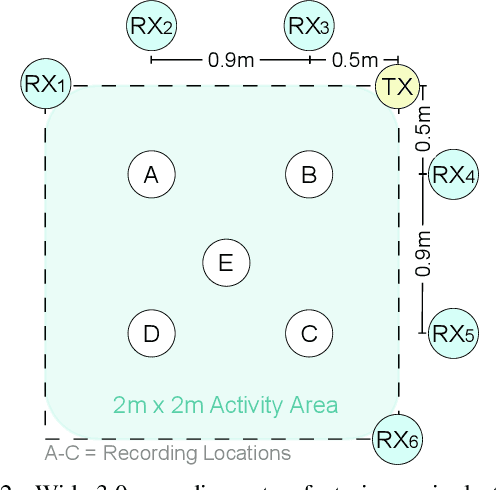

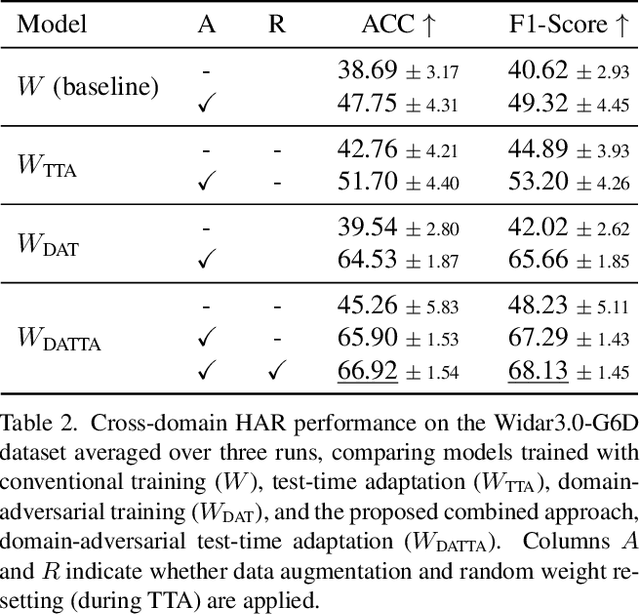

Abstract: Cross-domain generalization is an open problem in WiFi-based sensing due to variations in environments, devices, and subjects, causing domain shifts in channel state information. To address this, we propose Domain-Adversarial Test-Time Adaptation (DATTA), a novel framework combining domain-adversarial training (DAT), test-time adaptation (TTA), and weight resetting to facilitate adaptation to unseen target domains and to prevent catastrophic forgetting. DATTA is integrated into a lightweight, flexible architecture optimized for speed. We conduct a comprehensive evaluation of DATTA, including an ablation study on all key components using publicly available data, and verify its suitability for real-time applications such as human activity recognition. When combining a SotA video-based variant of TTA with WiFi-based DAT and comparing it to DATTA, our method achieves an 8.1% higher F1-Score. The PyTorch implementation of DATTA is publicly available at: https://github.com/StrohmayerJ/DATTA.
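Domain-adversarial training is commonly built around a gradient reversal layer: features pass through unchanged in the forward pass, while the gradient coming back from the domain classifier is negated and scaled before it reaches the feature extractor. The following is a minimal, framework-free sketch of that idea only. The class name `GradientReversal`, the scaling factor `lam`, and the example values are assumptions for illustration and are not taken from the DATTA repository, which may implement this differently (for example as a custom PyTorch autograd function).

```python
class GradientReversal:
    """Identity in the forward pass; negates and scales gradients in the backward pass.

    Hypothetical helper for illustration, not the DATTA implementation.
    """

    def __init__(self, lam=1.0):
        # lam controls how strongly the domain loss pushes the features
        # toward domain invariance (assumed hyperparameter).
        self.lam = lam

    def forward(self, features):
        # Features are passed to the domain classifier unchanged.
        return list(features)

    def backward(self, grad_output):
        # The gradient from the domain classifier is flipped in sign, so the
        # feature extractor is updated to make domains harder to tell apart.
        return [-self.lam * g for g in grad_output]


grl = GradientReversal(lam=0.5)

features = [0.2, -1.0, 3.0]
out = grl.forward(features)

# Made-up gradient of the domain loss with respect to the features.
grad_from_domain_classifier = [1.0, -2.0, 0.5]
grad_to_feature_extractor = grl.backward(grad_from_domain_classifier)
```

In this sketch the activity classifier's gradient would reach the feature extractor unmodified, and only the domain branch goes through the reversal.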

WiFlexFormer: Efficient WiFi-Based Person-Centric Sensing

Nov 06, 2024

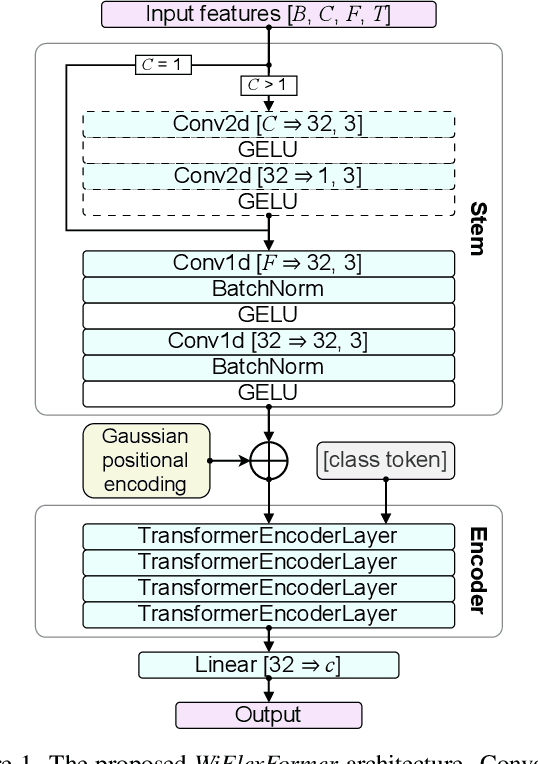

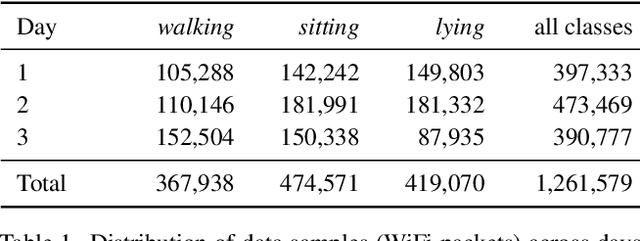

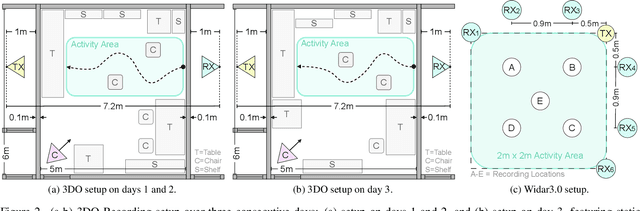

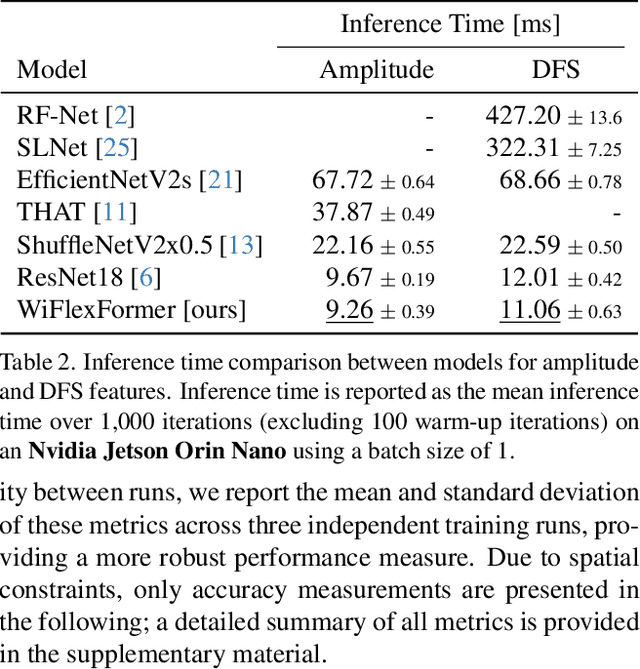

Abstract: We propose WiFlexFormer, a highly efficient Transformer-based architecture designed for WiFi Channel State Information (CSI)-based person-centric sensing. We benchmark WiFlexFormer against state-of-the-art vision and specialized architectures for processing radio frequency data and demonstrate that it achieves comparable Human Activity Recognition (HAR) performance while offering a significantly lower parameter count and faster inference times. With an inference time of just 10 ms on an Nvidia Jetson Orin Nano, WiFlexFormer is optimized for real-time inference. Additionally, its low parameter count contributes to improved cross-domain generalization, where it often outperforms larger models. Our comprehensive evaluation shows that WiFlexFormer is a potential solution for efficient, scalable WiFi-based sensing applications. The PyTorch implementation of WiFlexFormer is publicly available at: https://github.com/StrohmayerJ/WiFlexFormer.

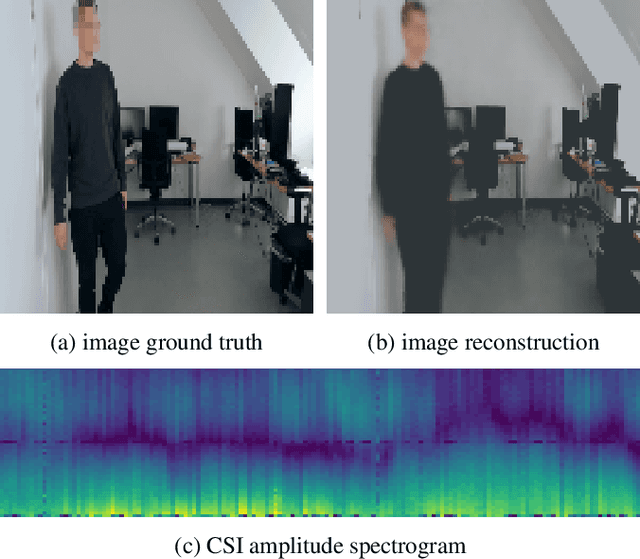

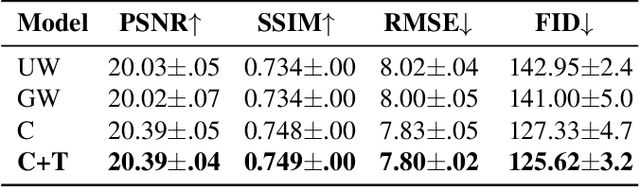

Through-Wall Imaging based on WiFi Channel State Information

Jan 30, 2024

Abstract: This work presents a seminal approach for synthesizing images from WiFi Channel State Information (CSI) in through-wall scenarios. Leveraging the strengths of WiFi, such as cost-effectiveness, illumination invariance, and wall-penetrating capabilities, our approach enables visual monitoring of indoor environments beyond room boundaries and without the need for cameras. More generally, it improves the interpretability of WiFi CSI by unlocking the option to perform image-based downstream tasks, e.g., visual activity recognition. In order to achieve this crossmodal translation from WiFi CSI to images, we rely on a multimodal Variational Autoencoder (VAE) adapted to our problem specifics. We extensively evaluate our proposed methodology through an ablation study on architecture configuration and a quantitative/qualitative assessment of reconstructed images. Our results demonstrate the viability of our method and highlight its potential for practical applications.

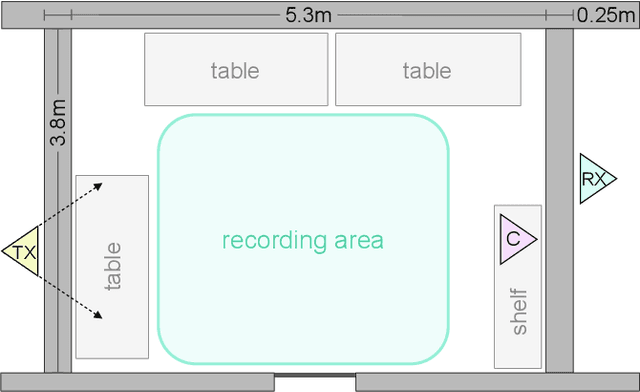

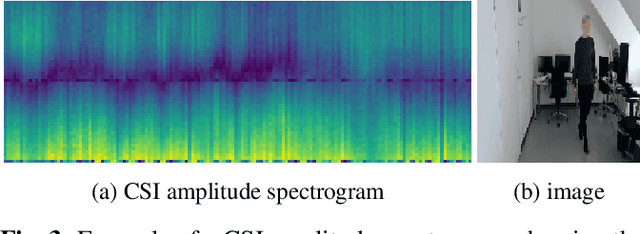

Directional Antenna Systems for Long-Range Through-Wall Human Activity Recognition

Jan 01, 2024

Abstract: WiFi Channel State Information (CSI)-based human activity recognition (HAR) enables contactless, long-range sensing in spatially constrained environments while preserving visual privacy. However, despite the presence of numerous WiFi-enabled devices around us, few expose CSI to users, resulting in a lack of sensing hardware options. Variants of the Espressif ESP32 have emerged as potential low-cost and easy-to-deploy solutions for WiFi CSI-based HAR. In this work, four ESP32-S3-based 2.4 GHz directional antenna systems are evaluated for their ability to facilitate long-range through-wall HAR. Two promising systems are proposed, one of which combines the ESP32-S3 with a directional biquad antenna. This combination represents, to the best of our knowledge, the first demonstration of such a system in WiFi-based HAR. The second system relies on the built-in printed inverted-F antenna (PIFA) of the ESP32-S3 and achieves directionality through a plane reflector. In a comprehensive evaluation of line-of-sight (LOS) and non-line-of-sight (NLOS) HAR performance, both systems are deployed in an office environment spanning a distance of 18 meters across five rooms. In this experimental setup, the Wallhack1.8k dataset, comprising 1806 CSI amplitude spectrograms of human activities, is collected and made publicly available. Based on Wallhack1.8k, we train activity recognition models using the EfficientNetV2 architecture to assess system performance in LOS and NLOS scenarios. For the core NLOS activity recognition problem, the biquad antenna and PIFA-based systems achieve accuracies of 92.0$\pm$3.5 and 86.8$\pm$4.7, respectively, demonstrating the feasibility of long-range through-wall HAR with the proposed systems.

Data Augmentation Techniques for Cross-Domain WiFi CSI-based Human Activity Recognition

Jan 01, 2024

Abstract: The recognition of human activities based on WiFi Channel State Information (CSI) enables contactless and visual privacy-preserving sensing in indoor environments. However, poor model generalization, due to varying environmental conditions and sensing hardware, is a well-known problem in this space. To address this issue, in this work, data augmentation techniques commonly used in image-based learning are applied to WiFi CSI to investigate their effects on model generalization performance in cross-scenario and cross-system settings. In particular, we focus on the generalization between line-of-sight (LOS) and non-line-of-sight (NLOS) through-wall scenarios, as well as on the generalization between different antenna systems, which remains under-explored. We collect and make publicly available a dataset of CSI amplitude spectrograms of human activities. Utilizing this data, an ablation study is conducted in which activity recognition models based on the EfficientNetV2 architecture are trained, allowing us to assess the effects of each augmentation on model generalization performance. The gathered results show that specific combinations of simple data augmentation techniques applied to CSI amplitude data can significantly improve cross-scenario and cross-system generalization.
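To give a rough sense of what image-style augmentation of a CSI amplitude spectrogram can look like, here is a small standard-library sketch. The abstract does not name the augmentations that were evaluated, so the two shown here (a circular shift along the time axis and a global amplitude scaling) are illustrative assumptions, as are the function names, the parameter ranges, and the layout of one row per subcarrier and one column per time step.

```python
import random


def time_shift(spec, shift):
    """Circularly shift every row (assumed: one subcarrier) along the time axis."""
    if not spec or not spec[0]:
        return [list(row) for row in spec]
    k = shift % len(spec[0])
    if k == 0:
        return [list(row) for row in spec]
    # Move the last k time steps to the front of each row.
    return [row[-k:] + row[:-k] for row in spec]


def amplitude_scale(spec, factor):
    """Multiply all amplitudes by a single factor."""
    return [[value * factor for value in row] for row in spec]


def augment(spec, rng, max_shift=2, scale_range=(0.9, 1.1)):
    """Apply a random shift and a random scaling (both ranges are assumed values)."""
    shift = rng.randint(-max_shift, max_shift)
    factor = rng.uniform(*scale_range)
    return amplitude_scale(time_shift(spec, shift), factor)


# Toy spectrogram: 2 subcarriers x 4 time steps.
spectrogram = [[1.0, 2.0, 3.0, 4.0],
               [5.0, 6.0, 7.0, 8.0]]

shifted = time_shift(spectrogram, 1)
scaled = amplitude_scale(spectrogram, 2.0)
augmented = augment(spectrogram, random.Random(0))
```

Both transforms keep the spectrogram shape, so the augmented samples could be fed to the same model as the originals.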
