Juhyun Kim

The Division of Physics, Mathematics and Astronomy, Caltech

Two-argument activation functions learn soft XOR operations like cortical neurons

Oct 15, 2021

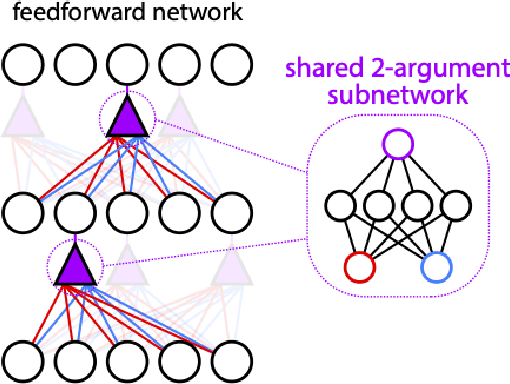

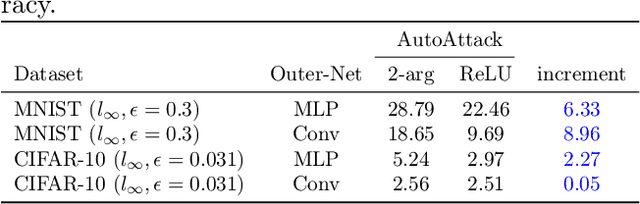

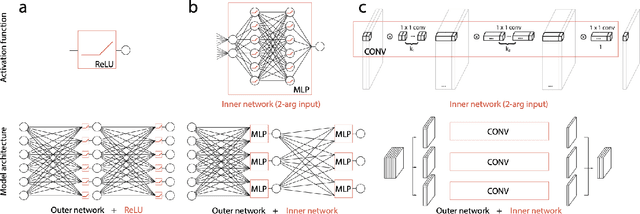

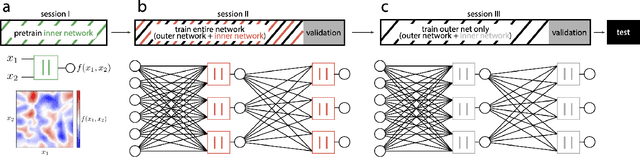

Abstract: Neurons in the brain are complex machines with distinct functional compartments that interact nonlinearly. In contrast, neurons in artificial neural networks abstract away this complexity, typically down to a scalar activation function of a weighted sum of inputs. Here we emulate more biologically realistic neurons by learning canonical activation functions with two input arguments, analogous to basal and apical dendrites. We use a network-in-network architecture where each neuron is modeled as a multilayer perceptron with two inputs and a single output. This inner perceptron is shared by all units in the outer network. Remarkably, the resultant nonlinearities often produce soft XOR functions, consistent with recent experimental observations about interactions between inputs in human cortical neurons. When hyperparameters are optimized, networks with these nonlinearities learn faster and perform better than conventional ReLU nonlinearities with matched parameter counts, and they are more robust to natural and adversarial perturbations.
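The network-in-network idea described in this abstract can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `InnerMLP` and `outer_layer`, the two-hidden-unit size, and the idea that each outer unit forms two separate weighted sums of its input (standing in for "basal" and "apical" arguments) are all hypothetical choices made here. The inner weights are hand-picked rather than learned, so that g(a, b) = ReLU(a − b) + ReLU(b − a) = |a − b|, which follows the XOR pattern on binary inputs; a learned nonlinearity would at best approximate such a shape softly.

```python
from typing import List, Sequence, Tuple


def relu(x: float) -> float:
    return max(0.0, x)


class InnerMLP:
    """Hypothetical shared inner perceptron g(a, b): two inputs, one ReLU hidden layer, one output."""

    def __init__(
        self,
        w_hidden: Sequence[Tuple[float, float]],
        b_hidden: Sequence[float],
        w_out: Sequence[float],
        b_out: float,
    ) -> None:
        self.w_hidden = list(w_hidden)  # one (weight_a, weight_b) pair per hidden unit
        self.b_hidden = list(b_hidden)
        self.w_out = list(w_out)
        self.b_out = b_out

    def __call__(self, a: float, b: float) -> float:
        hidden = [relu(wa * a + wb * b + bias) for (wa, wb), bias in zip(self.w_hidden, self.b_hidden)]
        return sum(w * h for w, h in zip(self.w_out, hidden)) + self.b_out


def outer_layer(
    x: Sequence[float],
    w_basal: Sequence[Sequence[float]],
    w_apical: Sequence[Sequence[float]],
    g: InnerMLP,
) -> List[float]:
    """Each outer unit forms two weighted sums of x (assumed 'basal' and 'apical' arguments)
    and passes both through the same shared nonlinearity g."""
    outputs = []
    for row_a, row_b in zip(w_basal, w_apical):
        a = sum(w * xi for w, xi in zip(row_a, x))
        b = sum(w * xi for w, xi in zip(row_b, x))
        outputs.append(g(a, b))
    return outputs


# Hand-picked (not learned) weights: g(a, b) = relu(a - b) + relu(b - a) = |a - b|.
xor_like = InnerMLP(
    w_hidden=[(1.0, -1.0), (-1.0, 1.0)],
    b_hidden=[0.0, 0.0],
    w_out=[1.0, 1.0],
    b_out=0.0,
)

for a, b in [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]:
    print(a, b, xor_like(a, b))
```

On the four binary input pairs the printed outputs follow the XOR pattern 0, 1, 1, 0. Because every outer unit reuses the same `g`, the inner perceptron contributes a fixed number of parameters no matter how wide the outer network is, which is what makes a matched-parameter comparison against ReLU networks possible.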

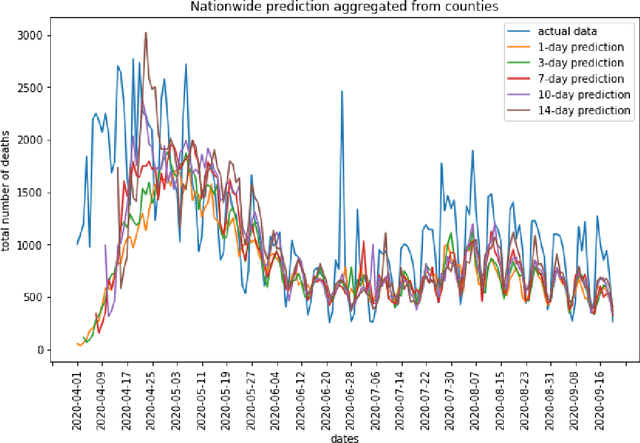

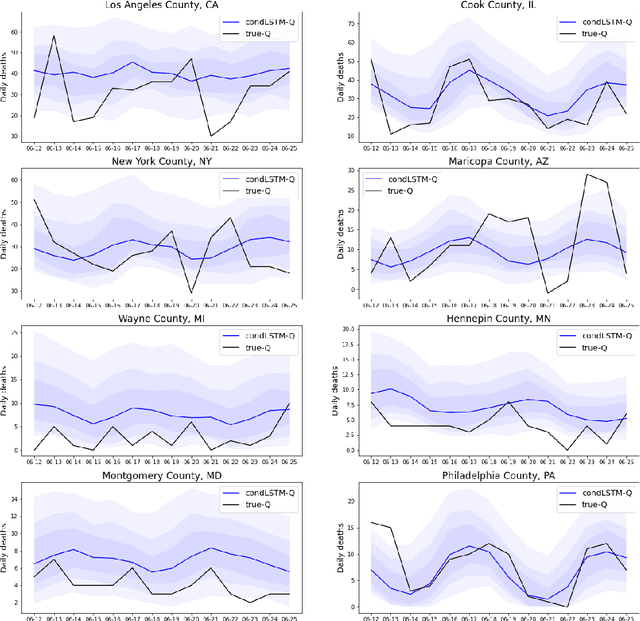

condLSTM-Q: A novel deep learning model for predicting Covid-19 mortality in fine geographical Scale

Nov 23, 2020

Abstract: Predictive models that focus on different spatial and temporal scales help governments and healthcare systems combat the COVID-19 pandemic. Here we present the conditional Long Short-Term Memory networks with Quantile output (condLSTM-Q), a well-performing model for making quantile predictions of COVID-19 death tolls at the county level over a two-week forecast window. This fine geographical scale is a rare but useful feature among publicly available predictive models, and it would especially help state-level officials coordinate resources within their states. The quantile predictions from condLSTM-Q convey the distribution of the predicted death tolls, allowing better evaluation of possible severity trajectories. Given the scalability and generalizability of neural network models, this model could incorporate additional data sources with ease and could be extended in a straightforward way to generate other useful predictions, such as new cases or hospitalizations.
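Quantile outputs like those described above are commonly trained with the pinball (quantile) loss. The abstract does not say which loss condLSTM-Q uses, so the sketch below is an assumption that illustrates the general technique rather than the paper's method; the function names, quantile levels, and forecast numbers are hypothetical.

```python
from typing import Sequence


def pinball_loss(y_true: float, y_pred: float, q: float) -> float:
    """Quantile (pinball) loss at level q in (0, 1).

    Under-prediction (y_true > y_pred) costs q per unit of error;
    over-prediction costs (1 - q) per unit.
    """
    if not 0.0 < q < 1.0:
        raise ValueError("quantile level q must lie strictly between 0 and 1")
    diff = y_true - y_pred
    return max(q * diff, (q - 1.0) * diff)


def mean_quantile_loss(y_true: float, preds: Sequence[float], quantiles: Sequence[float]) -> float:
    """Average pinball loss over a set of quantile predictions for one target value."""
    if len(preds) != len(quantiles):
        raise ValueError("need one prediction per quantile level")
    return sum(pinball_loss(y_true, p, q) for p, q in zip(preds, quantiles)) / len(quantiles)


# Hypothetical example: three quantile forecasts of one county's two-week death toll.
quantiles = [0.1, 0.5, 0.9]
preds = [4.0, 7.0, 12.0]
print(mean_quantile_loss(10.0, preds, quantiles))
```

The asymmetry is the point of the design: at q = 0.9 an under-prediction costs nine times as much as an equal over-prediction, so minimizing this loss pushes that output toward the upper tail of the distribution, and a set of such outputs together describes its spread.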