Juhung Park

DIFFnet: Diffusion parameter mapping network generalized for input diffusion gradient schemes and b-values

Feb 04, 2021

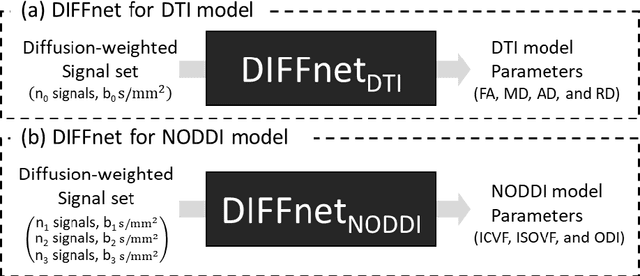

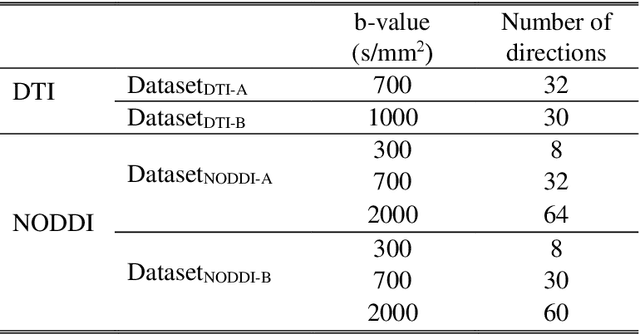

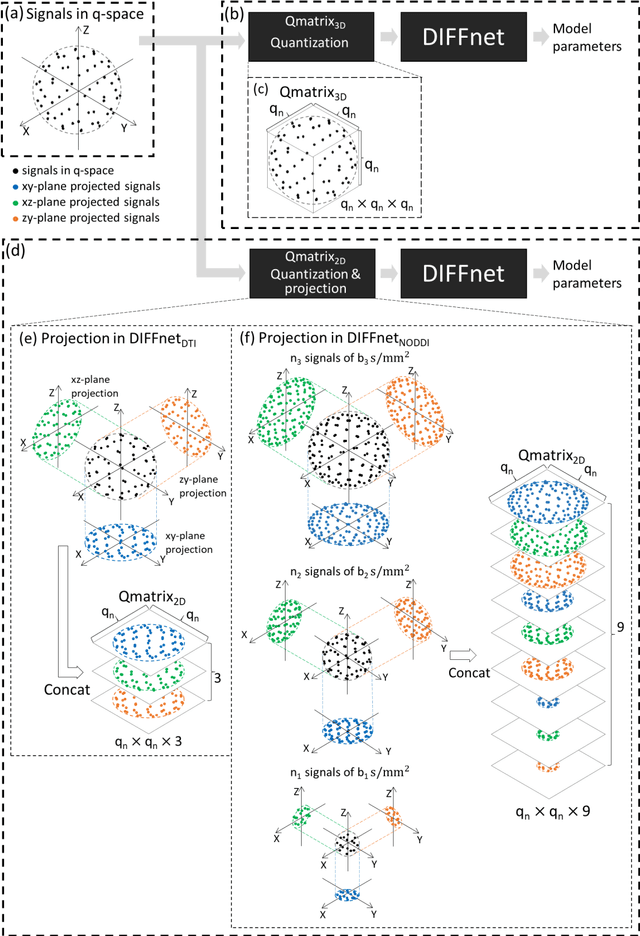

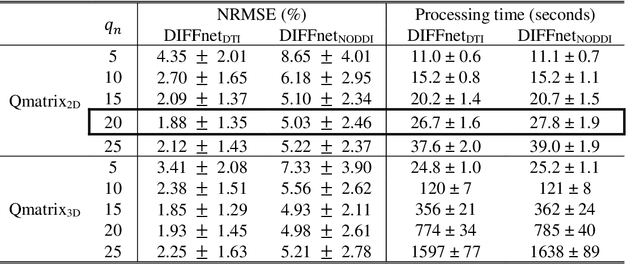

Abstract: In MRI, deep neural networks have been proposed to reconstruct diffusion model parameters. However, the inputs of these networks were designed for a specific diffusion gradient scheme (i.e., the number and directions of the diffusion gradients) and a specific b-value, both of which had to match the training data. In this study, a new deep neural network, referred to as DIFFnet, is developed to function as a generalized reconstruction tool for diffusion-weighted signals acquired with various gradient schemes and b-values. For generalization, diffusion signals are normalized in q-space and then projected and quantized, producing a matrix (Qmatrix) that serves as the input to the network. To demonstrate the validity of this approach, DIFFnet is evaluated for diffusion tensor imaging (DIFFnetDTI) and for neurite orientation dispersion and density imaging (DIFFnetNODDI). For each model, two datasets with different gradient schemes and b-values are tested. The results demonstrate accurate reconstruction of the diffusion parameters at substantially reduced processing time: processing is approximately 8.7 times faster than conventional methods in DTI and approximately 2240 times faster in NODDI, with a mean normalized root-mean-square error (NRMSE) of less than 4% in DTI and less than 8% in NODDI. The generalization capability of the networks is further validated using reduced numbers of diffusion signals from the datasets. Unlike previously proposed deep neural networks, DIFFnet does not require a specific gradient scheme or b-value for its input. As a result, it can be adopted as an online reconstruction tool for a variety of complex diffusion imaging methods.
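
The abstract names the Qmatrix steps (normalize in q-space, project, quantize) without giving details, so the following is only an illustrative sketch, not the authors' implementation. The function name `build_qmatrix`, the grid size, the choice of the xy, yz and xz projection planes, the scaling of q-space coordinates by the square root of b/b_max, and the averaging of samples that fall in the same cell are all assumptions made here for illustration. The point it shows is that acquisitions with different numbers of directions and different b-values map to an input of the same fixed shape.

```python
import numpy as np


def build_qmatrix(signals, s0, bvecs, bvals, grid=8):
    """Hypothetical Qmatrix sketch (assumed details, not the paper's code).

    signals: (n,) diffusion-weighted signals of one voxel
    s0:      non-diffusion-weighted (b = 0) signal of that voxel
    bvecs:   (n, 3) unit gradient directions
    bvals:   (n,) b-values, at least one of them non-zero
    """
    signals = np.asarray(signals, dtype=float)
    bvecs = np.asarray(bvecs, dtype=float)
    bvals = np.asarray(bvals, dtype=float)

    # Normalize the signals by the b = 0 signal.
    norm_sig = signals / s0

    # Assumed q-space normalization: |q| is proportional to sqrt(b), so
    # scaling by sqrt(b / b_max) places every sample inside [-1, 1]^3.
    q = bvecs * np.sqrt(bvals / bvals.max())[:, None]

    # Quantize each coordinate into one of `grid` bins.
    idx = np.clip(np.floor((q + 1.0) / 2.0 * grid).astype(int), 0, grid - 1)

    # Project onto three orthogonal planes (assumed: xy, yz, xz).
    planes = [(0, 1), (1, 2), (0, 2)]
    sums = np.zeros((len(planes), grid, grid))
    counts = np.zeros_like(sums)
    for p, (a, b) in enumerate(planes):
        np.add.at(sums[p], (idx[:, a], idx[:, b]), norm_sig)
        np.add.at(counts[p], (idx[:, a], idx[:, b]), 1.0)

    # Average samples sharing a cell; cells with no sample stay zero.
    return np.divide(sums, counts, out=np.zeros_like(sums), where=counts > 0)


def random_scheme(n, bval, rng):
    """Random unit directions at a single b-value (toy data only)."""
    vecs = rng.normal(size=(n, 3))
    vecs /= np.linalg.norm(vecs, axis=1, keepdims=True)
    return vecs, np.full(n, float(bval))


rng = np.random.default_rng(0)
vecs_a, bvals_a = random_scheme(30, 1000, rng)
vecs_b, bvals_b = random_scheme(64, 700, rng)
q_a = build_qmatrix(rng.uniform(0.2, 1.0, 30), 1.0, vecs_a, bvals_a)
q_b = build_qmatrix(rng.uniform(0.2, 1.0, 64), 1.0, vecs_b, bvals_b)
print(q_a.shape, q_b.shape)
```

Because both toy schemes produce arrays of identical shape, a single network could in principle consume either one, which is the property the abstract attributes to the Qmatrix input.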
