Juan D. Tardos

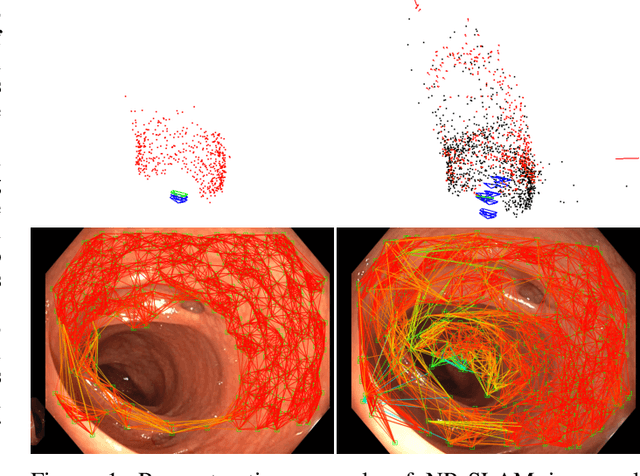

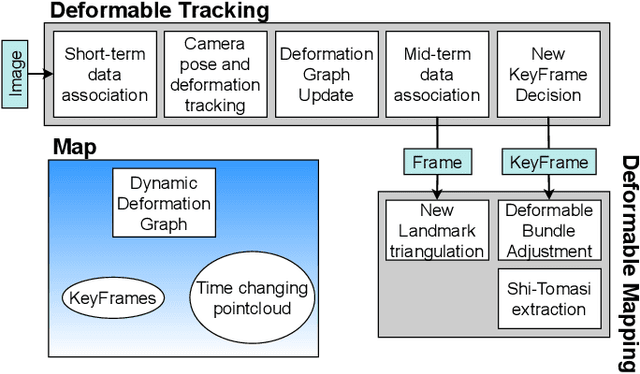

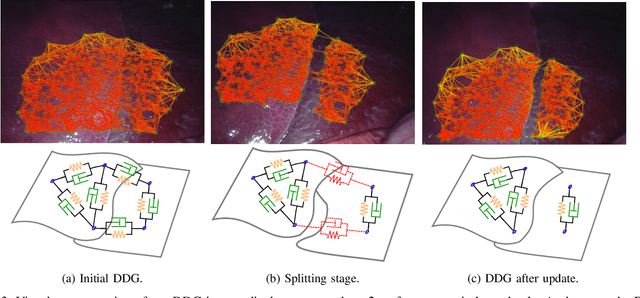

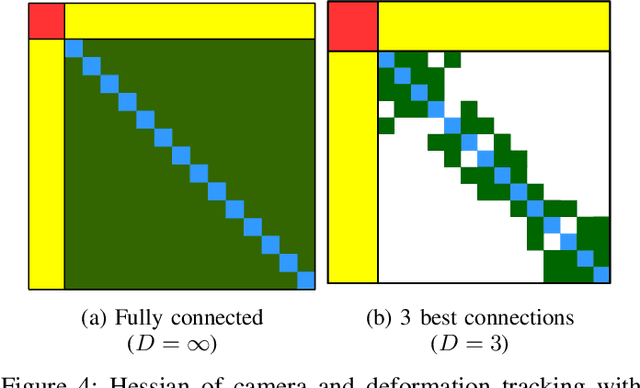

NR-SLAM: Non-Rigid Monocular SLAM

Aug 01, 2023

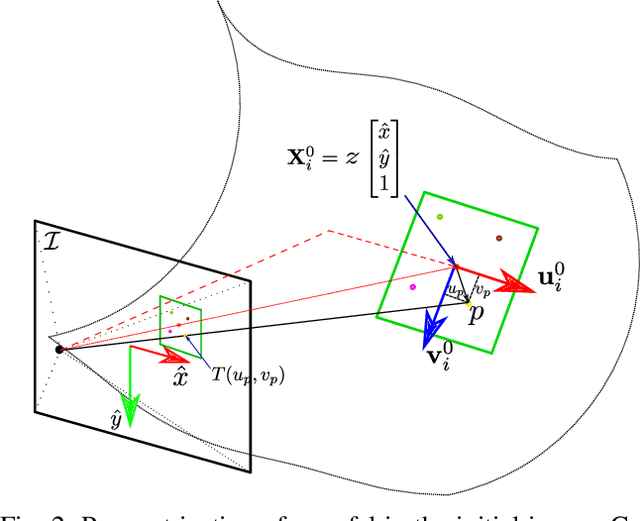

Abstract: In this paper we present NR-SLAM, a novel non-rigid monocular SLAM system founded on the combination of a Dynamic Deformation Graph with a Visco-Elastic deformation model. The former enables our system to represent the dynamics of the deforming environment as the camera explores, while the latter allows us to model general deformations in a simple way. The presented system is able to automatically initialize and extend a map, modeled by a sparse point cloud, in deforming environments; this map is refined with a sliding-window Deformable Bundle Adjustment. The map serves as the basis for estimating camera motion and deformation and enables us to represent arbitrary surface topologies, overcoming the limitations of previous methods. To assess the performance of our system in challenging deforming scenarios, we evaluate it on several representative medical datasets. In our experiments, NR-SLAM outperforms previous deformable SLAM systems, achieving millimeter reconstruction accuracy and bringing automated medical intervention closer. For the benefit of the community, we make the source code public.

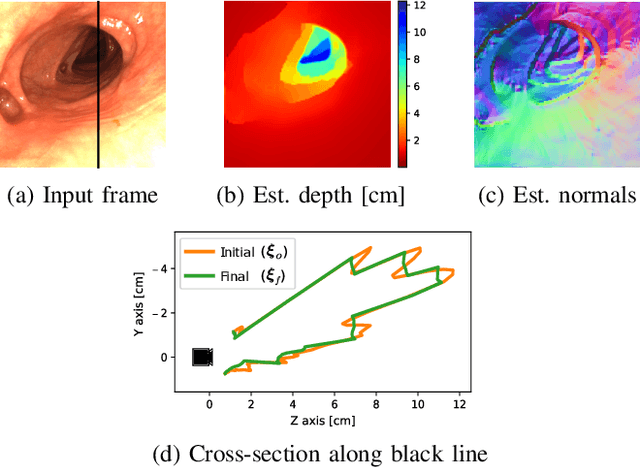

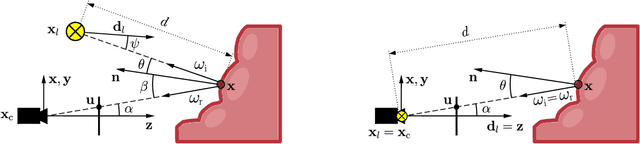

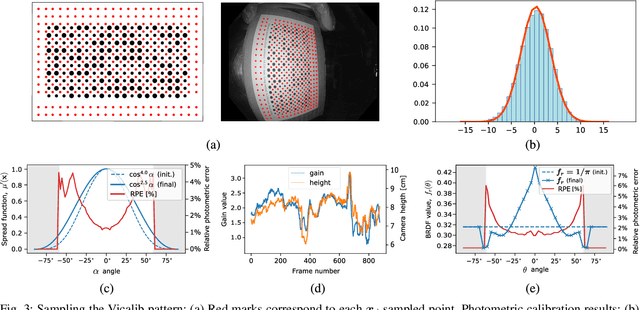

Photometric single-view dense 3D reconstruction in endoscopy

Apr 19, 2022

Abstract: Visual SLAM inside the human body will open the way to computer-assisted navigation in endoscopy. However, due to space limitations, medical endoscopes only provide monocular images, leading to systems lacking true scale. In this paper, we exploit the controlled lighting in colonoscopy to achieve the first in-vivo 3D reconstruction of the human colon using photometric stereo on a calibrated monocular endoscope. Our method works in a real medical environment, providing both a suitable in-place calibration procedure and a depth estimation technique adapted to the colon's tubular geometry. We validate our method on simulated colonoscopies, obtaining a mean error of 7% on depth estimation, which is below 3 mm on average. Our qualitative results on the EndoMapper dataset show that the method is able to correctly estimate the colon shape in real human colonoscopies, paving the way for true-scale monocular SLAM in endoscopy.
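The abstract reports depth accuracy both as a percentage and in millimeters. As a rough illustration of how such figures can be computed, the sketch below evaluates a mean relative error and a mean absolute error over per-pixel depths. The function name `depth_errors` and the sample values are hypothetical, and the paper's actual evaluation protocol may differ.

```python
def depth_errors(estimated_mm, ground_truth_mm):
    """Return (mean relative error, mean absolute error in mm).

    Assumption: both inputs are equal-length sequences of positive depths
    in millimeters for the same pixels. This is an illustrative metric,
    not necessarily the one used in the paper.
    """
    if len(estimated_mm) != len(ground_truth_mm) or not estimated_mm:
        raise ValueError("inputs must be non-empty and of equal length")
    abs_errors = [abs(e - g) for e, g in zip(estimated_mm, ground_truth_mm)]
    rel_errors = [a / g for a, g in zip(abs_errors, ground_truth_mm)]
    n = len(abs_errors)
    return sum(rel_errors) / n, sum(abs_errors) / n


# Hypothetical depths: each estimate is 7% too far.
relative, absolute = depth_errors([10.7, 21.4], [10.0, 20.0])
print(f"mean relative error: {relative:.1%}, mean absolute error: {absolute:.2f} mm")
```

A relative metric is useful here because absolute depth errors naturally grow with distance from the endoscope tip.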

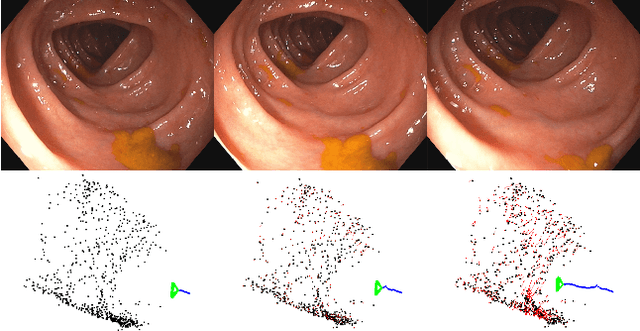

Tracking monocular camera pose and deformation for SLAM inside the human body

Apr 18, 2022

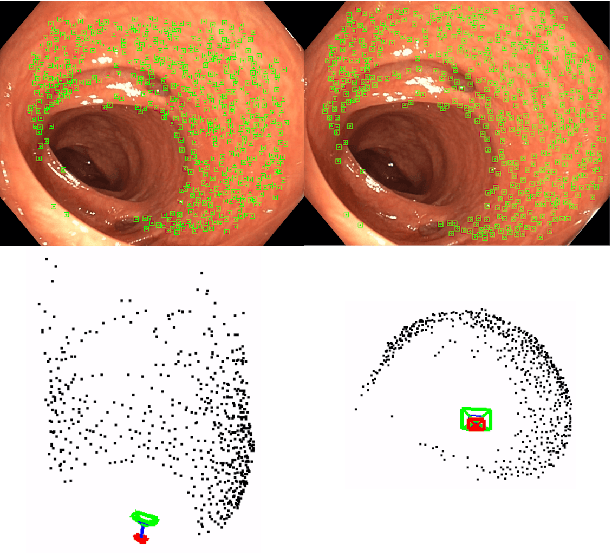

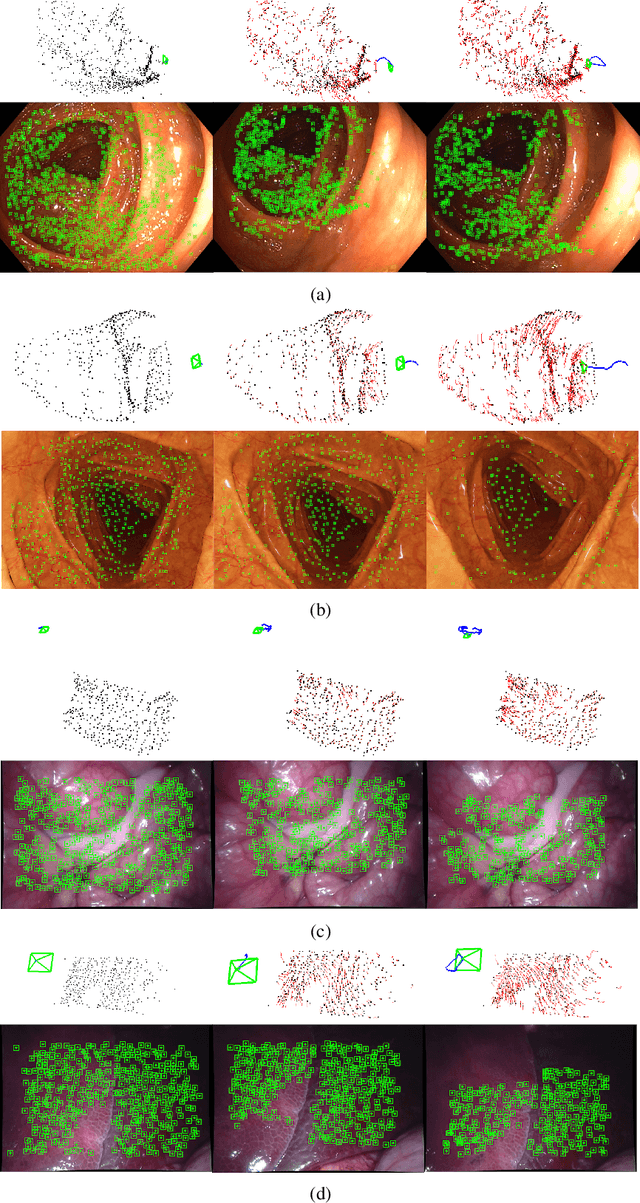

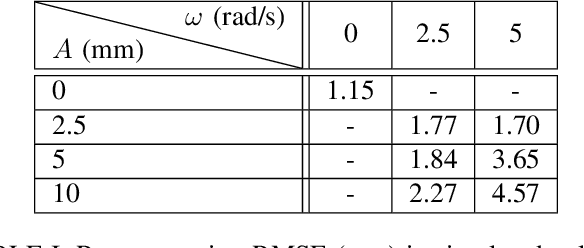

Abstract: Monocular SLAM in deformable scenes will open the way to multiple medical applications like computer-assisted navigation in endoscopy, automatic drug delivery or autonomous robotic surgery. In this paper we propose a novel method to simultaneously track the camera pose and the 3D scene deformation, without any assumption about environment topology or shape. The method uses an illumination-invariant photometric method to track image features and estimates camera motion and deformation by combining reprojection error with spatial and temporal regularization of deformations. Our results in simulated colonoscopies show the method's accuracy and robustness in complex scenes under increasing levels of deformation. Our qualitative results in human colonoscopies from the EndoMapper dataset show that the method is able to successfully cope with the challenges of real endoscopies: deformations, low texture and strong illumination changes. We also compare with previous tracking methods in simpler scenarios from the Hamlyn dataset, where we obtain competitive performance without needing any topological assumption.
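To make the structure of such an objective concrete, here is a minimal sketch of a cost that sums a reprojection term with spatial and temporal regularizers on per-point deformations. Everything in it is an assumption for illustration: the pinhole intrinsics, the quadratic penalties, the weights `lambda_spatial` and `lambda_temporal`, and the neighbor list `edges` are hypothetical, and the points are taken as already expressed in the camera frame, so the camera pose is omitted. The paper's actual formulation may differ.

```python
from math import fsum


def project(point, focal, cx, cy):
    """Pinhole projection of a 3D point (x, y, z) given in the camera frame."""
    x, y, z = point
    return (focal * x / z + cx, focal * y / z + cy)


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length tuples."""
    return fsum((ai - bi) ** 2 for ai, bi in zip(a, b))


def tracking_cost(rest_points, deformations, prev_deformations, observations,
                  edges, focal=500.0, cx=320.0, cy=240.0,
                  lambda_spatial=1.0, lambda_temporal=1.0):
    """Reprojection error plus spatial and temporal deformation penalties.

    rest_points:       3D points at rest, in the camera frame (assumption)
    deformations:      current per-point 3D displacement
    prev_deformations: per-point displacement at the previous frame
    observations:      tracked 2D image positions, one per point
    edges:             (i, j) index pairs of neighboring points (hypothetical)
    """
    deformed = [tuple(p + d for p, d in zip(pt, df))
                for pt, df in zip(rest_points, deformations)]
    # Data term: deformed points should reproject onto their tracked features.
    reprojection = fsum(sq_dist(project(p, focal, cx, cy), obs)
                        for p, obs in zip(deformed, observations))
    # Spatial term: neighboring points should deform similarly.
    spatial = fsum(sq_dist(deformations[i], deformations[j]) for i, j in edges)
    # Temporal term: deformations should change slowly between frames.
    temporal = fsum(sq_dist(d, dp)
                    for d, dp in zip(deformations, prev_deformations))
    return reprojection + lambda_spatial * spatial + lambda_temporal * temporal
```

In a tracker, a nonlinear least-squares solver would minimize a cost of this kind over the camera pose and the deformations. The regularizers matter because a single monocular view cannot, on its own, separate camera motion from scene deformation.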

Direct and Sparse Deformable Tracking

Sep 15, 2021

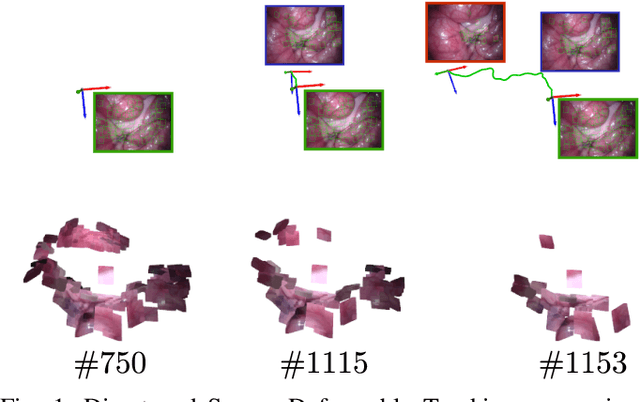

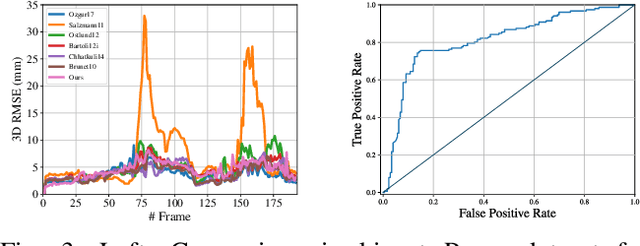

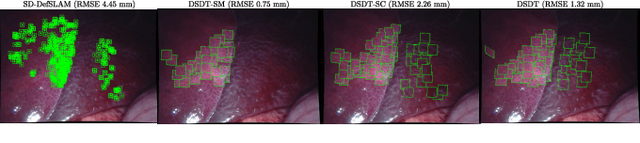

Abstract: Deformable Monocular SLAM algorithms recover the localization of a camera in an unknown deformable environment. Current approaches use template-based deformable tracking to recover the camera pose and the deformation of the map. These template-based methods use an underlying global deformation model. In this paper, we introduce a novel deformable camera tracking method with a local deformation model for each point. Each map point is defined as a single textured surfel that moves independently of the other map points. Thanks to a direct photometric error cost function, we can track the position and orientation of the surfel without an explicit global deformation model. In our experiments, we validate the proposed system and observe that our local deformation model estimates the targeted deformations of the map more accurately and robustly, both in laboratory-controlled experiments and in in-body scenarios undergoing non-isometric deformations, with changing topology or discontinuities.

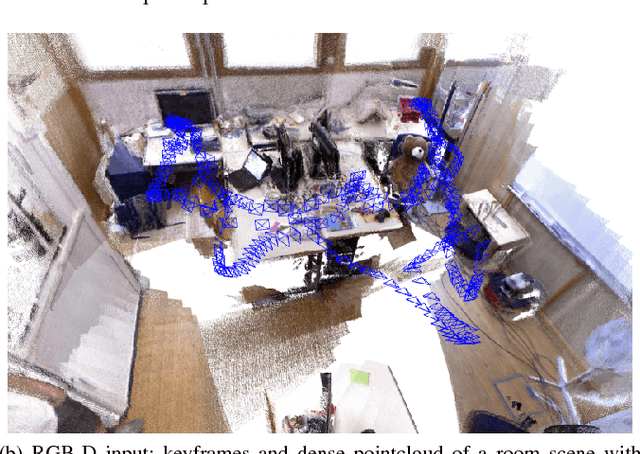

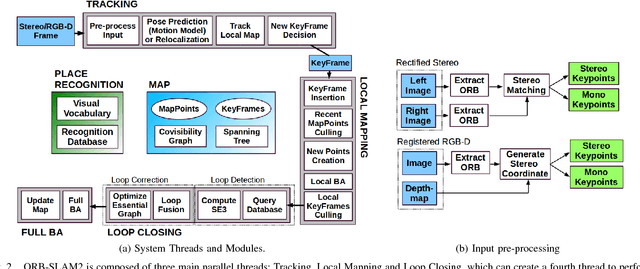

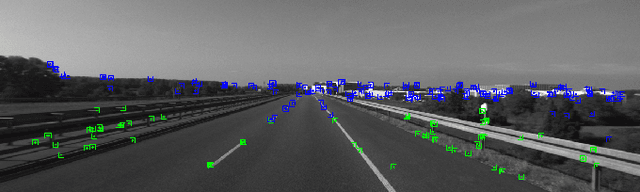

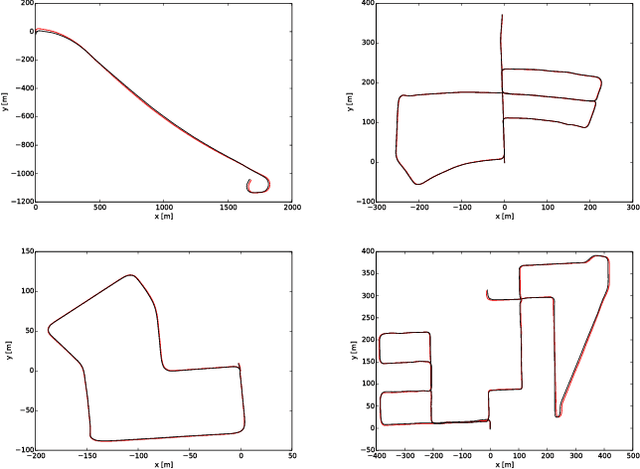

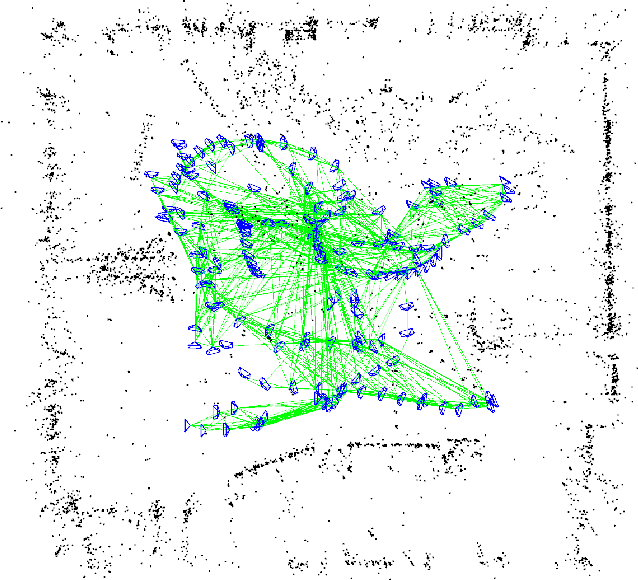

ORB-SLAM2: an Open-Source SLAM System for Monocular, Stereo and RGB-D Cameras

Jun 19, 2017

Abstract: We present ORB-SLAM2, a complete SLAM system for monocular, stereo and RGB-D cameras, including map reuse, loop closing and relocalization capabilities. The system works in real time on standard CPUs in a wide variety of environments, from small hand-held indoor sequences to drones flying in industrial environments and cars driving around a city. Our back-end, based on bundle adjustment with monocular and stereo observations, allows for accurate trajectory estimation with metric scale. Our system includes a lightweight localization mode that leverages visual odometry tracks for unmapped regions and matches to map points that allow for zero-drift localization. The evaluation on 29 popular public sequences shows that our method achieves state-of-the-art accuracy, being in most cases the most accurate SLAM solution. We publish the source code, not only for the benefit of the SLAM community, but with the aim of being an out-of-the-box SLAM solution for researchers in other fields.

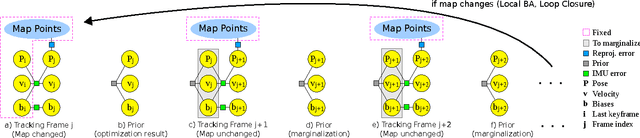

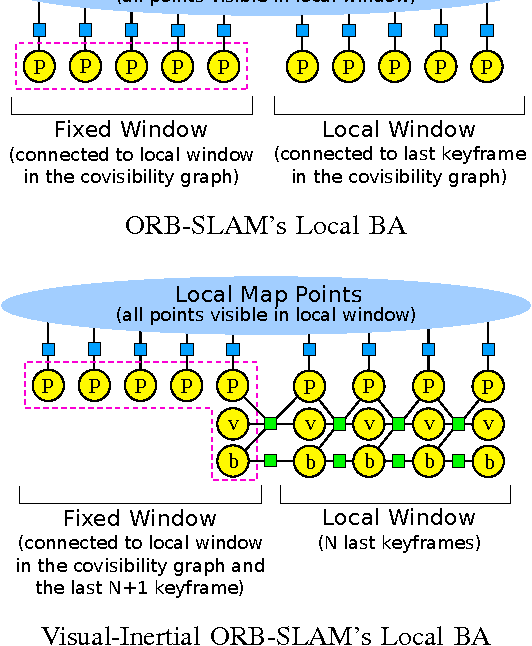

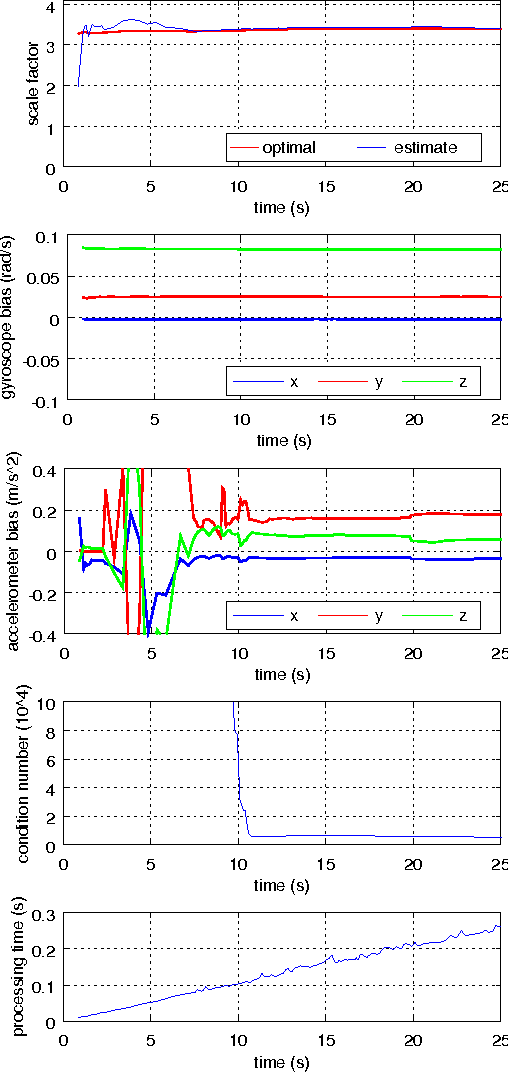

Visual-Inertial Monocular SLAM with Map Reuse

Jan 17, 2017

Abstract: In recent years there have been excellent results in Visual-Inertial Odometry techniques, which aim to compute the incremental motion of the sensor with high accuracy and robustness. However, these approaches lack the capability to close loops, and trajectory estimation accumulates drift even if the sensor is continually revisiting the same place. In this work we present a novel tightly-coupled Visual-Inertial Simultaneous Localization and Mapping system that is able to close loops and reuse its map to achieve zero-drift localization in already mapped areas. While our approach can be applied to any camera configuration, we address here the most general problem of a monocular camera, with its well-known scale ambiguity. We also propose a novel IMU initialization method, which computes the scale, the gravity direction, the velocity, and the gyroscope and accelerometer biases in a few seconds with high accuracy. We test our system on the 11 sequences of a recent micro-aerial vehicle public dataset, achieving a typical scale factor error of 1% and centimeter precision. We compare to the state of the art in visual-inertial odometry on sequences with revisiting, demonstrating the better accuracy of our method due to map reuse and no drift accumulation.
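One common way to quantify a scale factor error is to fit the least-squares scale that best maps the estimated trajectory onto the ground truth and report how far that scale is from 1. The sketch below does this under the assumption that both trajectories are time-synchronized and already rotationally aligned. The function name and sample data are hypothetical, and the abstract does not state how the paper computes its figure.

```python
def scale_factor_error(estimated, ground_truth):
    """Return (scale, |scale - 1|) for two aligned 3D trajectories.

    The scale s minimizes sum ||g_i - s * e_i||^2 over centered positions,
    which gives s = sum(e_i . g_i) / sum(e_i . e_i).
    Assumption: positions correspond one-to-one and share orientation.
    """
    n = len(estimated)
    if n != len(ground_truth) or n < 2:
        raise ValueError("need two trajectories of equal length >= 2")

    def centered(trajectory):
        mean = [sum(p[k] for p in trajectory) / n for k in range(3)]
        return [[p[k] - mean[k] for k in range(3)] for p in trajectory]

    est_c = centered(estimated)
    gt_c = centered(ground_truth)
    numerator = sum(e[k] * g[k] for e, g in zip(est_c, gt_c) for k in range(3))
    denominator = sum(e[k] ** 2 for e in est_c for k in range(3))
    if denominator == 0.0:
        raise ValueError("estimated trajectory has no motion")
    scale = numerator / denominator
    return scale, abs(scale - 1.0)


# Hypothetical example: the estimate is half the true size.
scale, error = scale_factor_error(
    [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (1.0, 0.0, 0.0)],
    [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)],
)
print(scale, error)
```

Centering both trajectories first removes any constant translation offset, so only the relative size of the two paths affects the fitted scale.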

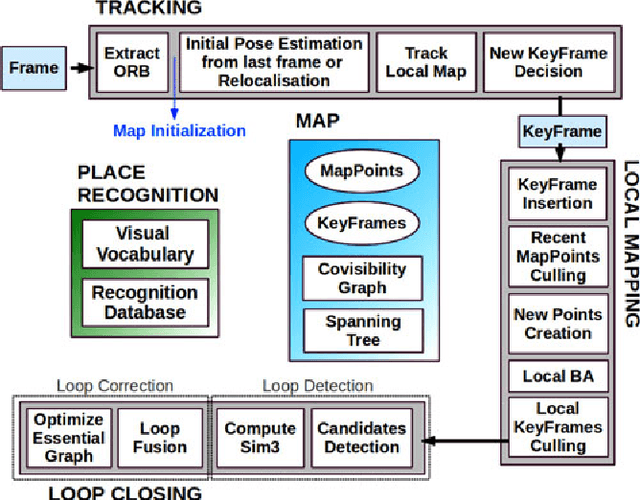

ORB-SLAM: a Versatile and Accurate Monocular SLAM System

Sep 18, 2015

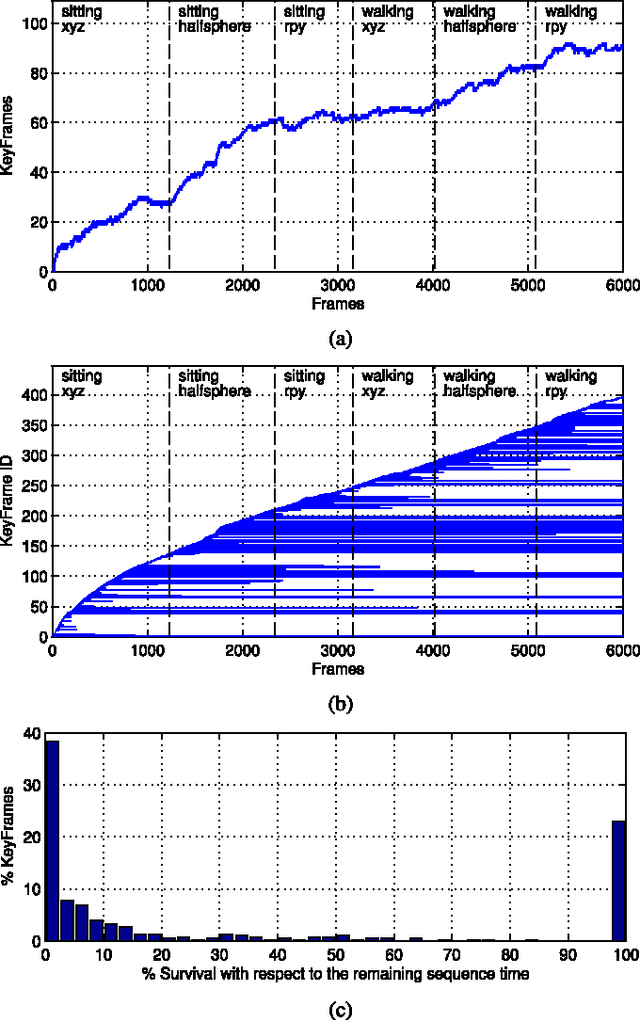

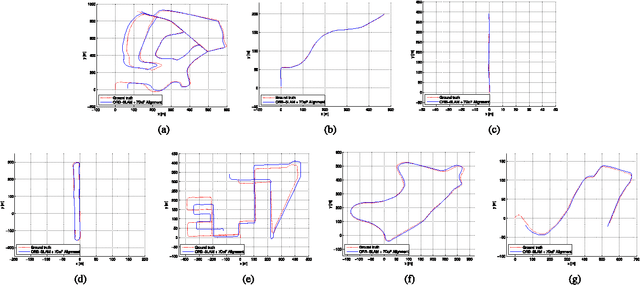

Abstract: This paper presents ORB-SLAM, a feature-based monocular SLAM system that operates in real time, in small and large, indoor and outdoor environments. The system is robust to severe motion clutter, allows wide-baseline loop closing and relocalization, and includes fully automatic initialization. Building on excellent algorithms of recent years, we designed from scratch a novel system that uses the same features for all SLAM tasks: tracking, mapping, relocalization, and loop closing. A survival-of-the-fittest strategy that selects the points and keyframes of the reconstruction leads to excellent robustness and generates a compact and trackable map that only grows if the scene content changes, allowing lifelong operation. We present an exhaustive evaluation on 27 sequences from the most popular datasets. ORB-SLAM achieves unprecedented performance with respect to other state-of-the-art monocular SLAM approaches. For the benefit of the community, we make the source code public.
