Josue Casco-Rodriguez

[Re] The Discriminative Kalman Filter for Bayesian Filtering with Nonlinear and Non-Gaussian Observation Models

Jan 24, 2024

Abstract: Kalman filters provide a straightforward and interpretable means to estimate hidden or latent variables, and have found numerous applications in control, robotics, signal processing, and machine learning. One such application is neural decoding for neuroprostheses. In 2020, Burkhart et al. thoroughly evaluated their new version of the Kalman filter that leverages Bayes' theorem to improve filter performance for highly non-linear or non-Gaussian observation models. This work provides an open-source Python alternative to the authors' MATLAB algorithm. Specifically, we reproduce their most salient results for neuroscientific contexts and further examine the efficacy of their filter using multiple random seeds and previously unused trials from the authors' dataset. All experiments were performed offline on a single computer.

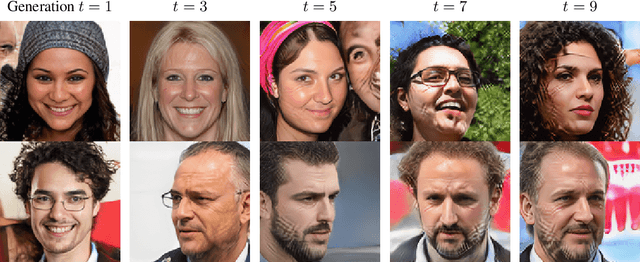

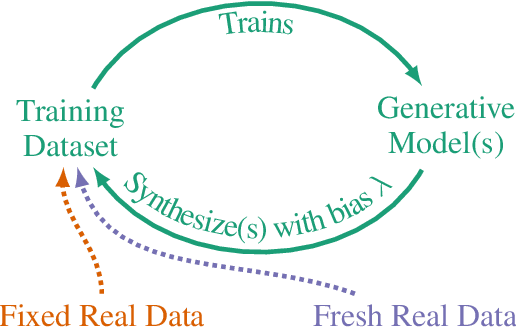

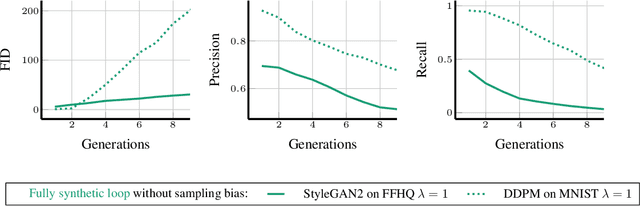

Self-Consuming Generative Models Go MAD

Jul 04, 2023

Abstract: Seismic advances in generative AI algorithms for imagery, text, and other data types have led to the temptation to use synthetic data to train next-generation models. Repeating this process creates an autophagous (self-consuming) loop whose properties are poorly understood. We conduct a thorough analytical and empirical analysis using state-of-the-art generative image models of three families of autophagous loops that differ in how fixed or fresh real training data is available through the generations of training and in whether the samples from previous-generation models have been biased to trade off data quality versus diversity. Our primary conclusion across all scenarios is that without enough fresh real data in each generation of an autophagous loop, future generative models are doomed to have their quality (precision) or diversity (recall) progressively decrease. We term this condition Model Autophagy Disorder (MAD), by analogy to mad cow disease.

A Comprehensive Review of Spiking Neural Networks: Interpretation, Optimization, Efficiency, and Best Practices

Mar 21, 2023

Abstract: Biological neural networks continue to inspire breakthroughs in neural network performance. And yet, one key area of neural computation that has been under-appreciated and under-investigated is biologically plausible, energy-efficient spiking neural networks, whose potential is especially attractive for low-power, mobile, or otherwise hardware-constrained settings. We present a literature review of recent developments in the interpretation, optimization, efficiency, and accuracy of spiking neural networks. Key contributions include identification, discussion, and comparison of cutting-edge methods in spiking neural network optimization, energy efficiency, and evaluation, starting from first principles so as to be accessible to new practitioners.

Rock Guitar Tablature Generation via Natural Language Processing

Jan 12, 2023

Abstract: Deep learning has recently empowered and democratized generative modeling of images and text, with additional concurrent works exploring the possibility of generating more complex forms of data, such as audio. However, the high dimensionality, long-range dependencies, and lack of standardized datasets currently make generative modeling of audio and music very challenging. We propose to model music as a series of discrete notes upon which we can use autoregressive natural language processing techniques for successful generative modeling. While previous works used similar pipelines on data such as sheet music and MIDI, we aim to extend such approaches to the under-studied medium of guitar tablature. Specifically, we develop what is, to our knowledge, the first work that models one specific genre as guitar tablature: heavy rock. Unlike other works in guitar tablature generation, we have a freely available public demo at https://huggingface.co/spaces/josuelmet/Metal_Music_Interpolator
