Joshua L. Pulsipher

Department of Chemical Engineering, University of Waterloo, Waterloo, Canada

A Digital Twin Simulator of a Pastillation Process with Applications to Automatic Control based on Computer Vision

Mar 18, 2025

Abstract: We present a digital-twin simulator for a pastillation process. The simulation framework produces realistic thermal image data of the process that is used to train computer vision-based soft sensors based on convolutional neural networks (CNNs); the soft sensors produce output signals for temperature and product flow rate that enable real-time monitoring and feedback control. Pastillation technologies are high-throughput devices that are used in a broad range of industries; these processes face operational challenges such as real-time identification of clog locations (faults) in the rotating shell and the automatic, real-time adjustment of conveyor belt speed and operating conditions to stabilize output. The proposed simulator is able to capture this behavior and generates realistic data that can be used to benchmark different algorithms for image processing and different control architectures. We present a case study to illustrate the capabilities; the study explores behavior over a range of equipment sizes, clog locations, and clog duration. A feedback controller (tuned using Bayesian optimization) is used to adjust the conveyor belt speed based on the CNN output signal to achieve the desired process outputs.
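The feedback loop described in this abstract can be illustrated with a minimal sketch. It is not the authors' simulator or controller. It assumes a PI control law, hand-picked gains (the paper tunes its controller with Bayesian optimization), and a hypothetical first-order plant in which the soft-sensor reading rises with belt speed. The true plant state stands in for the CNN output signal, and all names and numbers are illustrative.

```python
from dataclasses import dataclass


@dataclass
class PIController:
    """Discrete PI controller with output clamping and simple anti-windup."""
    kp: float
    ki: float
    u_min: float
    u_max: float
    integral: float = 0.0

    def step(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        candidate = self.integral + error * dt
        u = self.kp * error + self.ki * candidate
        clamped = min(max(u, self.u_min), self.u_max)
        if clamped == u:
            # Accumulate the integral only while the actuator is unsaturated.
            self.integral = candidate
        return clamped


def simulate(setpoint=50.0, t_end=200.0, dt=0.1):
    """Run the closed loop and return the final reading and belt speed."""
    # Hypothetical plant (an assumption, not from the paper): the steady-state
    # reading is base + gain * speed, approached with time constant tau.
    tau, base, gain = 5.0, 20.0, 30.0
    # Hand-picked gains; the paper tunes these with Bayesian optimization.
    controller = PIController(kp=0.02, ki=0.02, u_min=0.1, u_max=2.0)
    reading = 35.0  # stand-in for the CNN soft-sensor output
    speed = 0.5
    for _ in range(int(t_end / dt)):
        speed = controller.step(setpoint, reading, dt)
        reading += dt * ((base + gain * speed) - reading) / tau
    return reading, speed


final_reading, final_speed = simulate()
```

In this toy setting the reading settles at the setpoint once the belt speed reaches the value that balances the assumed plant model. Replacing `reading` with the output of a trained image model would give the image-based loop the abstract describes.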

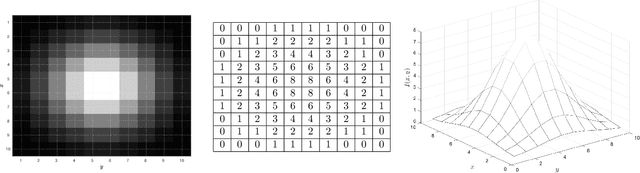

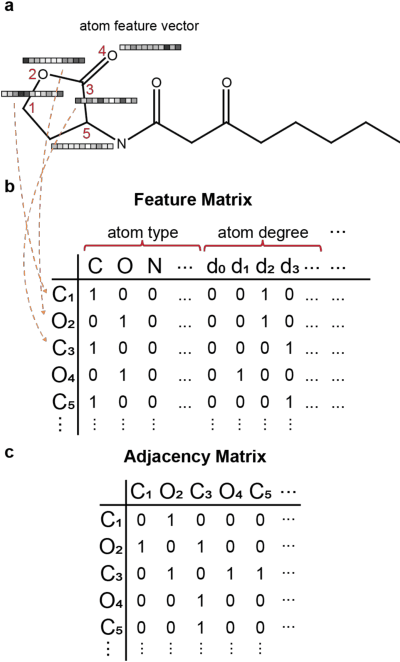

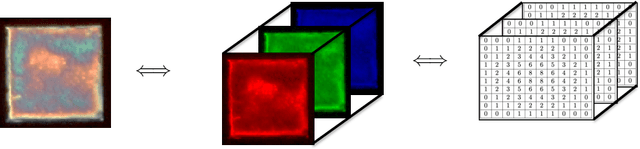

Convolutional Neural Networks: Basic Concepts and Applications in Manufacturing

Oct 14, 2022

Abstract: We discuss basic concepts of convolutional neural networks (CNNs) and outline uses in manufacturing. We begin by discussing how different types of data objects commonly encountered in manufacturing (e.g., time series, images, micrographs, videos, spectra, molecular structures) can be represented in a flexible manner using tensors and graphs. We then discuss how CNNs use convolution operations to extract informative features (e.g., geometric patterns and textures) from such representations to predict emergent properties and phenomena and/or to identify anomalies. We also discuss how CNNs can exploit color as a key source of information, which enables the use of modern computer vision hardware (e.g., infrared, thermal, and hyperspectral cameras). We illustrate the concepts using diverse case studies arising in spectral analysis, molecule design, sensor design, image-based control, and multivariate process monitoring.
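As a small illustration of how a convolution operation extracts a geometric feature, the sketch below slides a fixed 3x3 vertical-edge filter over a tiny grayscale image. The image, the filter values, and the function name `conv2d_valid` are illustrative assumptions. A CNN learns its filter weights from data rather than using a hand-written kernel like this one.

```python
def conv2d_valid(image, kernel):
    """Slide the kernel over the image with no padding (cross-correlation,
    the form of convolution used in CNN layers)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# 5x6 image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]

# Hand-written vertical-edge filter (Prewitt-style).
edge_kernel = [[-1, 0, 1] for _ in range(3)]

feature_map = conv2d_valid(image, edge_kernel)
# Each row of feature_map is [0, 3, 3, 0]: the response is zero in the flat
# regions and positive where the window straddles the dark-to-bright edge.
```

The feature map is large only where the pattern encoded by the filter appears in the image. Stacking many such filters, with learned weights, is what lets a CNN pick up patterns and textures.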

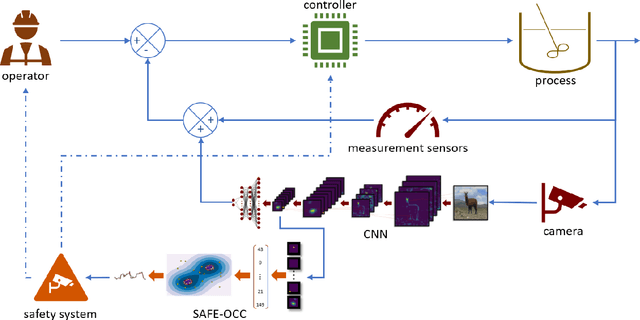

SAFE-OCC: A Novelty Detection Framework for Convolutional Neural Network Sensors and its Application in Process Control

Feb 03, 2022

Abstract: We present a novelty detection framework for Convolutional Neural Network (CNN) sensors that we call Sensor-Activated Feature Extraction One-Class Classification (SAFE-OCC). We show that this framework enables the safe use of computer vision sensors in process control architectures. Emergent control applications use CNN models to map visual data to a state signal that can be interpreted by the controller. Incorporating such sensors introduces a significant system operation vulnerability because CNN sensors can exhibit high prediction errors when exposed to novel (abnormal) visual data. Unfortunately, identifying such novelties in real time is nontrivial. To address this issue, the SAFE-OCC framework leverages the convolutional blocks of the CNN to create an effective feature space to conduct novelty detection using a desired one-class classification technique. This approach engenders a feature space that directly corresponds to that used by the CNN sensor and avoids the need to derive an independent latent space. We demonstrate the effectiveness of SAFE-OCC via simulated control environments.
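The general idea of feature-space novelty detection can be sketched as follows. This is not the SAFE-OCC implementation. It makes two assumptions: hand-written difference filters (`conv_features`) stand in for the trained convolutional blocks of a CNN sensor, and a simple centroid-distance rule (`CentroidOneClass`) stands in for the one-class classifier, which the abstract leaves open. The synthetic images and all names are hypothetical.

```python
import math
import random


def conv_features(image):
    """Stand-in for a sensor's convolutional blocks: mean absolute response
    of a horizontal and a vertical difference filter (hypothetical)."""
    h, w = len(image), len(image[0])
    horiz = [abs(image[i][j + 1] - image[i][j])
             for i in range(h) for j in range(w - 1)]
    vert = [abs(image[i + 1][j] - image[i][j])
            for i in range(h - 1) for j in range(w)]
    return [sum(horiz) / len(horiz), sum(vert) / len(vert)]


class CentroidOneClass:
    """Toy one-class classifier: flag features far from the training centroid."""

    def fit(self, feats, margin=1.5):
        n = len(feats)
        self.center = [sum(f[k] for f in feats) / n
                       for k in range(len(feats[0]))]
        self.radius = margin * max(math.dist(f, self.center) for f in feats)
        return self

    def is_novel(self, feat):
        return math.dist(feat, self.center) > self.radius


def normal_image(rng, size=8):
    """Synthetic 'normal' frame: smooth left-to-right ramp plus small noise."""
    return [[j / (size - 1) + rng.uniform(-0.05, 0.05) for j in range(size)]
            for _ in range(size)]


rng = random.Random(0)
normal_features = [conv_features(normal_image(rng)) for _ in range(50)]
detector = CentroidOneClass().fit(normal_features)

# A checkerboard frame has a texture unlike anything in the training set.
checkerboard = [[float((i + j) % 2) for j in range(8)] for i in range(8)]
novel_flag = detector.is_novel(conv_features(checkerboard))
```

The point of the design is that the detector works in the same feature space as the sensor, so no separate latent representation has to be trained. In a control loop, a raised `novel_flag` could be used to distrust the sensor signal for that frame.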
