Joseph Rynkiewicz

SAMM

Neural Networks for Complex Data

Oct 24, 2012

Abstract: Artificial neural networks are simple and efficient machine learning tools. Originally defined in the traditional setting of simple vector data, neural network models have evolved to address increasingly difficult real-world problems, ranging from time-evolving data to sophisticated data structures such as graphs and functions. This paper summarizes advances on those themes from the last decade, with a focus on results obtained by members of the SAMM team of Université Paris 1.

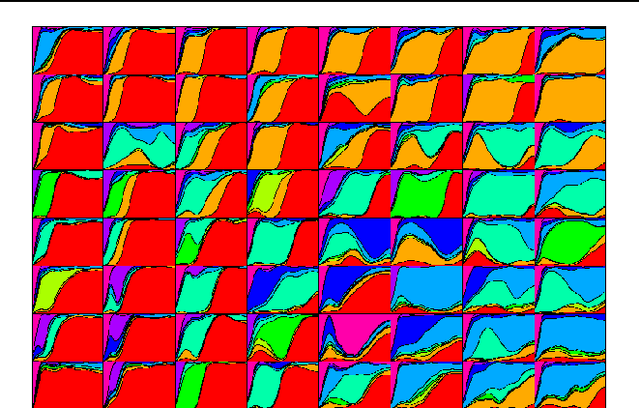

Self Organizing Map algorithm and distortion measure

Feb 21, 2008

Abstract: We study the statistical meaning of minimizing the distortion measure, and the relation between the equilibrium points of the SOM algorithm and the minima of the distortion measure. Assuming that the observations and the map lie in a compact Euclidean space, we prove the strong consistency of the map that almost minimizes the empirical distortion. Moreover, after calculating the derivatives of the theoretical distortion measure, we show that the points minimizing this measure and the equilibria of the Kohonen map do not match in general. We illustrate with a simple example how this occurs.
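As a rough illustration of the quantity discussed in this abstract, the sketch below computes an empirical distortion for a fixed codebook. It assumes one common form of the SOM distortion, namely half the average over observations of the neighborhood-weighted squared distances to all units, with weights taken from the row of the winning unit. The function name `som_distortion` and the `neighborhood` matrix are hypothetical and not taken from the paper, whose exact definition may differ.

```python
import numpy as np

def som_distortion(data, codebook, neighborhood):
    """Empirical distortion of a SOM codebook (assumed form, see lead-in).

    data:         (n, d) observations
    codebook:     (k, d) unit positions in data space
    neighborhood: (k, k) weights; neighborhood[i, j] links unit i to unit j
    """
    data = np.asarray(data, dtype=float)
    codebook = np.asarray(codebook, dtype=float)
    neighborhood = np.asarray(neighborhood, dtype=float)

    # Squared Euclidean distance from every observation to every unit: (n, k)
    d2 = ((data[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)

    # Winning (closest) unit for each observation
    winners = d2.argmin(axis=1)

    # Neighborhood weights seen from each observation's winner: (n, k)
    weights = neighborhood[winners]

    return 0.5 * (weights * d2).sum() / data.shape[0]
```

With an identity neighborhood matrix this reduces to half the usual quantization error, which is a quick sanity check on the weighting.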

Efficient Estimation of Multidimensional Regression Model with Multilayer Perceptron

Feb 21, 2008

Abstract: This work concerns the estimation of multidimensional nonlinear regression models using a multilayer perceptron (MLP). The main problem with such models is that the covariance matrix of the noise must be known to obtain an optimal estimator. However, we show that if we choose as cost function the logarithm of the determinant of the empirical error covariance matrix, we get an asymptotically optimal estimator.
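The cost function named in this abstract can be sketched as follows. This is a minimal illustration, assuming the empirical error covariance is the uncentered average of outer products of the residuals; the function name `logdet_cost` and that normalization are assumptions, not details taken from the paper.

```python
import numpy as np

def logdet_cost(residuals):
    """Log-determinant of the empirical error covariance matrix.

    residuals: (n, d) array whose rows are y_t - f(x_t), the prediction
    errors of the model on each of the n observations.
    """
    residuals = np.asarray(residuals, dtype=float)
    n = residuals.shape[0]

    # Uncentered empirical covariance of the errors: (d, d)
    cov = residuals.T @ residuals / n

    # slogdet is numerically safer than log(det(cov))
    sign, logdet = np.linalg.slogdet(cov)
    if sign <= 0:
        raise ValueError("empirical error covariance is not positive definite")
    return logdet
```

One property visible in this form: applying an invertible linear map A to the outputs shifts the cost by the constant 2 log|det A|, so the minimizer does not depend on the scale or correlation of the output coordinates.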

Testing the number of parameters with multidimensional MLP

Feb 21, 2008

Abstract: This work concerns testing the number of parameters in a one-hidden-layer multilayer perceptron (MLP). For this purpose, we assume that the models are identifiable up to a finite group of transformations on the weights; this is the case, for example, when the number of hidden units is known. In this framework, we show that we get a simple asymptotic distribution if we use the logarithm of the determinant of the empirical error covariance matrix as the cost function.
