Jose Guivant

Registration between Point Cloud Streams and Sequential Bounding Boxes via Gradient Descent

Sep 14, 2024

Abstract: In this paper, we propose an algorithm for registering sequential bounding boxes with point cloud streams. Unlike popular point cloud registration techniques, the alignment of the point cloud and the bounding box can rely on the properties of the bounding box, such as size, shape, and temporal information, which provides substantial support and performance gains. Motivated by this, we propose a new approach to tackle this problem. Specifically, we model the registration process through an overall objective function that includes the final goal and all constraints. We then optimize the function using gradient descent. Our experiments show that the proposed method performs remarkably well, with a 40% improvement in IoU, and demonstrates more robust registration between point cloud streams and sequential bounding boxes.
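The idea of optimizing a registration objective by gradient descent can be illustrated with a minimal sketch. This is not the paper's objective function, which is not given in the abstract. It assumes an axis-aligned box of known size and a hypothetical loss that penalizes the squared distance by which points fall outside the box; the names `outside`, `loss`, `gradient`, and `register_box` are illustrative only.

```python
def outside(p, c, h):
    """Distance by which coordinate p lies outside the interval [c - h, c + h]."""
    return max(abs(p - c) - h, 0.0)


def loss(points, center, half_size):
    """Mean squared outside-distance of all points (assumed objective, not the paper's)."""
    dims = range(len(center))
    total = 0.0
    for p in points:
        total += sum(outside(p[k], center[k], half_size[k]) ** 2 for k in dims)
    return total / len(points)


def gradient(points, center, half_size):
    """Analytic gradient of `loss` with respect to the box center."""
    g = [0.0] * len(center)
    for p in points:
        for k in range(len(center)):
            d = outside(p[k], center[k], half_size[k])
            sign = 1.0 if p[k] > center[k] else -1.0
            # d/dc of (|p - c| - h)^2 is -2 * (|p - c| - h) * sign(p - c) when outside
            g[k] += -2.0 * d * sign
    return [v / len(points) for v in g]


def register_box(points, center0, half_size, lr=0.1, steps=200):
    """Plain gradient descent on the box center; the box size stays fixed."""
    center = list(center0)
    for _ in range(steps):
        g = gradient(points, center, half_size)
        center = [c - lr * gk for c, gk in zip(center, g)]
    return center
```

Calling `register_box(points, previous_center, half_size)` with the previous frame's center as the starting point is one simple way to use temporal information; a fuller objective could add explicit terms for shape or motion smoothness.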

Efficient and accurate object detection with simultaneous classification and tracking

Jul 04, 2020

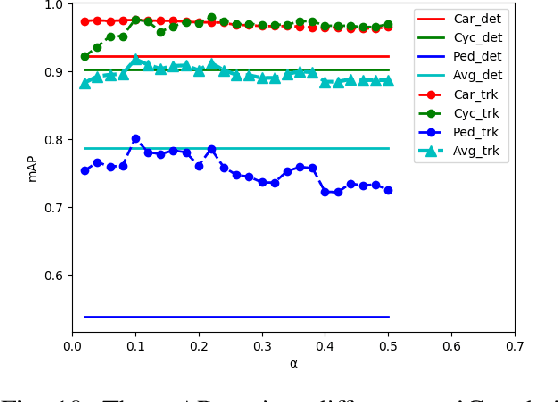

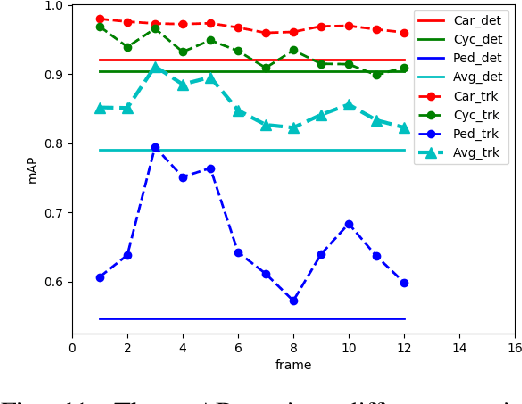

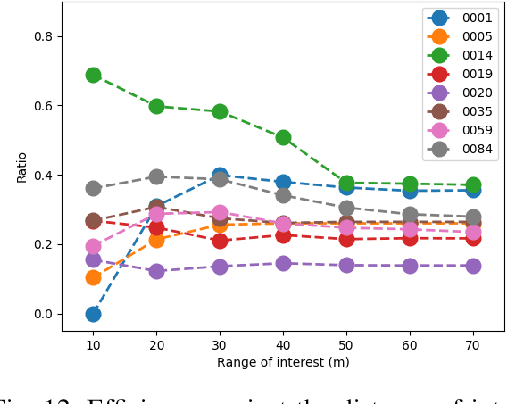

Abstract: Interacting with the environment, for example through object detection and tracking, is a crucial ability of mobile robots. Besides high accuracy, efficiency in terms of processing effort and energy consumption is also desirable. To satisfy both requirements, we propose a detection framework based on simultaneous classification and tracking in the point cloud stream. In this framework, a tracker performs data association across sequences of point clouds, guiding the detector to avoid redundant processing (i.e., classifying already-known objects). For objects whose classification is not sufficiently certain, a fusion model is designed to fuse selected key observations that provide different perspectives across the tracking span. As a result, both the accuracy and the efficiency of detection can be enhanced. This method is particularly suitable for detecting and tracking moving objects, a process that would require expensive computations if solved using conventional procedures. Experiments were conducted on a benchmark dataset, and the results showed that the proposed method outperforms the original tracking-by-detection approaches in both efficiency and accuracy.
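The way a tracker can spare the detector redundant work can be sketched as follows. This is a simplified illustration under stated assumptions, not the framework from the paper: association is a greedy nearest-neighbour match on object centroids, the fusion model is replaced by simply keeping the most confident label seen so far, and `associate`, `process_frame`, `max_dist`, and `min_conf` are hypothetical names.

```python
import math


def associate(tracks, detections, max_dist=1.0):
    """Greedy nearest-neighbour association: detection index -> track id or None."""
    matches, used = {}, set()
    for i, det in enumerate(detections):
        best_id, best_d = None, max_dist
        for tid, track in tracks.items():
            if tid in used:
                continue
            d = math.dist(det, track["pos"])
            if d < best_d:
                best_id, best_d = tid, d
        matches[i] = best_id
        if best_id is not None:
            used.add(best_id)
    return matches


def process_frame(tracks, detections, classify, min_conf=0.8):
    """Update tracks in place; classify only new or still-uncertain objects.

    Returns the number of classifier calls made for this frame.
    """
    calls = 0
    next_id = max(tracks, default=-1) + 1
    for i, tid in associate(tracks, detections).items():
        if tid is None:  # unseen object: start a new track
            tid = next_id
            next_id += 1
            tracks[tid] = {"pos": detections[i], "label": None, "conf": 0.0}
        tracks[tid]["pos"] = detections[i]
        if tracks[tid]["conf"] < min_conf:  # skip objects that are already known
            label, conf = classify(detections[i])
            calls += 1
            if conf > tracks[tid]["conf"]:  # stand-in for the fusion model
                tracks[tid]["label"] = label
                tracks[tid]["conf"] = conf
    return calls
```

Here `classify` is any callable that returns a `(label, confidence)` pair. Once a track's confidence reaches `min_conf`, later frames update only its position, which is where the saving in processing effort comes from.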

Real-time 3D object proposal generation and classification under limited processing resources

Mar 24, 2020

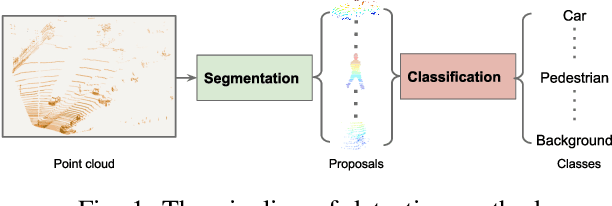

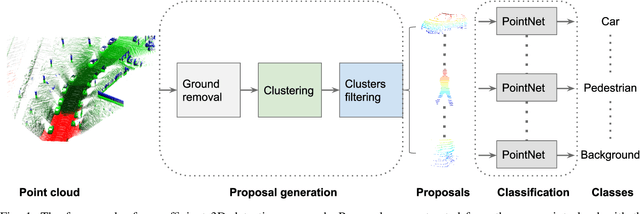

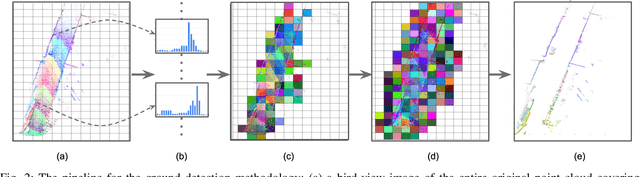

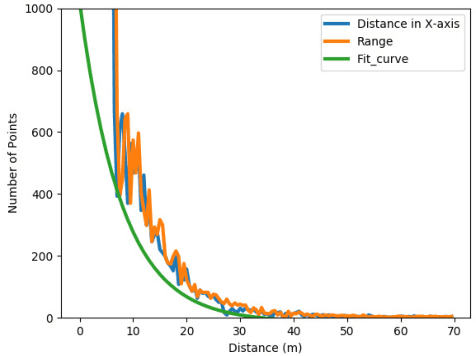

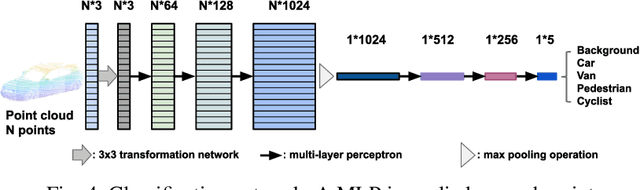

Abstract: The task of detecting 3D objects is important to various robotic applications. Existing deep learning-based detection techniques have achieved impressive performance. However, these techniques require a graphics processing unit (GPU) to run in real time. To achieve real-time 3D object detection with limited computational resources for robots, we propose an efficient detection method consisting of 3D proposal generation and classification. The proposal generation is mainly based on point segmentation, while the proposal classification is performed by a lightweight convolutional neural network (CNN) model. To validate our method, the KITTI datasets are utilized. The experimental results demonstrate the capability of the proposed real-time 3D object detection method on point clouds, with competitive performance in object recall and classification.
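Segmentation-based proposal generation can be illustrated with a small sketch. The abstract does not specify the segmentation method, so this example assumes plain Euclidean clustering followed by an axis-aligned bounding box per cluster; `euclidean_clusters`, `bounding_box`, `generate_proposals`, `radius`, and `min_points` are hypothetical names, and the brute-force neighbour search is for clarity rather than real-time use.

```python
import math


def euclidean_clusters(points, radius=0.5, min_points=3):
    """Group points whose chained neighbour distance is at most `radius`."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        cluster, frontier = [seed], [seed]
        while frontier:
            i = frontier.pop()
            near = [j for j in unvisited
                    if math.dist(points[i], points[j]) <= radius]
            for j in near:
                unvisited.remove(j)
            cluster.extend(near)
            frontier.extend(near)
        if len(cluster) >= min_points:  # drop sparse noise
            clusters.append([points[i] for i in cluster])
    return clusters


def bounding_box(cluster):
    """Axis-aligned box as (min corner, max corner)."""
    dims = range(len(cluster[0]))
    lo = tuple(min(p[k] for p in cluster) for k in dims)
    hi = tuple(max(p[k] for p in cluster) for k in dims)
    return lo, hi


def generate_proposals(points, radius=0.5, min_points=3):
    """One box proposal per sufficiently large cluster."""
    return [bounding_box(c)
            for c in euclidean_clusters(points, radius, min_points)]
```

Each returned box would then be passed to a classifier, such as the lightweight CNN mentioned in the abstract, which is outside the scope of this sketch.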
