Jorge Lobo

The Role of Foundation Models in Neuro-Symbolic Learning and Reasoning

Feb 02, 2024

Abstract: Neuro-Symbolic AI (NeSy) holds promise to ensure the safe deployment of AI systems, as interpretable symbolic techniques provide formal behaviour guarantees. The challenge is how to effectively integrate neural and symbolic computation, to enable learning and reasoning from raw data. Existing pipelines that train the neural and symbolic components sequentially require extensive labelling, whereas end-to-end approaches are limited in terms of scalability, due to the combinatorial explosion in the symbol grounding problem. In this paper, we leverage the implicit knowledge within foundation models to enhance the performance in NeSy tasks, whilst reducing the amount of data labelling and manual engineering. We introduce a new architecture, called NeSyGPT, which fine-tunes a vision-language foundation model to extract symbolic features from raw data, before learning a highly expressive answer set program to solve a downstream task. Our comprehensive evaluation demonstrates that NeSyGPT has superior accuracy over various baselines, and can scale to complex NeSy tasks. Finally, we highlight the effective use of a large language model to generate the programmatic interface between the neural and symbolic components, significantly reducing the amount of manual engineering required.

Inductive Learning of Complex Knowledge from Raw Data

May 25, 2022

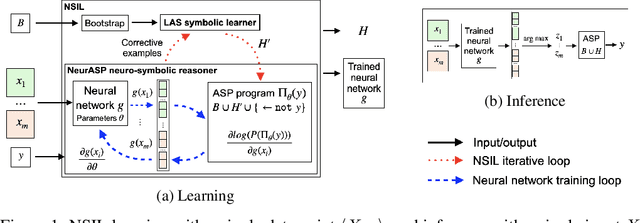

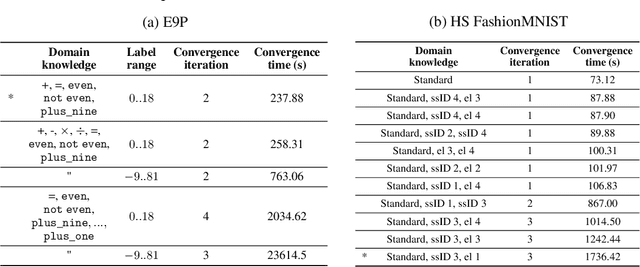

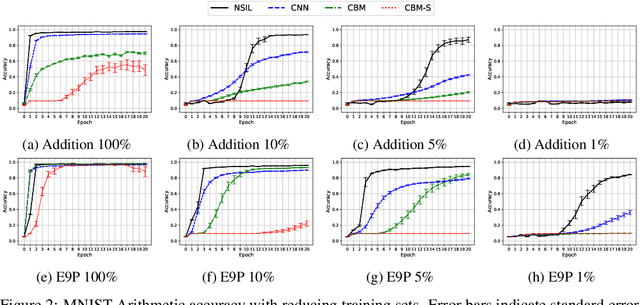

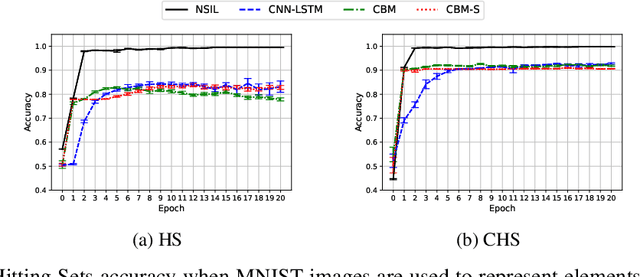

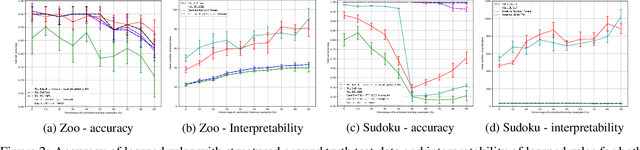

Abstract: One of the ultimate goals of Artificial Intelligence is to learn generalised and human-interpretable knowledge from raw data. Neuro-symbolic reasoning approaches partly tackle this problem by improving the training of a neural network using a manually engineered symbolic knowledge base. In the case where symbolic knowledge is learned from raw data, this knowledge lacks the expressivity required to solve complex problems. In this paper, we introduce Neuro-Symbolic Inductive Learner (NSIL), an approach that trains a neural network to extract latent concepts from raw data, whilst learning symbolic knowledge that solves complex problems, defined in terms of these latent concepts. The novelty of our approach is a method for biasing a symbolic learner to learn improved knowledge, based on the in-training performance of both neural and symbolic components. We evaluate NSIL on two problem domains that require learning knowledge with different levels of complexity, and demonstrate that NSIL learns knowledge that is not possible to learn with other neuro-symbolic systems, whilst outperforming baseline models in terms of accuracy and data efficiency.

FF-NSL: Feed-Forward Neural-Symbolic Learner

Jul 02, 2021

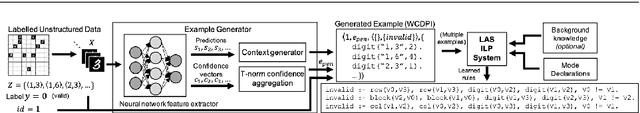

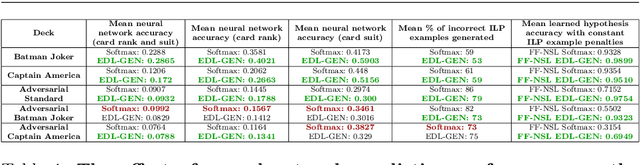

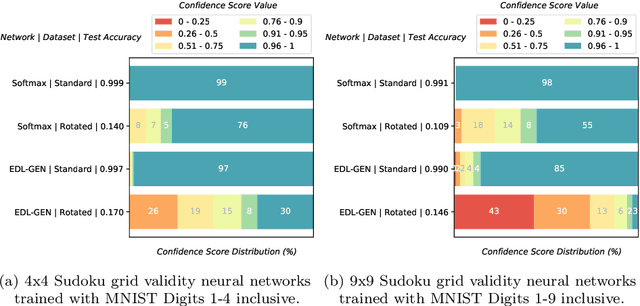

Abstract: Inductive Logic Programming (ILP) aims to learn generalised, interpretable hypotheses in a data-efficient manner. However, current ILP systems require training examples to be specified in a structured logical form. To address this problem, this paper proposes a neural-symbolic learning framework, called Feed-Forward Neural-Symbolic Learner (FF-NSL), that integrates state-of-the-art ILP systems, based on the Answer Set semantics, with Neural Networks (NNs), in order to learn interpretable hypotheses from labelled unstructured data. To demonstrate the generality and robustness of FF-NSL, we use two datasets subject to distributional shifts, for which pre-trained NNs may give incorrect predictions with high confidence. Experimental results show that FF-NSL outperforms tree-based and neural-based approaches by learning more accurate and interpretable hypotheses with fewer examples.

NSL: Hybrid Interpretable Learning From Noisy Raw Data

Dec 09, 2020

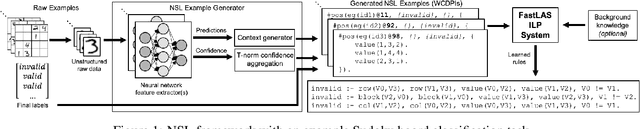

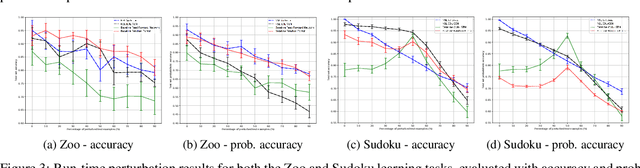

Abstract: Inductive Logic Programming (ILP) systems learn generalised, interpretable rules in a data-efficient manner utilising existing background knowledge. However, current ILP systems require training examples to be specified in a structured logical format. Neural networks learn from unstructured data, although their learned models may be difficult to interpret and are vulnerable to data perturbations at run-time. This paper introduces a hybrid neural-symbolic learning framework, called NSL, that learns interpretable rules from labelled unstructured data. NSL combines pre-trained neural networks for feature extraction with FastLAS, a state-of-the-art ILP system for rule learning under the answer set semantics. Features extracted by the neural components define the structured context of labelled examples and the confidence of the neural predictions determines the level of noise of the examples. Using the scoring function of FastLAS, NSL searches for short, interpretable rules that generalise over such noisy examples. We evaluate our framework on propositional and first-order classification tasks using the MNIST dataset as raw data. Specifically, we demonstrate that NSL is able to learn robust rules from perturbed MNIST data and achieve comparable or superior accuracy when compared to neural network and random forest baselines whilst being more general and interpretable.

Knowledge And The Action Description Language A

Apr 24, 2004

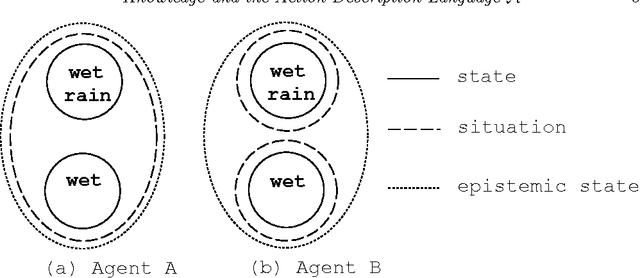

Abstract: We introduce Ak, an extension of the action description language A (Gelfond and Lifschitz, 1993) to handle actions which affect knowledge. We use sensing actions to increase an agent's knowledge of the world and non-deterministic actions to remove knowledge. We include complex plans involving conditionals and loops in our query language for hypothetical reasoning. We also present a translation of Ak domain descriptions into epistemic logic programs.

* Appeared in Theory and Practice of Logic Programming, vol. 1, no. 2, 2001
