FF-NSL: Feed-Forward Neural-Symbolic Learner

Jul 02, 2021

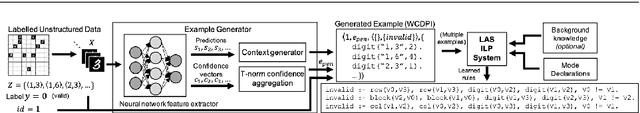

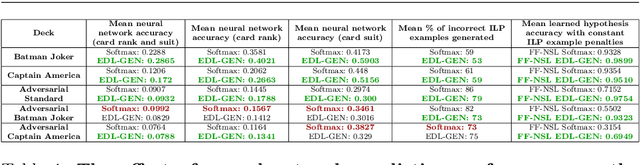

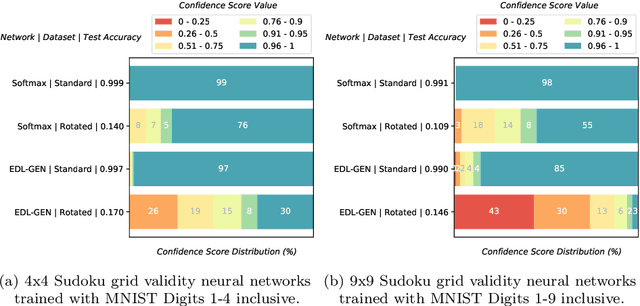

Inductive Logic Programming (ILP) aims to learn generalised, interpretable hypotheses in a data-efficient manner. However, current ILP systems require training examples to be specified in a structured logical form. To address this problem, this paper proposes a neural-symbolic learning framework, called Feed-Forward Neural-Symbolic Learner (FF-NSL), that integrates state-of-the-art ILP systems, based on the Answer Set semantics, with Neural Networks (NNs), in order to learn interpretable hypotheses from labelled unstructured data. To demonstrate the generality and robustness of FF-NSL, we use two datasets subject to distributional shifts, for which pre-trained NNs may give incorrect predictions with high confidence. Experimental results show that FF-NSL outperforms tree-based and neural-based approaches by learning more accurate and interpretable hypotheses with fewer examples.
