Jonas Falkner

Supervised Permutation Invariant Networks for Solving the CVRP with Bounded Fleet Size

Jan 05, 2022

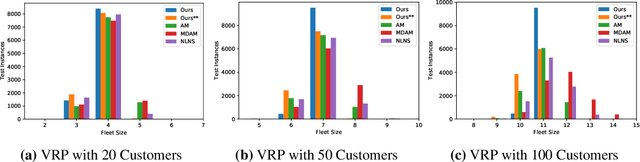

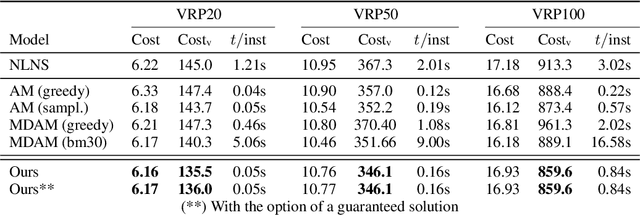

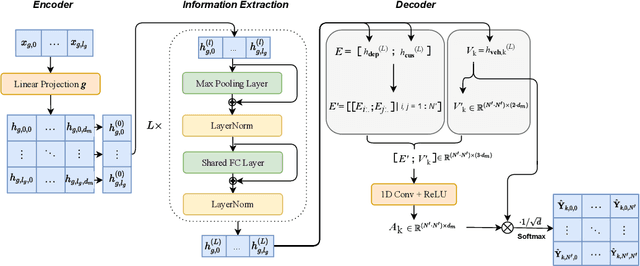

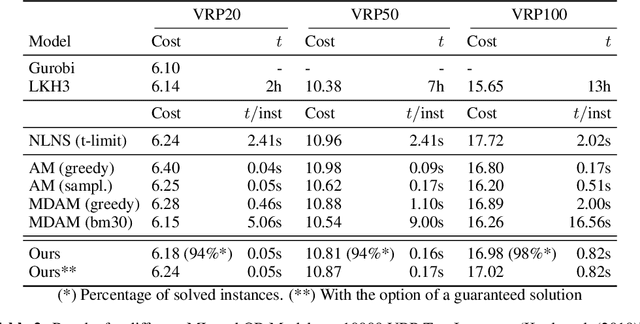

Abstract:Learning to solve combinatorial optimization problems, such as the vehicle routing problem, offers great computational advantages over classical operations research solvers and heuristics. The recently developed deep reinforcement learning approaches either improve an initially given solution iteratively or sequentially construct a set of individual tours. However, most of the existing learning-based approaches are not able to work for a fixed number of vehicles and thus bypass the complex assignment problem of the customers onto an apriori given number of available vehicles. On the other hand, this makes them less suitable for real applications, as many logistic service providers rely on solutions provided for a specific bounded fleet size and cannot accommodate short term changes to the number of vehicles. In contrast we propose a powerful supervised deep learning framework that constructs a complete tour plan from scratch while respecting an apriori fixed number of available vehicles. In combination with an efficient post-processing scheme, our supervised approach is not only much faster and easier to train but also achieves competitive results that incorporate the practical aspect of vehicle costs. In thorough controlled experiments we compare our method to multiple state-of-the-art approaches where we demonstrate stable performance, while utilizing less vehicles and shed some light on existent inconsistencies in the experimentation protocols of the related work.

Improving Hyperparameter Optimization by Planning Ahead

Oct 15, 2021

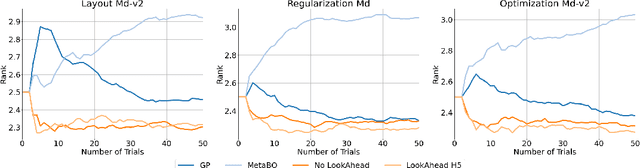

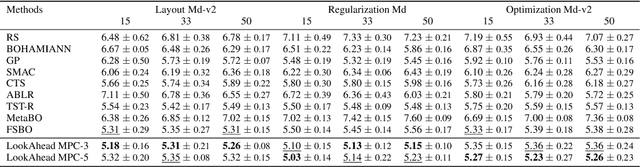

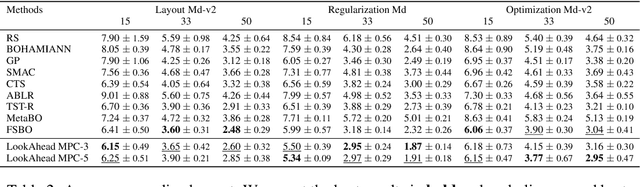

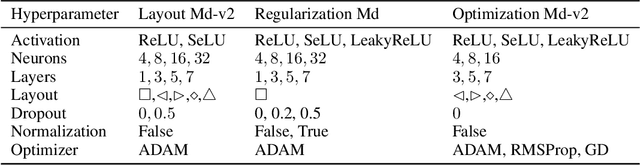

Abstract:Hyperparameter optimization (HPO) is generally treated as a bi-level optimization problem that involves fitting a (probabilistic) surrogate model to a set of observed hyperparameter responses, e.g. validation loss, and consequently maximizing an acquisition function using a surrogate model to identify good hyperparameter candidates for evaluation. The choice of a surrogate and/or acquisition function can be further improved via knowledge transfer across related tasks. In this paper, we propose a novel transfer learning approach, defined within the context of model-based reinforcement learning, where we represent the surrogate as an ensemble of probabilistic models that allows trajectory sampling. We further propose a new variant of model predictive control which employs a simple look-ahead strategy as a policy that optimizes a sequence of actions, representing hyperparameter candidates to expedite HPO. Our experiments on three meta-datasets comparing to state-of-the-art HPO algorithms including a model-free reinforcement learning approach show that the proposed method can outperform all baselines by exploiting a simple planning-based policy.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge