John Mansfield

Looking forward: Linguistic theory and methods

Feb 25, 2025

Abstract:This chapter examines current developments in linguistic theory and methods, focusing on the increasing integration of computational, cognitive, and evolutionary perspectives. We highlight four major themes shaping contemporary linguistics: (1) the explicit testing of hypotheses about symbolic representation, such as efficiency, locality, and conceptual semantic grounding; (2) the impact of artificial neural networks on theoretical debates and linguistic analysis; (3) the importance of intersubjectivity in linguistic theory; and (4) the growth of evolutionary linguistics. By connecting linguistics with computer science, psychology, neuroscience, and biology, we provide a forward-looking perspective on the changing landscape of linguistic research.

Leveraging neural representations for facilitating access to untranscribed speech from endangered languages

Mar 26, 2021

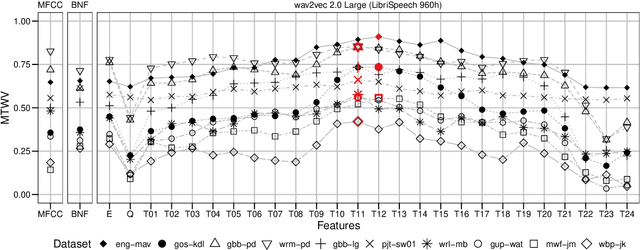

Abstract:For languages with insufficient resources to train speech recognition systems, query-by-example spoken term detection (QbE-STD) offers a way of accessing an untranscribed speech corpus by helping identify regions where spoken query terms occur. Yet retrieval performance can be poor when the query and corpus are spoken by different speakers and produced in different recording conditions. Using data selected from a variety of speakers and recording conditions from 7 Australian Aboriginal languages and a regional variety of Dutch, all of which are endangered or vulnerable, we evaluated whether QbE-STD performance on these languages could be improved by leveraging representations extracted from the pre-trained English wav2vec 2.0 model. Compared to the use of Mel-frequency cepstral coefficients and bottleneck features, we find that representations from the middle layers of the wav2vec 2.0 Transformer offer large gains in task performance (between 56% and 86%). While features extracted using the pre-trained English model yielded improved detection on all the evaluation languages, better detection performance was associated with the evaluation language's phonological similarity to English.
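
To make the retrieval task in the abstract above concrete, here is a minimal sketch of how a query-by-example search can be scored. It assumes subsequence dynamic time warping (DTW) over frame-level feature vectors with cosine distance, which is a common way to do QbE-STD; the abstract does not say which matching procedure the authors used. The function names `cosine_distance` and `subsequence_dtw` are hypothetical, and the random vectors are only a stand-in for real features such as MFCCs or wav2vec 2.0 layer outputs.

```python
import math
import random


def cosine_distance(u, v):
    """Cosine distance between two non-zero feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm


def subsequence_dtw(query, corpus):
    """Find where the whole query best aligns inside a longer corpus.

    query, corpus: lists of equal-dimension feature vectors (one per frame).
    Returns (length-normalised alignment cost, index of the matching end frame).
    """
    n, m = len(query), len(corpus)
    dist = [[cosine_distance(q, c) for c in corpus] for q in query]

    # acc[i][j]: cheapest cost of aligning query[0..i] to a corpus span ending at j.
    acc = [[math.inf] * m for _ in range(n)]
    acc[0] = list(dist[0])  # the match may start at any corpus frame
    for i in range(1, n):
        acc[i][0] = acc[i - 1][0] + dist[i][0]
        for j in range(1, m):
            acc[i][j] = dist[i][j] + min(
                acc[i - 1][j],      # query advances, corpus frame repeats
                acc[i][j - 1],      # corpus advances, query frame repeats
                acc[i - 1][j - 1],  # both advance
            )

    # The match may also end at any corpus frame: pick the cheapest end point.
    end = min(range(m), key=lambda j: acc[n - 1][j])
    return acc[n - 1][end] / n, end


# Toy data (assumption): random 8-dimensional frames standing in for real features.
random.seed(0)
corpus = [[random.gauss(0.0, 1.0) for _ in range(8)] for _ in range(60)]
query = [list(frame) for frame in corpus[20:30]]  # the query is an exact excerpt

cost, end = subsequence_dtw(query, corpus)
print(f"best match ends at frame {end} with normalised cost {cost:.3g}")
```

In this setup, swapping one feature type for another changes only the vectors passed in; the search itself stays the same. A lower normalised cost indicates a more confident detection, so ranking corpus regions by cost gives a simple retrieval list.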
