John Glossner

An Overview of the Drone Open-Source Ecosystem

Oct 05, 2021

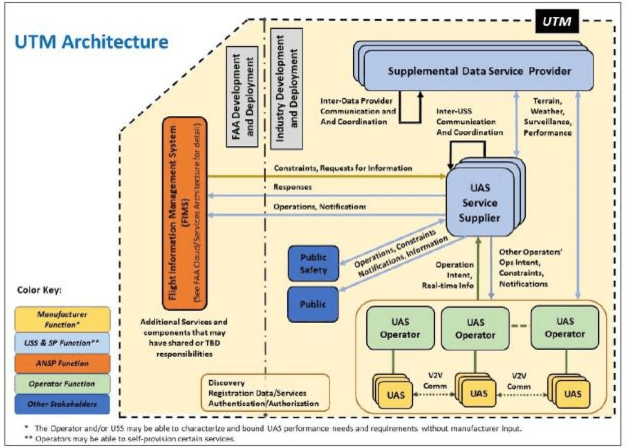

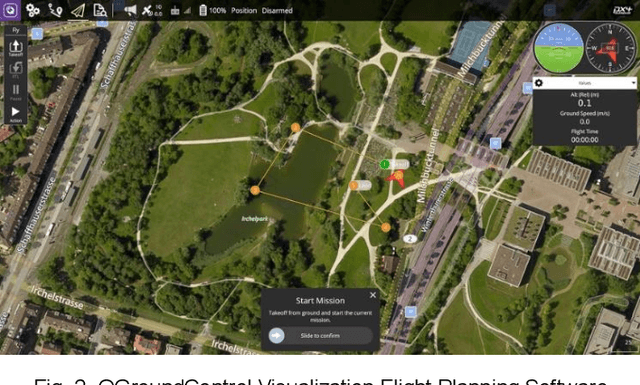

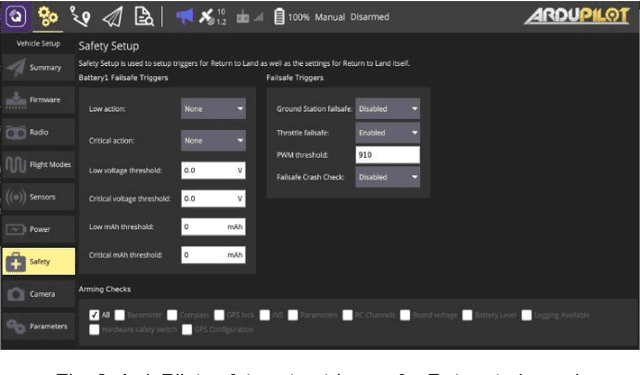

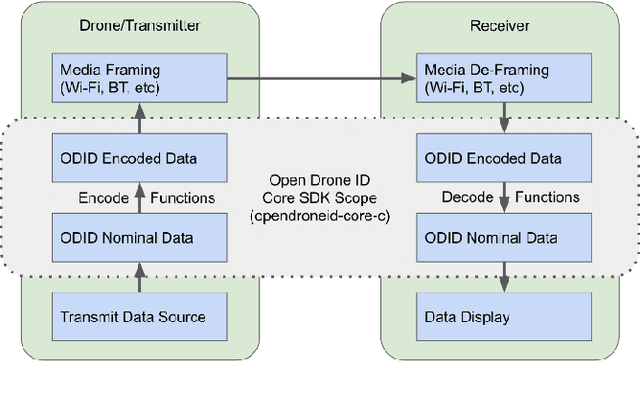

Abstract: Unmanned aerial systems capable of beyond visual line of sight operation can be organized into a top-down hierarchy of layers including flight supervision, command and control, simulation of systems, operating systems, and physical hardware. Flight supervision includes unmanned air traffic management, flight planning, authorization, and remote identification. Command and control ensures drones can be piloted safely. Simulation of systems concerns how drones may react to different environments and how changing conditions and information provide input to a piloting system. Electronic hardware controlling drone operation is typically accessed using an operating system. Each layer in the hierarchy has an ecosystem of open-source solutions. In this brief survey we describe representative open-source examples for each level of the hierarchy.

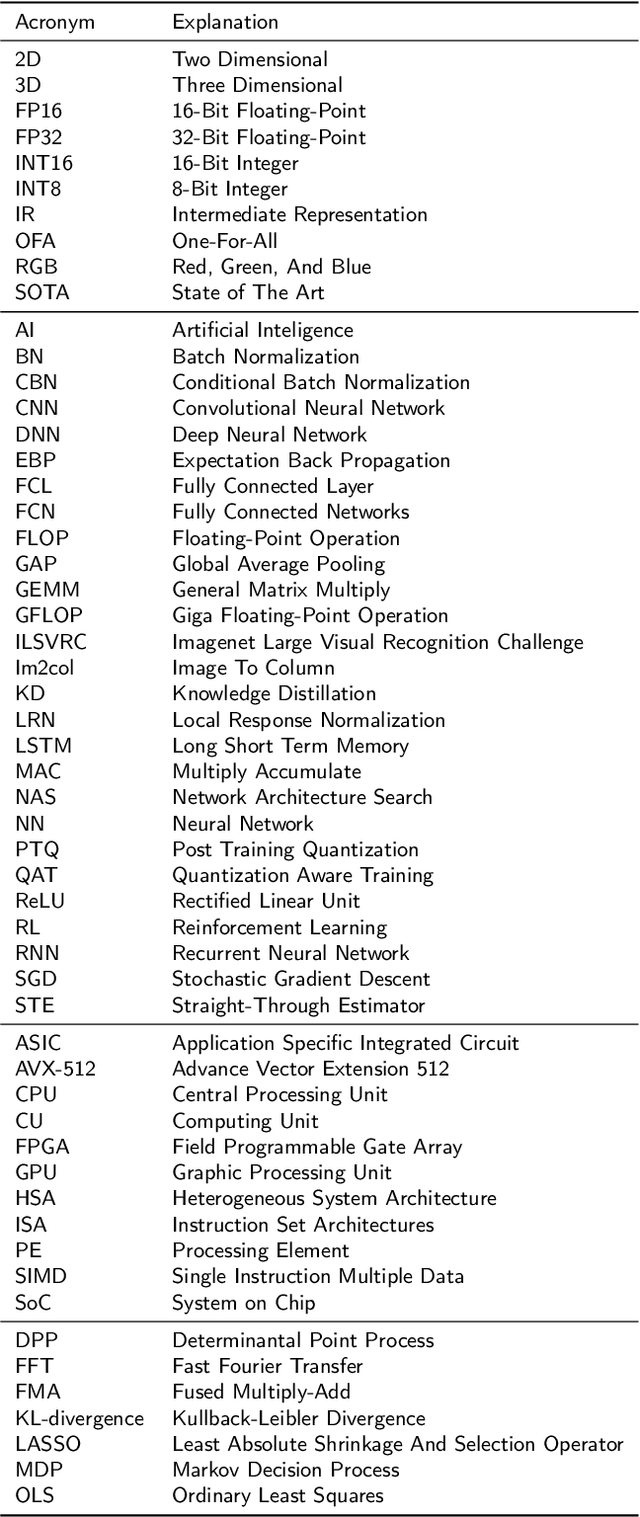

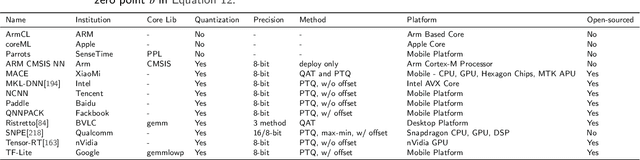

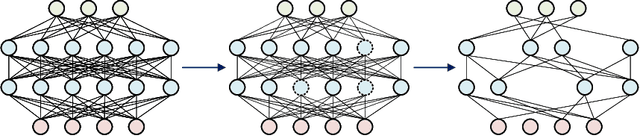

Pruning and Quantization for Deep Neural Network Acceleration: A Survey

Jan 24, 2021

Abstract: Deep neural networks have been applied in many applications, exhibiting extraordinary abilities in the field of computer vision. However, complex network architectures challenge efficient real-time deployment and require significant computation resources and energy costs. These challenges can be overcome through optimizations such as network compression. This paper provides a survey on two types of network compression: pruning and quantization. We compare current techniques, analyze their strengths and weaknesses, provide guidance for compressing networks, and discuss possible future compression techniques.
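As a rough illustration of the two compression families this abstract names, the sketch below shows unstructured magnitude pruning and uniform affine quantization on a flat list of weights. It is not taken from the survey: the function names, the choice of magnitude as the pruning criterion, and the unsigned 8-bit default are all assumptions made for the example.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction of weights (unstructured pruning).

    Hypothetical helper: magnitude is only one of many possible pruning criteria.
    """
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    # Indices ordered from smallest to largest absolute value
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_uniform(weights, num_bits=8):
    """Map floats to unsigned integers in [0, 2**num_bits - 1] (affine scheme)."""
    lo, hi = min(weights), max(weights)
    levels = 2 ** num_bits - 1
    scale = (hi - lo) / levels if hi > lo else 1.0
    q = [round((w - lo) / scale) for w in weights]
    return q, scale, lo


def dequantize(q, scale, lo):
    """Recover approximate float values from the quantized integers."""
    return [v * scale + lo for v in q]


weights = [0.5, -0.1, 0.05, -0.8, 0.3, 0.0]
pruned = magnitude_prune(weights, sparsity=0.5)
q, scale, lo = quantize_uniform(weights, num_bits=8)
restored = dequantize(q, scale, lo)
```

Pruning removes parameters outright, while quantization keeps every parameter but stores it at lower precision; the reconstruction error of the quantizer above is bounded by half of `scale` per weight.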

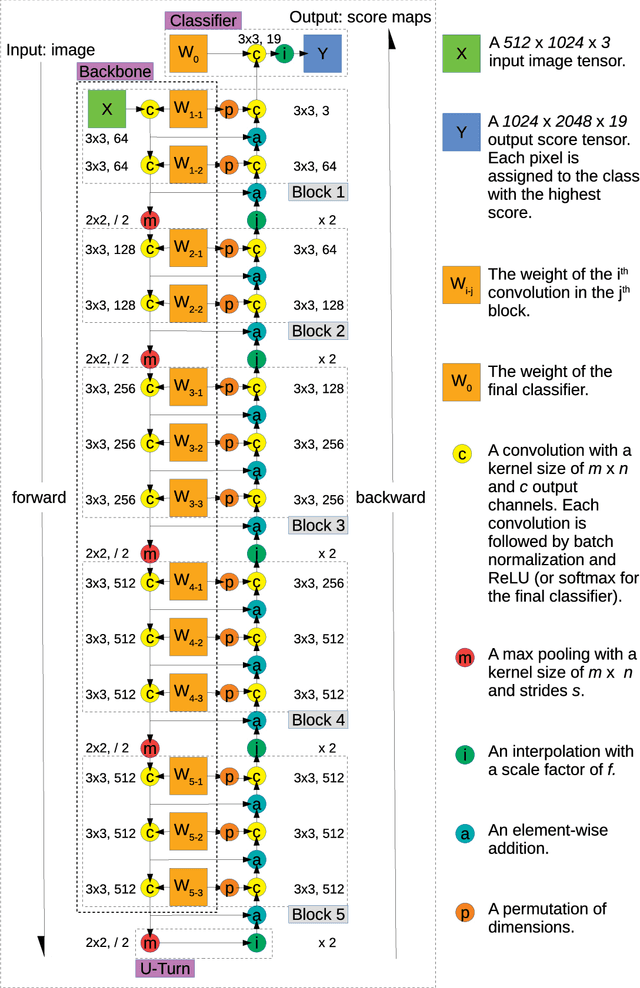

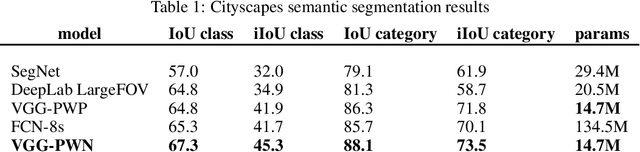

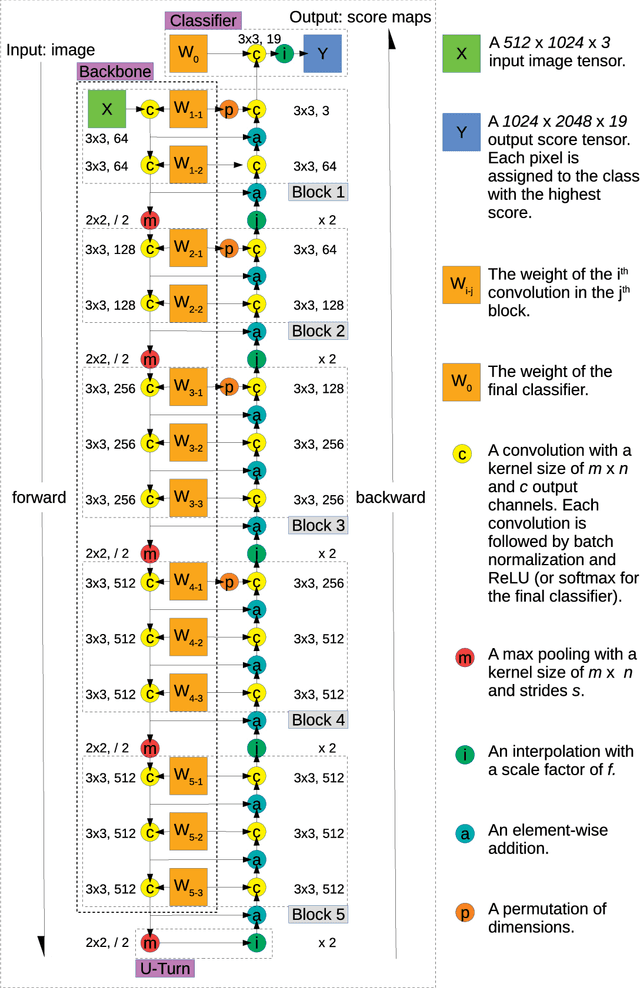

Feedbackward Decoding for Semantic Segmentation

Aug 22, 2019

Abstract: We propose a novel approach for semantic segmentation that uses an encoder in the reverse direction to decode. Many semantic segmentation networks adopt a feedforward encoder-decoder architecture. Typically, an input is first downsampled by the encoder to extract high-level semantic features and continues to be fed forward through the decoder module to recover low-level spatial clues. Our method works in an alternative direction that lets information flow backward from the last layer of the encoder towards the first. The encoder performs encoding in the forward pass and the same network performs decoding in the backward pass. Therefore, the encoder itself is also the decoder. Compared to conventional encoder-decoder architectures, ours doesn't require additional layers for decoding and further reuses the encoder weights, thereby reducing the total number of parameters required for processing. We show that, by using only the 13 convolutional layers from VGG-16 plus one tiny classification layer, our model significantly outperforms other frequently cited models that are also adapted from VGG-16. On the Cityscapes semantic segmentation benchmark, our model uses 50.0% fewer parameters than SegNet and achieves an 18.1% higher "IoU class" score; it uses 28.3% fewer parameters than DeepLab LargeFOV and the achieved "IoU class" score is 3.9% higher; it uses 89.1% fewer parameters than FCN-8s and the achieved "IoU class" score is 3.1% higher. Our code will be publicly available on GitHub later.
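To give a sense of scale for the parameter claims, the sketch below counts the weights and biases in the 13 convolutional layers of the standard VGG-16 configuration (3x3 kernels, with biases). The channel list follows the published VGG-16 layout; the helper names are hypothetical, and the "tiny classification layer" is left out because the abstract does not state its size.

```python
# (in_channels, out_channels) for the 13 3x3 convolutional layers of VGG-16
VGG16_CONV_CHANNELS = [
    (3, 64), (64, 64),
    (64, 128), (128, 128),
    (128, 256), (256, 256), (256, 256),
    (256, 512), (512, 512), (512, 512),
    (512, 512), (512, 512), (512, 512),
]


def conv_params(in_ch, out_ch, kernel=3, bias=True):
    """Parameter count of one square-kernel convolutional layer."""
    return in_ch * out_ch * kernel * kernel + (out_ch if bias else 0)


def encoder_params(layers=VGG16_CONV_CHANNELS):
    """Total parameters across all listed convolutional layers."""
    return sum(conv_params(i, o) for i, o in layers)


total = encoder_params()
print(total)  # 14714688
```

That is roughly 14.7 million parameters for the shared encoder. A separate decoder mirroring these layers would about double the count, which is in line with the 50.0% reduction the abstract reports against SegNet, though the exact baseline figures come from the paper rather than from this calculation.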

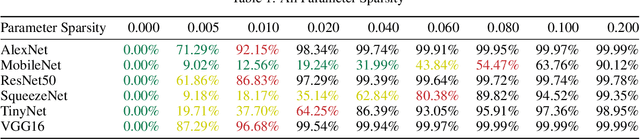

Dynamic Runtime Feature Map Pruning

Dec 24, 2018

Abstract: High bandwidth requirements are an obstacle for accelerating the training and inference of deep neural networks. Most previous research focuses on reducing the size of kernel maps for inference. We analyze parameter sparsity of six popular convolutional neural networks: AlexNet, MobileNet, ResNet-50, SqueezeNet, TinyNet, and VGG16. Of the networks considered, those using ReLU (AlexNet, SqueezeNet, VGG16) contain a high percentage of 0-valued parameters and can be statically pruned. Networks with non-ReLU activation functions in some cases may not contain any 0-valued parameters (ResNet-50, TinyNet). We also investigate runtime feature map usage and find that input feature maps comprise the majority of bandwidth requirements when depth-wise and point-wise convolutions are used. We introduce dynamic runtime pruning of feature maps and show that 10% of dynamic feature map execution can be removed without loss of accuracy. We then extend dynamic pruning to allow for values within an epsilon of zero and show a further 5% reduction of feature map loading with a 1% loss of accuracy in top-1.
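The epsilon-based rule described in this abstract can be sketched as a per-channel check made at runtime. The code below is only an illustration under stated assumptions: it treats each feature map as a 2D list of activations and skips a whole channel when every value lies within `epsilon` of zero. The function name and the per-channel granularity are assumptions, not the authors' implementation.

```python
def select_feature_maps(feature_maps, epsilon=0.0):
    """Return indices of channels that still need to be loaded.

    A channel is skipped when all of its activations are within
    epsilon of zero; epsilon=0.0 skips only exactly-zero channels.
    """
    keep = []
    for idx, fmap in enumerate(feature_maps):
        if any(abs(v) > epsilon for row in fmap for v in row):
            keep.append(idx)
    return keep


feature_maps = [
    [[0.0, 0.0], [0.0, 0.0]],   # all zeros, e.g. after ReLU
    [[0.0, 0.01], [0.0, 0.0]],  # near zero
    [[1.0, 0.0], [0.2, 0.0]],   # clearly active
]

exact = select_feature_maps(feature_maps)                # [1, 2]
relaxed = select_feature_maps(feature_maps, epsilon=0.05)  # [2]
```

With `epsilon=0.0` only the all-zero channel is dropped, so the output is unchanged; raising `epsilon` drops more channels and saves more bandwidth at the cost of some accuracy, which is the trade-off the abstract quantifies.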
