Joachim Nyborg

RainPro-8: An Efficient Deep Learning Model to Estimate Rainfall Probabilities Over 8 Hours

May 15, 2025

Abstract: We present a deep learning model for high-resolution probabilistic precipitation forecasting over an 8-hour horizon in Europe, overcoming the limitations of radar-only deep learning models with short forecast lead times. Our model efficiently integrates multiple data sources - including radar, satellite, and physics-based numerical weather prediction (NWP) - while capturing long-range interactions, resulting in accurate forecasts with robust uncertainty quantification through consistent probabilistic maps. Featuring a compact architecture, it enables more efficient training and faster inference than existing models. Extensive experiments demonstrate that our model surpasses current operational NWP systems, extrapolation-based methods, and deep-learning nowcasting models, setting a new standard for high-resolution precipitation forecasting in Europe, ensuring a balance between accuracy, interpretability, and computational efficiency.

Multi-Source Temporal Attention Network for Precipitation Nowcasting

Oct 11, 2024

Abstract: Precipitation nowcasting is crucial across various industries and plays a significant role in mitigating and adapting to climate change. We introduce an efficient deep learning model for precipitation nowcasting, capable of predicting rainfall up to 8 hours in advance with greater accuracy than existing operational physics-based and extrapolation-based models. Our model leverages multi-source meteorological data and physics-based forecasts to deliver high-resolution predictions in both time and space. It captures complex spatio-temporal dynamics through temporal attention networks and is optimized using data quality maps and dynamic thresholds. Experiments demonstrate that our model outperforms the state of the art and highlight its potential for fast, reliable responses to evolving weather conditions.

RainAI -- Precipitation Nowcasting from Satellite Data

Nov 30, 2023

Abstract: This paper presents a solution to the Weather4Cast 2023 competition, where the goal is to forecast high-resolution precipitation with an 8-hour lead time using lower-resolution satellite radiance images. We propose a simple yet effective method for spatiotemporal feature learning using a 2D U-Net model that outperforms the official 3D U-Net baseline in both performance and efficiency. We place emphasis on refining the dataset through importance sampling and dataset preparation, and show that such techniques have a significant impact on performance. We further study an alternative cross-entropy loss function that improves performance over the standard mean squared error loss, while also enabling models to produce probabilistic outputs. Additional techniques are explored regarding the generation of predictions at different lead times, specifically through Conditioning Lead Time. Lastly, to generate high-resolution forecasts, we evaluate standard and learned upsampling methods. The code and trained parameters are available at https://github.com/rafapablos/w4c23-rainai.

Generalized Classification of Satellite Image Time Series with Thermal Positional Encoding

Mar 17, 2022

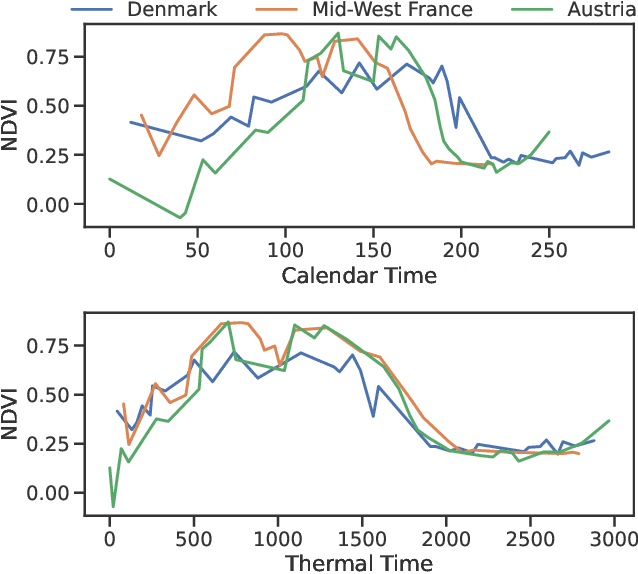

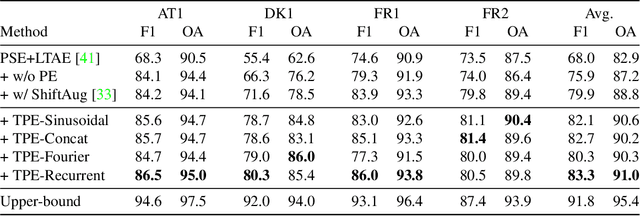

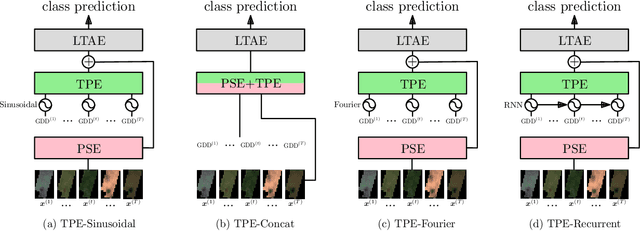

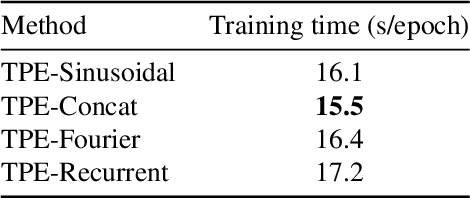

Abstract: Large-scale crop type classification is a task at the core of remote sensing efforts with applications of both economic and ecological importance. Current state-of-the-art deep learning methods are based on self-attention and use satellite image time series (SITS) to discriminate crop types based on their unique growth patterns. However, existing methods generalize poorly to regions not seen during training, mainly because they are not robust to temporal shifts of the growing season caused by variations in climate. To this end, we propose Thermal Positional Encoding (TPE) for attention-based crop classifiers. Unlike previous positional encoding based on calendar time (e.g. day-of-year), TPE is based on thermal time, which is obtained by accumulating daily average temperatures over the growing season. Since crop growth is directly related to thermal time, but not calendar time, TPE addresses the temporal shifts between different regions to improve generalization. We propose multiple TPE strategies, including learnable methods, to further improve results compared to the common fixed positional encodings. We demonstrate our approach on a crop classification task across four different European regions, where we obtain state-of-the-art generalization results.
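To make the idea of thermal time concrete, the following is a minimal sketch, not the authors' implementation. It accumulates daily mean temperatures into thermal time and feeds that value, instead of day-of-year, into a standard fixed sinusoidal positional encoding. The function names, the base temperature of 0 °C, the encoding dimension, and the `max_period` constant are all assumptions made for illustration.

```python
import numpy as np


def thermal_time(daily_mean_temp_c, base_temp_c=0.0):
    """Accumulate daily mean temperatures into thermal time.

    Clipping at a base temperature is an assumption of this sketch;
    the abstract only states that daily averages are accumulated.
    """
    temps = np.asarray(daily_mean_temp_c, dtype=float)
    return np.cumsum(np.maximum(temps - base_temp_c, 0.0))


def sinusoidal_encoding(positions, dim=8, max_period=10000.0):
    """Fixed sinusoidal encoding of arbitrary positions (dim must be even)."""
    pos = np.asarray(positions, dtype=float)[:, None]
    # One frequency per sin/cos pair, as in the usual Transformer encoding.
    freqs = max_period ** (-np.arange(0, dim, 2) / dim)
    angles = pos * freqs
    enc = np.empty((pos.shape[0], dim))
    enc[:, 0::2] = np.sin(angles)
    enc[:, 1::2] = np.cos(angles)
    return enc


# Hypothetical temperatures for three acquisition dates in one region.
tt = thermal_time([5.0, -2.0, 10.0])
tpe = sinusoidal_encoding(tt, dim=8)  # one row per observation
```

Under this sketch, two regions that reach the same accumulated temperature on different calendar days receive the same encoding, which is the property the abstract attributes to TPE.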

Weakly-Supervised Cloud Detection with Fixed-Point GANs

Nov 23, 2021

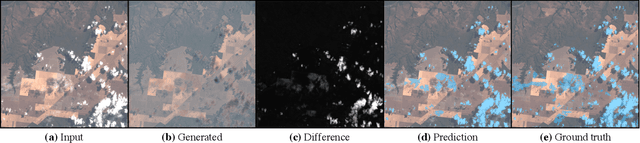

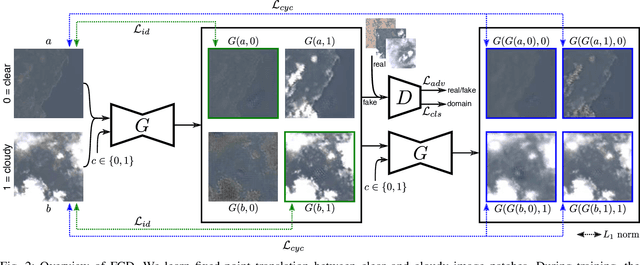

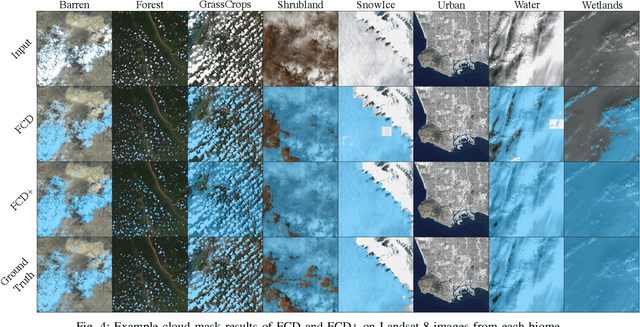

Abstract: The detection of clouds in satellite images is an essential preprocessing task for big data in remote sensing. Convolutional neural networks (CNNs) have greatly advanced the state-of-the-art in the detection of clouds in satellite images, but existing CNN-based methods are costly as they require large amounts of training images with expensive pixel-level cloud labels. To alleviate this cost, we propose Fixed-Point GAN for Cloud Detection (FCD), a weakly-supervised approach. Training with only image-level labels, we learn fixed-point translation between clear and cloudy images, so only clouds are affected during translation. Doing so enables our approach to predict pixel-level cloud labels by translating satellite images to clear ones and setting a threshold to the difference between the two images. Moreover, we propose FCD+, where we exploit the label-noise robustness of CNNs to refine the prediction of FCD, leading to further improvements. We demonstrate the effectiveness of our approach on the Landsat-8 Biome cloud detection dataset, where we obtain performance close to existing fully-supervised methods that train with expensive pixel-level labels. By fine-tuning our FCD+ with just 1% of the available pixel-level labels, we match the performance of fully-supervised methods.
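The final step described above, thresholding the difference between an image and its clear translation, can be sketched as follows. This is an illustration only: the trained generator is not reproduced, so the translated image is passed in as an array, and the function name, the channel-wise mean, and the threshold value are assumptions rather than details from the paper.

```python
import numpy as np


def cloud_mask_from_translation(image, clear_image, threshold=0.1):
    """Return a boolean (H, W) mask of pixels changed by the translation.

    `image` and `clear_image` are (H, W, C) arrays; `clear_image` stands in
    for the output of a generator that translates the input to a clear scene.
    """
    image = np.asarray(image, dtype=float)
    clear_image = np.asarray(clear_image, dtype=float)
    # Averaging the absolute difference over channels is an assumption.
    diff = np.abs(image - clear_image).mean(axis=-1)
    return diff > threshold


# Hypothetical 2x2 scene with 3 bands; one bright pixel is removed
# by the (stand-in) translation and is therefore flagged as cloud.
observed = np.full((2, 2, 3), 0.2)
observed[0, 1] = 0.9
translated = np.full((2, 2, 3), 0.2)
mask = cloud_mask_from_translation(observed, translated)
```

Because a fixed-point translation is meant to leave cloud-free pixels unchanged, the difference image is near zero everywhere except where clouds were removed.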

TimeMatch: Unsupervised Cross-Region Adaptation by Temporal Shift Estimation

Nov 04, 2021

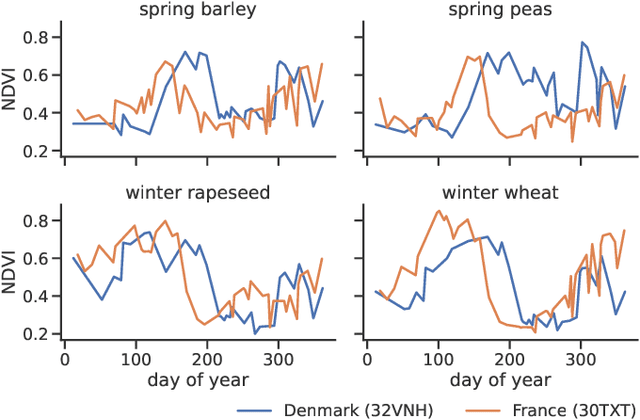

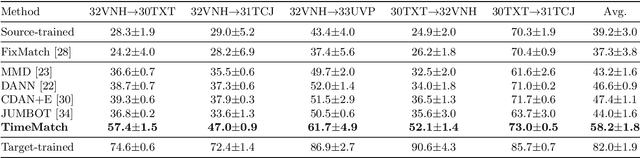

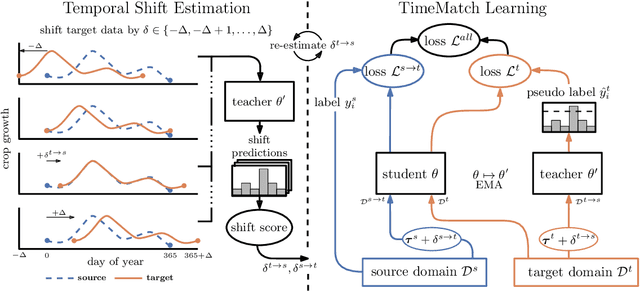

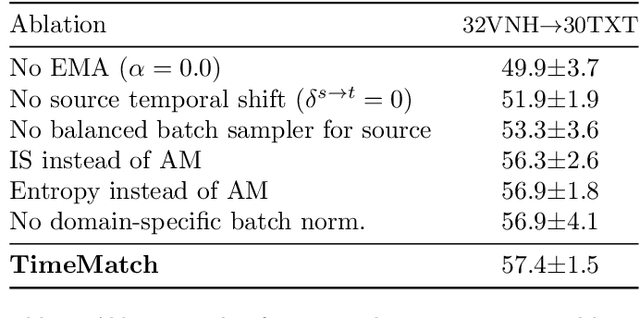

Abstract: Recent developments in deep learning models that capture the complex temporal patterns of crop phenology have greatly advanced crop classification of Satellite Image Time Series (SITS). However, when applied to target regions spatially different from the training region, these models perform poorly without any target labels due to the temporal shift of crop phenology between regions. To address this unsupervised cross-region adaptation setting, existing methods learn domain-invariant features without any target supervision, but not the temporal shift itself. As a consequence, these techniques provide only limited benefits for SITS. In this paper, we propose TimeMatch, a new unsupervised domain adaptation method for SITS that directly accounts for the temporal shift. TimeMatch consists of two components: 1) temporal shift estimation, which estimates the temporal shift of the unlabeled target region with a source-trained model, and 2) TimeMatch learning, which combines temporal shift estimation with semi-supervised learning to adapt a classifier to an unlabeled target region. We also introduce an open-access dataset for cross-region adaptation with SITS from four different regions in Europe. On this dataset, we demonstrate that TimeMatch outperforms all competing methods by 11% in F1-score across five different adaptation scenarios, setting a new state-of-the-art for cross-region adaptation.
