Jizhao Liu

BIMII-Net: Brain-Inspired Multi-Iterative Interactive Network for RGB-T Road Scene Semantic Segmentation

Mar 25, 2025

Abstract:RGB-T road scene semantic segmentation fuses information from RGB and thermal images to improve visual scene understanding in complex environments with inadequate illumination or occlusion. Nevertheless, existing RGB-T semantic segmentation models typically either depend on simple addition or concatenation strategies or ignore the differences between information at different levels. To address these issues, we propose a novel RGB-T road scene semantic segmentation network called Brain-Inspired Multi-Iteration Interaction Network (BIMII-Net). First, to meet the requirements of accurate texture and local information extraction in road scenarios such as autonomous driving, we propose a deep continuous-coupled neural network (DCCNN) architecture based on a brain-inspired model. Second, to enhance the interaction and expression capabilities among multi-modal information, we design a cross explicit attention-enhanced fusion module (CEAEF-Module) in the feature fusion stage of BIMII-Net to effectively integrate features at different levels. Finally, we construct a complementary interactive multi-layer decoder structure that incorporates the shallow-level feature iteration module (SFI-Module), the deep-level feature iteration module (DFI-Module), and the multi-feature enhancement module (MFE-Module) to collaboratively extract texture details and global skeleton information, with multi-module joint supervision further optimizing the segmentation results. Experimental results demonstrate that BIMII-Net achieves state-of-the-art (SOTA) performance in the brain-inspired computing domain and outperforms most existing RGB-T semantic segmentation methods. It also exhibits strong generalization capabilities on multiple RGB-T datasets, demonstrating the effectiveness of brain-inspired computer models in multi-modal image segmentation tasks.

Chaos in Motion: Unveiling Robustness in Remote Heart Rate Measurement through Brain-Inspired Skin Tracking

Apr 11, 2024

Abstract:Heart rate is an important physiological indicator of human health status. Existing remote heart rate measurement methods typically involve facial detection followed by signal extraction from the region of interest (ROI). These SOTA methods have three serious problems: (a) inaccuracies or even failures in detection caused by environmental influences or subject movement; (b) failures for special patients such as infants and burn victims; (c) privacy leakage resulting from the collection of face video. To address these issues, we regard remote heart rate measurement as the process of analyzing the spatiotemporal characteristics of the optical flow signal in the video. We apply chaos theory to computer vision tasks for the first time, thus designing a brain-inspired framework. First, we use an artificial primary visual cortex model to extract the skin in the videos; we then calculate heart rate by time-frequency analysis on all pixels. Our method achieves Robust Skin Tracking for Heart Rate measurement, called HR-RST. The experimental results show that HR-RST overcomes the difficulty of environmental influences and effectively tracks subject movement. Moreover, the method can be extended to other body parts. Consequently, it can be applied to special patients and effectively protects individual privacy, offering an innovative solution.
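The frequency-analysis step can be illustrated with a minimal sketch. This is not the HR-RST pipeline: it assumes a single, already extracted intensity trace (a synthetic stand-in for an averaged skin-pixel signal), and the function name `dominant_frequency_bpm`, the 0.7 to 3.0 Hz search band, and the 30 fps frame rate are all hypothetical choices made for illustration.

```python
import math

def dominant_frequency_bpm(signal, fps, low_hz=0.7, high_hz=3.0):
    """Return the strongest frequency within [low_hz, high_hz], in beats per minute.

    Uses a plain discrete Fourier transform restricted to the chosen band.
    The band limits are an assumption, not values taken from the paper.
    """
    n = len(signal)
    mean = sum(signal) / n
    centered = [s - mean for s in signal]  # remove the DC component
    best_freq, best_power = 0.0, -1.0
    for k in range(1, n // 2 + 1):
        freq = k * fps / n  # frequency of DFT bin k in Hz
        if not (low_hz <= freq <= high_hz):
            continue
        re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power
    return best_freq * 60.0

# Synthetic trace: a 1.2 Hz "pulse" plus a slow 0.1 Hz drift, sampled for 10 s.
fps = 30
trace = [
    math.sin(2 * math.pi * 1.2 * t / fps) + 0.3 * math.sin(2 * math.pi * 0.1 * t / fps)
    for t in range(300)
]
bpm = dominant_frequency_bpm(trace, fps)
print(round(bpm, 1))
```

Restricting the search to a plausible heart-rate band is what keeps the slow drift component from being picked as the dominant frequency.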

InvKA: Gait Recognition via Invertible Koopman Autoencoder

Sep 27, 2023

Abstract:Most current gait recognition methods suffer from poor interpretability and high computational cost. To improve interpretability, we investigate gait features in the embedding space based on Koopman operator theory. The transition matrix in this space, namely the Koopman operator, captures complex kinematic features of gait cycles. The diagonal elements of the operator matrix can represent the overall motion trend, providing a physically meaningful descriptor. To reduce the computational cost of our algorithm, we use a reversible autoencoder to reduce the model size and eliminate convolutional layers to compress its depth, resulting in fewer floating-point operations. Experimental results on multiple datasets show that our method reduces computational cost to 1% of that of state-of-the-art methods while achieving a competitive recognition accuracy of 98% on non-occlusion datasets.
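The idea of a linear transition matrix acting in an embedding space can be sketched as follows. This is only an illustration under strong assumptions: the embedding is taken to be the identity on a synthetic 2-D trajectory (the paper learns it with an invertible autoencoder), and the operator is fitted by ordinary least squares. The helper `fit_linear_operator` and the toy dynamics are hypothetical.

```python
import math

def fit_linear_operator(states):
    """Least-squares 2x2 matrix K such that states[t + 1] is approximately K @ states[t]."""
    xs, ys = states[:-1], states[1:]
    # g = sum over t of x x^T, c = sum over t of y x^T (both 2x2)
    g = [[sum(x[i] * x[j] for x in xs) for j in range(2)] for i in range(2)]
    c = [[sum(y[i] * x[j] for x, y in zip(xs, ys)) for j in range(2)] for i in range(2)]
    det = g[0][0] * g[1][1] - g[0][1] * g[1][0]
    g_inv = [[g[1][1] / det, -g[0][1] / det], [-g[1][0] / det, g[0][0] / det]]
    # K = c @ g_inv, the normal-equation solution of the least-squares problem
    return [[sum(c[i][k] * g_inv[k][j] for k in range(2)) for j in range(2)] for i in range(2)]

# Toy "gait cycle": a slowly decaying rotation in a 2-D embedding space.
theta, decay = 0.3, 0.98
true_k = [
    [decay * math.cos(theta), -decay * math.sin(theta)],
    [decay * math.sin(theta), decay * math.cos(theta)],
]
states = [[1.0, 0.0]]
for _ in range(50):
    z = states[-1]
    states.append([
        true_k[0][0] * z[0] + true_k[0][1] * z[1],
        true_k[1][0] * z[0] + true_k[1][1] * z[1],
    ])

k_est = fit_linear_operator(states)
diagonal = [k_est[0][0], k_est[1][1]]  # descriptor built from the diagonal entries
print(diagonal)
```

In this toy case the diagonal entries recover `decay * cos(theta)`, which gives a rough sense of how diagonal elements of a transition matrix can summarize an overall motion trend.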

A Novel Neuron Model of Visual Processor

Apr 15, 2021

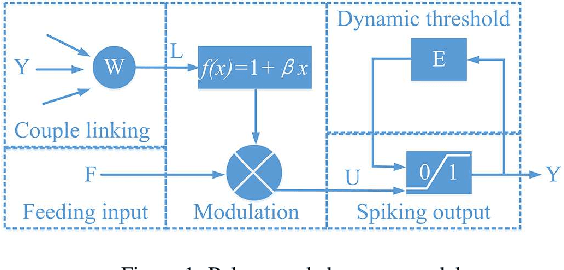

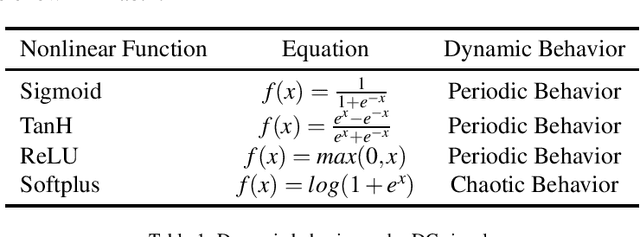

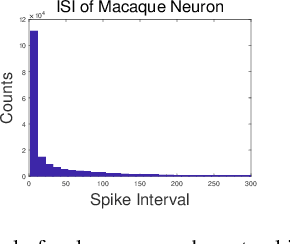

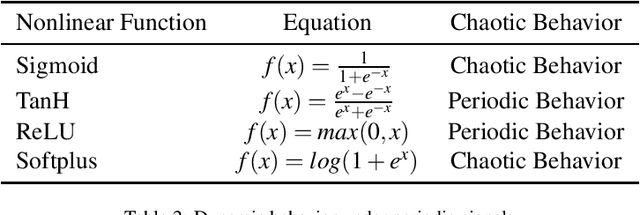

Abstract:Simulating and imitating the neuronal network of humans or mammals is a popular topic that has been explored for many years in the fields of pattern recognition and computer vision. Inspired by neuronal conduction characteristics in the primary visual cortex of cats, pulse-coupled neural networks (PCNNs) can exhibit synchronous oscillation behavior, which can process digital images without training. However, according to the study of single cells in the cat primary visual cortex, when a neuron is stimulated by an external periodic signal, the interspike-interval (ISI) distributions are multimodal. This phenomenon cannot be explained by any PCNN model. By analyzing the working mechanism of the PCNN, we present a novel neuron model of the primary visual cortex consisting of a continuous-coupled neural network (CCNN). Our model inherits the threshold exponential decay and synchronous pulse oscillation properties of the original PCNN model, and it can exhibit chaotic behavior consistent with the testing results of cat primary visual cortex neurons. Therefore, our CCNN model is closer to real visual neural networks. For image segmentation tasks, the algorithm based on the CCNN model performs better than state-of-the-art visual cortex neural network models. The strength of our approach is that it helps neurophysiologists further understand how the primary visual cortex works and can be used to quantitatively predict the temporal-spatial behavior of real neural networks. CCNN may also inspire engineers to create brain-inspired deep learning networks for artificial intelligence purposes.
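The difference between a pulsed and a continuous neuron output can be sketched with a single, uncoupled neuron. This is a simplified illustration, not the model from the paper: the update rules follow a commonly used simplified PCNN form with an exponentially decaying threshold, the sigmoid output used for the continuous variant is an assumption, and the function `simulate_neuron` and every parameter value are hypothetical.

```python
import math

def simulate_neuron(stimulus, steps, continuous, alpha_f=0.1, alpha_e=0.3, v_e=20.0):
    """Simulate one uncoupled neuron driven by a constant stimulus.

    continuous=False: hard-threshold (pulse) output, as in a simplified PCNN.
    continuous=True:  sigmoid output, an assumed stand-in for a continuous-coupled neuron.
    """
    u, e, outputs = 0.0, 0.0, []
    for _ in range(steps):
        u = math.exp(-alpha_f) * u + stimulus  # leaky internal activity
        if continuous:
            y = 1.0 / (1.0 + math.exp(-(u - e)))  # graded response
        else:
            y = 1.0 if u > e else 0.0  # fire when activity exceeds the threshold
        e = math.exp(-alpha_e) * e + v_e * y  # threshold decays, then jumps with the output
        outputs.append(y)
    return outputs

pulses = simulate_neuron(stimulus=1.0, steps=50, continuous=False)
graded = simulate_neuron(stimulus=1.0, steps=50, continuous=True)
print(int(sum(pulses)), min(graded), max(graded))
```

The pulsed variant emits only 0 or 1 and fires again each time the decaying threshold drops below the internal activity, while the continuous variant produces values strictly between 0 and 1.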
