Jiyun Park

A Real-Time Lyrics Alignment System Using Chroma And Phonetic Features For Classical Vocal Performance

Jan 17, 2024

Abstract: The goal of real-time lyrics alignment is to take live singing audio as input and pinpoint the exact position within the given lyrics on the fly. The task can benefit real-world applications such as the automatic subtitling of live concerts or operas. However, designing a real-time model is challenging because it can use only past input and must operate with minimal latency. Furthermore, because datasets for real-time lyrics alignment are lacking, previous studies have mostly been evaluated on private in-house datasets, so no standard evaluation method exists. This paper presents a real-time lyrics alignment system for classical vocal performances with two contributions. First, we improve the lyrics alignment algorithm by finding an optimal combination of chromagram and phonetic posteriorgram (PPG), which capture the melodic and phonetic features of the singing voice, respectively. Second, we recast the Schubert Winterreise Dataset (SWD), which contains multiple performance renditions of the same pieces, as an evaluation set for real-time lyrics alignment.

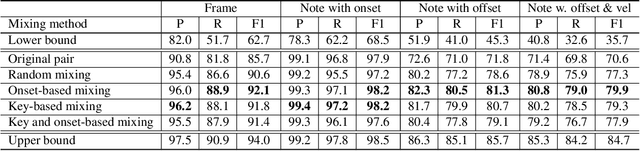

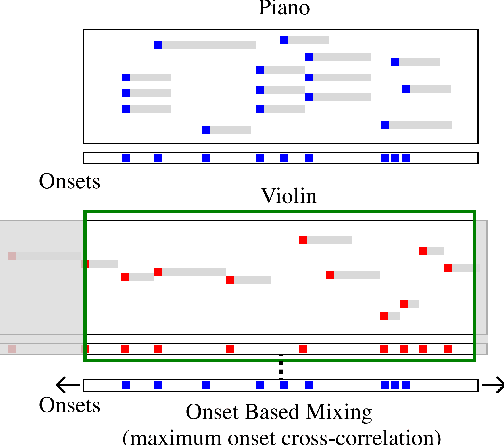

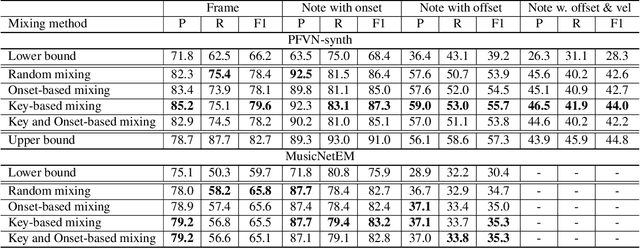

A study of audio mixing methods for piano transcription in violin-piano ensembles

May 23, 2023

Abstract: While piano music transcription models have shown high performance on solo piano recordings, their performance degrades when applied to ensemble recordings. This study analyzes the impact of different data augmentation methods on piano transcription performance, focusing on mixing techniques applied to violin-piano ensembles. We apply mixing methods that consider both the harmonic and temporal characteristics of the audio. To create datasets for this study, we generated the PFVN-synth dataset, which contains 7 hours of violin-piano ensemble audio and corresponding labels rendered from MIDI files, and we also collected unaccompanied violin recordings and mixed them with the MAESTRO dataset. We evaluated the transcription results on datasets of both synthesized and real audio recordings.
