Jitao Ma

High-Energy Concentration for Federated Learning in Frequency Domain

Sep 16, 2025

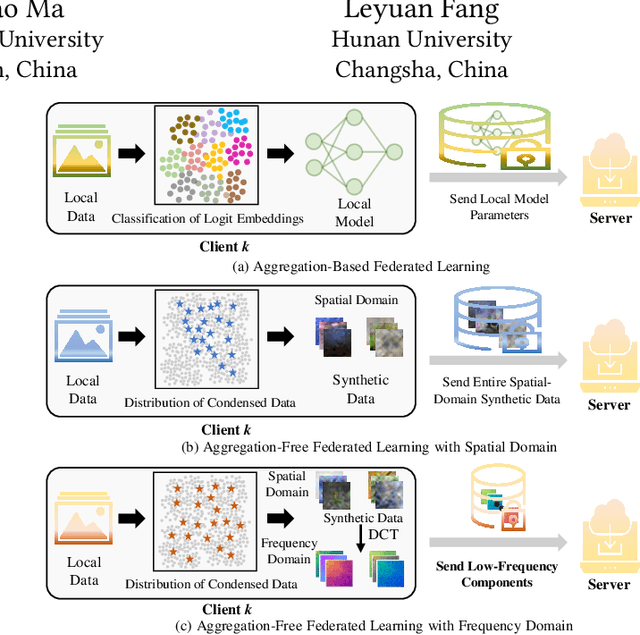

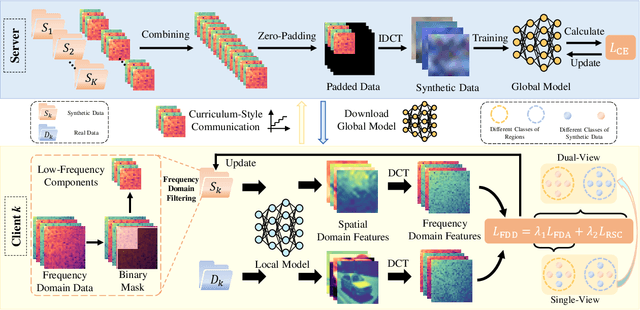

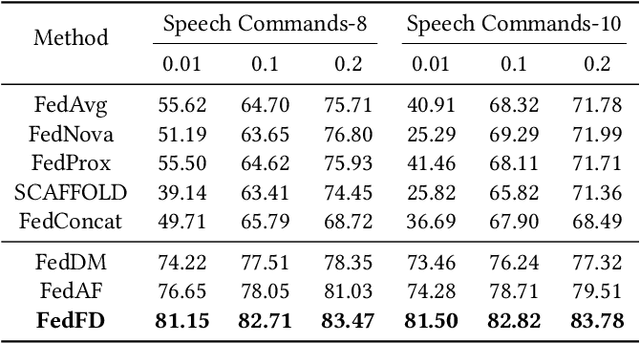

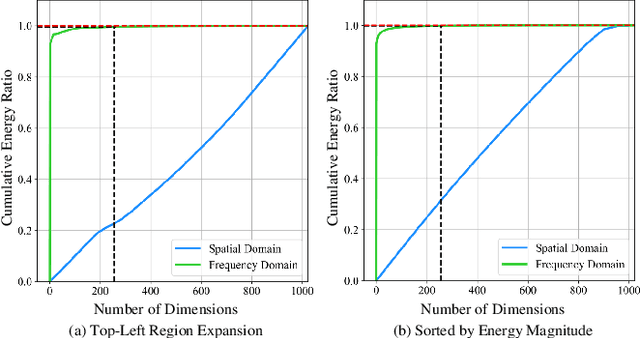

Abstract: Federated Learning (FL) presents significant potential for collaborative optimization without data sharing. One line of FL frameworks leverages the popular concept of dataset distillation: synthetic data, rather than real data, is sent to the server, which protects real data privacy while alleviating data heterogeneity. However, such methods are designed entirely in the spatial domain and are still challenged by redundant information and noise, which inevitably increases the communication burden. In this paper, we propose a novel Frequency-Domain aware FL method with high-energy concentration (FedFD) to address this problem. FedFD is inspired by the observation that the discrete cosine transform concentrates most of the energy in specific regions, referred to as high-energy concentration. The principle behind FedFD is that low-energy components, such as high-frequency components, usually contain redundant information and noise, so filtering them out helps reduce communication costs and improve performance. FedFD is mathematically formulated to preserve the low-frequency components using a binary mask, facilitating an optimal solution through frequency-domain distribution alignment. In particular, a real data-driven synthetic classification term is added to the loss to enhance the quality of the low-frequency components. On five image and speech datasets, FedFD outperforms state-of-the-art methods while reducing communication costs. For example, on the CIFAR-10 dataset with Dirichlet coefficient $\alpha = 0.01$, FedFD reduces the communication cost by at least 37.78% while attaining a 10.88% performance gain.
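The energy-concentration idea in this abstract can be illustrated with a small NumPy sketch. This is not the FedFD implementation: the function names (`dct_matrix`, `low_frequency_mask`, `compress`), the square top-left mask shape, the `keep` parameter, and the smooth test image are all assumptions made for illustration. The sketch applies an orthonormal 2D DCT-II, keeps only a low-frequency block through a binary mask, and reports how much of the signal energy survives.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix C, so that C @ C.T is the identity."""
    k = np.arange(n)[:, None]   # frequency index (rows)
    i = np.arange(n)[None, :]   # sample index (columns)
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] /= np.sqrt(2.0)     # rescale the DC row for orthonormality
    return c

def low_frequency_mask(n, keep):
    """Binary mask that keeps the top-left keep x keep block (assumed shape)."""
    mask = np.zeros((n, n))
    mask[:keep, :keep] = 1.0
    return mask

def compress(image, keep):
    """Mask the 2D DCT of a square image; return reconstruction and energy ratio."""
    n = image.shape[0]
    c = dct_matrix(n)
    coeffs = c @ image @ c.T                 # forward 2D DCT
    kept = coeffs * low_frequency_mask(n, keep)
    energy_ratio = np.sum(kept ** 2) / np.sum(coeffs ** 2)
    recon = c.T @ kept @ c                   # inverse 2D DCT
    return recon, energy_ratio

# Hypothetical smooth test image; natural images are usually less smooth.
n, keep = 32, 8
y, x = np.mgrid[0:n, 0:n] / n
image = np.sin(2 * np.pi * x) + 0.5 * np.cos(2 * np.pi * y) + x * y

recon, energy_ratio = compress(image, keep)
sent_fraction = keep ** 2 / n ** 2           # share of coefficients retained
print(f"kept {sent_fraction:.2%} of coefficients, "
      f"{energy_ratio:.2%} of the energy")
```

Because the transform is orthonormal, the energy ratio computed on the coefficients equals the energy ratio of the reconstructed signal, which is why discarding low-energy coefficients costs little in reconstruction quality.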

Towards Efficient Model-Heterogeneity Federated Learning for Large Models

Nov 25, 2024

Abstract: As demand grows for complex tasks and high-performance applications in edge computing, the deployment of large models in federated learning has become increasingly urgent, given their superior representational power and generalization capabilities. However, the resource constraints and heterogeneity among clients present significant challenges to this deployment. To tackle these challenges, we introduce HeteroTune, an innovative fine-tuning framework tailored for model-heterogeneity federated learning (MHFL). In particular, we propose a novel parameter-efficient fine-tuning (PEFT) structure, called FedAdapter, which employs a multi-branch cross-model aggregator to enable efficient knowledge aggregation across diverse models. Benefiting from the lightweight FedAdapter, our approach significantly reduces both the computational and communication overhead. Finally, our approach is simple yet effective, making it applicable to a wide range of large model fine-tuning tasks. Extensive experiments on computer vision (CV) and natural language processing (NLP) tasks demonstrate that our method achieves state-of-the-art results, seamlessly integrating efficiency and performance.

Exploring Hyperspectral Anomaly Detection with Human Vision: A Small Target Aware Detector

Jan 02, 2024

Abstract: Hyperspectral anomaly detection (HAD) aims to localize pixel points whose spectral features differ from the background. HAD is essential in scenarios with unknown or camouflaged target features, such as water quality monitoring, crop growth monitoring and camouflaged target detection, where prior information about targets is difficult to obtain. Existing HAD methods aim to objectively detect and distinguish background and anomalous spectra, which can be achieved almost effortlessly by human perception. However, the underlying processes of human visual perception are thought to be quite complex. In this paper, we analyze hyperspectral image (HSI) features under human visual perception, and transfer the solution process of HAD to the more robust feature space for the first time. Specifically, we propose a small target aware detector (STAD), which introduces saliency maps to capture HSI features closer to human visual perception. STAD not only extracts more anomalous representations, but also reduces the impact of low-confidence regions through a proposed small target filter (STF). Furthermore, considering the possibility of HAD algorithms being applied to edge devices, we propose a knowledge distillation strategy from a fully connected network to a convolutional network. It can learn the spectral and spatial features of the HSI while making the network more lightweight. We train the network on the HAD100 training set and validate the proposed method on the HAD100 test set. Our method provides a new solution space for HAD that is closer to human visual perception with high confidence. Extensive experiments on real HSIs with multiple method comparisons demonstrate the excellent performance and unique potential of the proposed method. The code is available at https://github.com/majitao-xd/STAD-HAD.

RS-DGC: Exploring Neighborhood Statistics for Dynamic Gradient Compression on Remote Sensing Image Interpretation

Dec 29, 2023

Abstract: Distributed deep learning has recently been attracting more attention in remote sensing (RS) applications due to the challenges posed by the increased amount of open data that are produced daily by Earth observation programs. However, the high communication costs of sending model updates among multiple nodes are a significant bottleneck for scalable distributed learning. Gradient sparsification has been validated as an effective gradient compression (GC) technique for reducing communication costs and thus accelerating training. Existing state-of-the-art gradient sparsification methods are mostly based on the "larger-absolute-more-important" criterion, ignoring the importance of small gradients, which is generally observed to affect the performance. Inspired by the informative representation of manifold structures from neighborhood information, we propose a simple yet effective dynamic gradient compression scheme leveraging a neighborhood statistics indicator for RS image interpretation, termed RS-DGC. We first enhance the interdependence between gradients by introducing the gradient neighborhood to reduce the effect of random noise. The key component of RS-DGC is a Neighborhood Statistical Indicator (NSI), which can quantify the importance of gradients within a specified neighborhood on each node to sparsify the local gradients before gradient transmission in each iteration. Further, a layer-wise dynamic compression scheme is proposed to track the importance changes of each layer in real time. Extensive downstream tasks validate the superiority of our method in terms of intelligent interpretation of RS images. For example, we achieve an accuracy improvement of 0.51% with more than 50 times communication compression on the NWPU-RESISC45 dataset using the VGG-19 network.
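For context, the "larger-absolute-more-important" criterion that this abstract contrasts itself with can be sketched as plain top-k sparsification. This is the baseline idea only, not RS-DGC: the abstract does not define the Neighborhood Statistical Indicator, so it is not reproduced here. The function names (`topk_sparsify`, `densify`), the `ratio` parameter, and the random gradient are hypothetical, and the compression figure ignores the cost of transmitting indices.

```python
import numpy as np

def topk_sparsify(grad, ratio):
    """Keep the `ratio` fraction of entries with the largest absolute value."""
    flat = grad.ravel()
    k = max(1, int(round(flat.size * ratio)))
    # argpartition places the k largest |values| in the last k positions
    idx = np.argpartition(np.abs(flat), flat.size - k)[-k:]
    return idx, flat[idx]

def densify(idx, values, shape):
    """Rebuild a dense gradient with zeros everywhere except the kept entries."""
    flat = np.zeros(int(np.prod(shape)), dtype=values.dtype)
    flat[idx] = values
    return flat.reshape(shape)

# Hypothetical gradient of one layer, drawn at random for illustration.
rng = np.random.default_rng(0)
grad = rng.normal(size=(100, 50))

idx, values = topk_sparsify(grad, ratio=0.02)
sparse_grad = densify(idx, values, grad.shape)

# Ratio of dense values to transmitted values (index overhead not counted).
compression = grad.size / values.size
```

Keeping 2% of the entries corresponds to a 50-fold reduction in transmitted values, which gives a sense of the sparsity level behind the "more than 50 times" figure quoted above.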

BSDM: Background Suppression Diffusion Model for Hyperspectral Anomaly Detection

Jul 19, 2023

Abstract: Hyperspectral anomaly detection (HAD) is widely used in Earth observation and deep space exploration. A major challenge for HAD is the complex background of the input hyperspectral images (HSIs), which causes anomalies to be confused with the background. In addition, the lack of labeled samples for HSIs leads to poor generalization of existing HAD methods. This paper makes the first attempt to study a new and generalizable background learning problem without labeled samples. We present a novel solution, BSDM (background suppression diffusion model), for HAD, which can simultaneously learn latent background distributions and generalize to different datasets for suppressing complex backgrounds. It has three main features: (1) For the complex background of HSIs, we design pseudo background noise and learn the potential background distribution in it with a diffusion model (DM). (2) For the generalizability problem, we apply a statistical offset module so that the BSDM adapts to datasets of different domains without labeled samples. (3) To achieve background suppression, we improve the inference process of the DM by feeding the original HSIs into the denoising network, which removes the background as noise. Our work paves a new way of background suppression for HAD that can improve HAD performance without the prerequisite of manually labeled data. Assessments and generalization experiments of four HAD methods on several real HSI datasets demonstrate the above three unique properties of the proposed method. The code is available at https://github.com/majitao-xd/BSDM-HAD.
