Jinsheng Sun

Open-world Point Cloud Semantic Segmentation: A Human-in-the-loop Framework

Aug 07, 2025

Abstract: Open-world point cloud semantic segmentation (OW-Seg) aims to predict point labels of both base and novel classes in real-world scenarios. However, existing methods rely on resource-intensive offline incremental learning or densely annotated support data, limiting their practicality. To address these limitations, we propose HOW-Seg, the first human-in-the-loop framework for OW-Seg. Specifically, we construct class prototypes, the fundamental segmentation units, directly on the query data, avoiding the prototype bias caused by intra-class distribution shifts between the support and query data. By leveraging sparse human annotations as guidance, HOW-Seg enables prototype-based segmentation for both base and novel classes. Considering the lack of granularity of initial prototypes, we introduce a hierarchical prototype disambiguation mechanism to refine ambiguous prototypes, which correspond to annotations of different classes. To further enrich contextual awareness, we employ a dense conditional random field (CRF) upon the refined prototypes to optimize their label assignments. Through iterative human feedback, HOW-Seg dynamically improves its predictions, achieving high-quality segmentation for both base and novel classes. Experiments demonstrate that with sparse annotations (e.g., one-novel-class-one-click), HOW-Seg matches or surpasses the state-of-the-art generalized few-shot segmentation (GFS-Seg) method under the 5-shot setting. When using advanced backbones (e.g., Stratified Transformer) and denser annotations (e.g., 10 clicks per sub-scene), HOW-Seg achieves 85.27% mIoU on S3DIS and 66.37% mIoU on ScanNetv2, significantly outperforming alternatives.
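
To make the idea of click-guided, prototype-based labeling concrete, here is a minimal sketch in plain Python. It is not HOW-Seg itself: it assumes each class prototype is simply the mean feature of the points a user clicked for that class, and it labels every point by its nearest prototype in Euclidean distance. The hierarchical disambiguation step and the dense CRF described in the abstract are omitted, and the names `build_prototypes` and `segment` are hypothetical.

```python
from collections import defaultdict
from math import dist


def build_prototypes(features, clicks):
    """Average the features of the clicked points for each class label.

    features: list of equal-length float tuples, one per point.
    clicks:   dict mapping point index -> class label (sparse annotations).
    Assumption for this sketch: one mean-feature prototype per class.
    """
    grouped = defaultdict(list)
    for idx, label in clicks.items():
        grouped[label].append(features[idx])
    return {
        label: tuple(sum(col) / len(vecs) for col in zip(*vecs))
        for label, vecs in grouped.items()
    }


def segment(features, prototypes):
    """Label every point with the class of its nearest prototype."""
    return [
        min(prototypes, key=lambda label: dist(f, prototypes[label]))
        for f in features
    ]


# Toy 2-D "features" for five points and two user clicks.
features = [(0.0, 0.1), (0.2, 0.0), (5.0, 5.1), (4.9, 5.0), (0.1, 0.2)]
clicks = {0: "floor", 2: "chair"}

prototypes = build_prototypes(features, clicks)
labels = segment(features, prototypes)
```

Adding a click for a class that has no prototype yet creates a new entry in `prototypes`, which is one simple way to see how sparse annotations can extend labeling to a novel class without retraining.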

Refining Segmentation On-the-Fly: An Interactive Framework for Point Cloud Semantic Segmentation

Mar 11, 2024

Abstract: Existing interactive point cloud segmentation approaches primarily focus on object segmentation, which aims to determine which points belong to the object of interest guided by user interactions. This paper concentrates on an unexplored yet meaningful task, i.e., interactive point cloud semantic segmentation, which assigns high-quality semantic labels to all points in a scene with user corrective clicks. Concretely, we present the first interactive framework for point cloud semantic segmentation, named InterPCSeg, which seamlessly integrates with off-the-shelf semantic segmentation networks without offline re-training, enabling it to run in an on-the-fly manner. To achieve online refinement, we treat user interactions as sparse training examples at test time. To address the instability caused by the sparse supervision, we design a stabilization energy to regulate the test-time training process. For objective and reproducible evaluation, we develop an interaction simulation scheme tailored for the interactive point cloud semantic segmentation task. We evaluate our framework on the S3DIS and ScanNet datasets with off-the-shelf segmentation networks, incorporating interactions from both the proposed interaction simulator and real users. Quantitative and qualitative experimental results demonstrate the efficacy of our framework in refining the semantic segmentation results with user interactions. The source code will be publicly available.

AU-PD: An Arbitrary-size and Uniform Downsampling Framework for Point Clouds

Nov 02, 2022

Abstract: Point cloud downsampling is a crucial pre-processing operation that reduces the number of points in a point cloud in order to lower computational cost and communication load, among other benefits. Recent research on point cloud downsampling, which concentrates on learning to sample in a task-aware way, has achieved great success. However, existing learnable samplers cannot perform arbitrary-size sampling directly. Moreover, their sampled results always comprise many overlapping points. In this paper, we introduce AU-PD, a novel task-aware sampling framework that directly downsamples a point cloud to any smaller size based on a sample-to-refine strategy. Given a specified arbitrary size, we first perform task-agnostic pre-sampling to sample the input point cloud. Then, we refine the pre-sampled set to make it task-aware, driven by downstream task losses. The refinement is realized by adding to each pre-sampled point a small offset predicted by point-wise multi-layer perceptrons (MLPs). In this way, the sampled set remains almost unchanged from the original in distribution, and therefore contains fewer overlapping cases. With the attention mechanism and a proper training scheme, the framework learns to adaptively refine pre-sampled sets of different sizes. We evaluate sampled results on classification and registration tasks. The proposed AU-PD achieves downstream performance competitive with the state-of-the-art method while being more flexible and containing fewer overlapping points in the sampled set. The source code will be publicly available at https://zhiyongsu.github.io/Project/AUPD.html.
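
The sample-to-refine strategy can be illustrated with a short sketch in plain Python. Two assumptions are made here that the abstract does not state: farthest point sampling stands in for the task-agnostic pre-sampling step, and a hand-written `toy_offset` function stands in for the learned point-wise MLP that predicts offsets. All function names are hypothetical.

```python
from math import dist


def farthest_point_sampling(points, m):
    """Greedily pick m points, each farthest from those already chosen.

    Assumption: used here as one possible task-agnostic pre-sampler.
    """
    if not 1 <= m <= len(points):
        raise ValueError("m must be between 1 and len(points)")
    chosen = [0]  # arbitrary seed: start from the first point
    # Distance from every point to its nearest chosen point so far.
    min_d = [dist(p, points[0]) for p in points]
    while len(chosen) < m:
        nxt = max(range(len(points)), key=min_d.__getitem__)
        chosen.append(nxt)
        min_d = [min(d, dist(p, points[nxt])) for d, p in zip(min_d, points)]
    return [points[i] for i in chosen]


def refine(sampled, predict_offset):
    """Shift each pre-sampled point by a small predicted offset."""
    return [
        tuple(c + o for c, o in zip(p, predict_offset(p)))
        for p in sampled
    ]


def toy_offset(p, scale=0.01):
    # Placeholder for a learned MLP: nudge the point slightly toward the origin.
    return tuple(-scale * c for c in p)


cloud = [
    (0.0, 0.0, 0.0),
    (1.0, 0.0, 0.0),
    (0.0, 1.0, 0.0),
    (1.0, 1.0, 0.0),
    (0.5, 0.5, 0.0),
]
pre_sampled = farthest_point_sampling(cloud, 3)  # any size up to len(cloud)
refined = refine(pre_sampled, toy_offset)
```

Because the target size `m` is only an argument to the pre-sampler and the offsets are applied per point, the same refinement step works for any requested size, and small offsets keep the refined set close to the pre-sampled distribution.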

One-way Hash Function Based on Neural Network

Jul 27, 2007

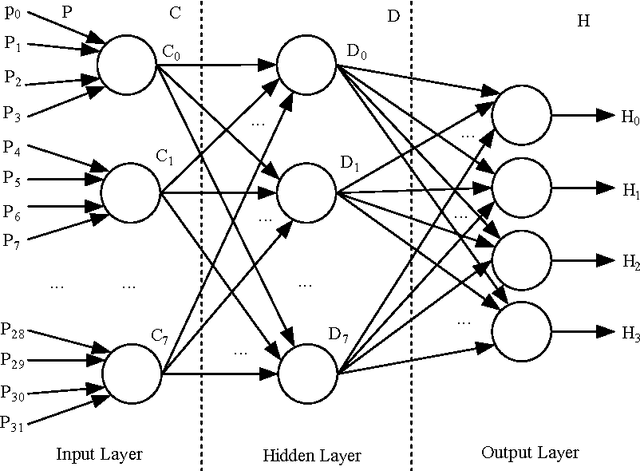

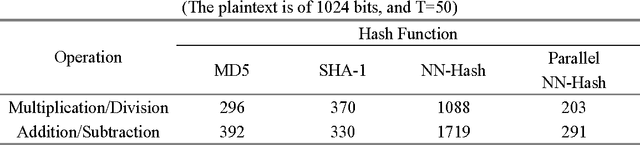

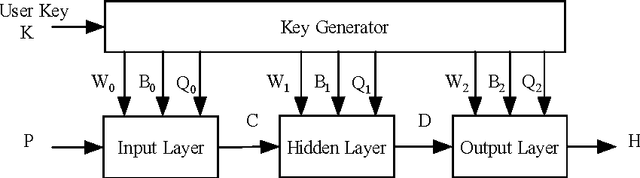

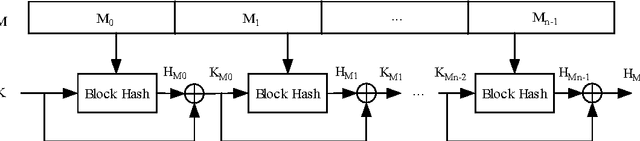

Abstract: A hash function is constructed based on a three-layer neural network. The three neuron layers are used to realize data confusion, diffusion, and compression, respectively, and a multi-block hash mode is presented to support variable-length plaintext. Theoretical analysis and experimental results show that this hash function is one-way, with high key sensitivity and plaintext sensitivity, and secure against birthday attacks and meet-in-the-middle attacks. Additionally, the parallel structure of the neural network makes the function practical to implement in parallel. These properties make it a suitable choice for data signature or authentication.
