Jingzhou Sun

Age of Information Guaranteed Scheduling for Asynchronous Status Updates in Collaborative Perception

Oct 07, 2023

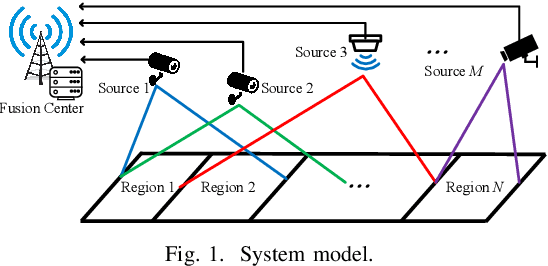

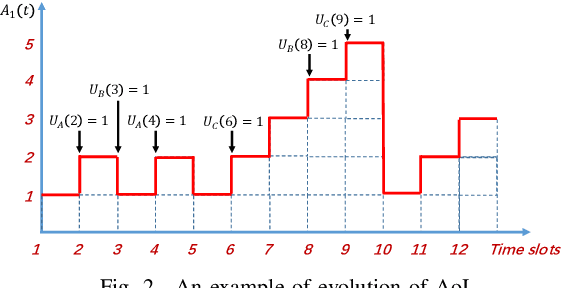

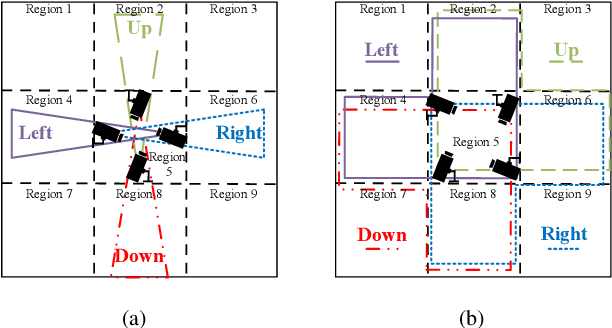

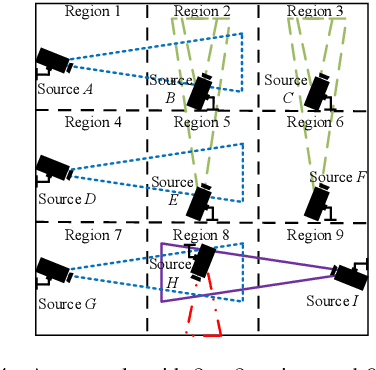

Abstract: We consider collaborative perception (CP) systems in which a fusion center monitors multiple regions through multiple sources. The center has different age of information (AoI) constraints for different regions. Multi-view sensing data for a region, generated by several sources, can be fused by the center into a reliable representation of that region. To ensure accurate perception, the differences between the generation times of the asynchronous status updates used for CP fusion should not exceed a certain threshold. An algorithm named scheduling for CP with asynchronous status updates (SCPA) is proposed to minimize the number of required channels subject to AoI constraints with asynchronous status updates. SCPA first identifies a set of sources that can satisfy the constraints with minimum updating rates. It then chooses scheduling intervals and offsets for the sources such that the number of required channels is minimized. According to numerical results, the number of channels required by SCPA can be as little as 12% above a derived lower bound.
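To illustrate how scheduling intervals and offsets determine channel usage, here is a minimal sketch. It is not SCPA itself. It assumes slotted time, that each update occupies one channel for exactly one slot, and that a source with interval `p` and offset `o` transmits in every slot `t` with `t % p == o % p`. The function name `channels_required` and this transmission model are assumptions made for illustration, not details taken from the paper.

```python
from math import lcm


def channels_required(schedule):
    """Peak number of simultaneous transmissions for a periodic schedule.

    schedule: list of (interval, offset) pairs, both in slots (hypothetical model).
    """
    # The joint transmission pattern repeats every lcm of the intervals.
    hyperperiod = lcm(*(interval for interval, _ in schedule))
    load = [0] * hyperperiod
    for interval, offset in schedule:
        for slot in range(offset % interval, hyperperiod, interval):
            load[slot] += 1
    # One channel carries one update per slot, so the peak load is the channel count.
    return max(load)


aligned = [(2, 0), (2, 0)]            # both sources collide in every even slot
staggered = [(2, 0), (4, 1), (4, 3)]  # offsets chosen so that no two sources overlap
print(channels_required(aligned), channels_required(staggered))
```

Under these assumptions, shifting offsets alone can reduce the channel count without changing any source's update rate, which is the degree of freedom the second step of the abstract refers to.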

A Grouping-based Scheduler for Efficient Channel Utilization under Age of Information Constraints

Oct 07, 2023

Abstract: We consider a status information updating system in which a fusion center collects status information from a large number of sources, each of which has its own age of information (AoI) constraint. A novel grouping-based scheduler is proposed to solve this complex large-scale problem by dividing the sources into different scheduling groups. The problem is then transformed into deriving the optimal grouping scheme. A two-step grouping algorithm (TGA) is proposed: 1) Given the AoI constraints, we first identify the sources with harmonic AoI constraints, then design a fast grouping method and an optimal scheduler for these sources. Under harmonic AoI constraints, each constraint is divisible by the smallest one, and the sum of reciprocals of the constraints with the same value is divisible by the reciprocal of the smallest one. 2) For the other sources, which lack this special property, we pack the sources that can be scheduled together with minimum update rates into the same group. Simulations show that the channel usage of the proposed TGA is significantly lower than that of a recent work and is 0.42% larger than a derived lower bound when the number of sources is large.
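The harmonic condition stated in the abstract can be checked mechanically. The sketch below is a literal reading of that sentence, assuming the AoI constraints are positive integers (for example, counted in slots). The function name `is_harmonic` is hypothetical, and the paper's formal definition may differ in details not visible in the abstract.

```python
from collections import Counter
from fractions import Fraction


def is_harmonic(constraints):
    """Check the two divisibility conditions on a list of integer AoI constraints."""
    d_min = min(constraints)
    # Condition 1: every constraint is divisible by the smallest one.
    if any(d % d_min != 0 for d in constraints):
        return False
    unit = Fraction(1, d_min)  # reciprocal of the smallest constraint
    # Condition 2: for each distinct value d held by n sources, the sum of
    # reciprocals n/d must be an integer multiple of 1/d_min.
    for d, n in Counter(constraints).items():
        if (Fraction(n, d) / unit).denominator != 1:
            return False
    return True


print(is_harmonic([2, 4, 4]))  # two sources with constraint 4 sum to 1/2
print(is_harmonic([2, 4]))     # a single source with constraint 4 gives only 1/4
```

Under this reading, the second condition means that sources sharing a constraint value together fill a whole number of slots of the smallest period.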

SMDP-Based Dynamic Batching for Efficient Inference on GPU-Based Platforms

Jan 30, 2023

Abstract: In modern machine learning (ML) applications on cloud or edge computing platforms, batching is an important technique for providing efficient and economical services at scale. In particular, parallel computing resources on these platforms, such as graphics processing units (GPUs), have higher computational and energy efficiency with larger batch sizes. However, larger batch sizes may also result in longer response times, so the batching policy requires judicious design. This paper aims to provide a dynamic batching policy that strikes a balance between efficiency and latency. The GPU-based inference service is modeled as a batch service queue with batch-size dependent processing time. The design of dynamic batching is then a continuous-time average-cost problem, formulated as a semi-Markov decision process (SMDP) with the objective of minimizing the weighted sum of average response time and average power consumption. The optimal policy is obtained by solving an associated discrete-time Markov decision process (MDP) problem with finite state approximation and "discretization". By introducing an abstract cost to reflect the impact of "tail" states, the space complexity and the time complexity of the procedure can be reduced by 63.5% and 98%, respectively. Our results show that the optimal policies potentially possess a control limit structure. Numerical results also show that SMDP-based batching policies can adapt to different traffic intensities and outperform other benchmark policies. Furthermore, the proposed solution has notable flexibility in balancing power consumption and latency.
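The trade-off described above can be made concrete with a small sketch. It does not solve the SMDP. It assumes an affine processing-time model (`base + per_item * b`, with made-up coefficients) and one common reading of a control limit policy: wait while the queue is shorter than a threshold, otherwise serve as many requests as the maximum batch size allows. All names and numbers here are hypothetical.

```python
def service_time(batch_size, base=2.0, per_item=0.5):
    """Assumed batch-size dependent processing time (arbitrary time units)."""
    return base + per_item * batch_size


def per_request_time(batch_size):
    """Processing time amortized over the batch; smaller means more efficient."""
    return service_time(batch_size) / batch_size


def control_limit_action(queue_len, threshold, max_batch):
    """Return the batch size to launch now; 0 means keep waiting."""
    if queue_len < threshold:
        return 0
    return min(queue_len, max_batch)


for b in (1, 4, 8):
    print(b, service_time(b), per_request_time(b))
print(control_limit_action(queue_len=3, threshold=4, max_batch=8))
print(control_limit_action(queue_len=20, threshold=4, max_batch=8))
```

Under the assumed model, larger batches lower the amortized time per request but raise the time each batch takes, which is the tension a dynamic batching policy has to balance.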
