Jingyuan Hu

V-Zero: Self-Improving Multimodal Reasoning with Zero Annotation

Jan 15, 2026

Abstract: Recent advances in multimodal learning have significantly enhanced the reasoning capabilities of vision-language models (VLMs). However, state-of-the-art approaches rely heavily on large-scale human-annotated datasets, which are costly and time-consuming to acquire. To overcome this limitation, we introduce V-Zero, a general post-training framework that facilitates self-improvement using exclusively unlabeled images. V-Zero establishes a co-evolutionary loop by instantiating two distinct roles: a Questioner and a Solver. The Questioner learns to synthesize high-quality, challenging questions by leveraging a dual-track reasoning reward that contrasts intuitive guesses with reasoned results. The Solver is optimized using pseudo-labels derived from majority voting over its own sampled responses. Both roles are trained iteratively via Group Relative Policy Optimization (GRPO), driving a cycle of mutual enhancement. Remarkably, without a single human annotation, V-Zero achieves consistent performance gains on Qwen2.5-VL-7B-Instruct, improving visual mathematical reasoning by +1.7 and general vision-centric performance by +2.6, demonstrating the potential of self-improvement in multimodal systems. Code is available at https://github.com/SatonoDia/V-Zero
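The Solver's training signal described above (majority-vote pseudo-labels, then group-relative optimization) can be illustrated with a minimal sketch. This is not the V-Zero implementation: the function names, the string normalization, the 0/1 reward, and the mean/standard-deviation normalization of rewards within a group are all assumptions made for illustration.

```python
from collections import Counter
from statistics import mean, pstdev


def _normalize(answer):
    # Assumption: answers are compared as stripped, lower-cased strings.
    return answer.strip().lower()


def majority_vote(answers):
    """Return (pseudo_label, agreement_ratio) for one group of sampled answers."""
    if not answers:
        raise ValueError("need at least one sampled answer")
    counts = Counter(_normalize(a) for a in answers)
    label, votes = counts.most_common(1)[0]
    return label, votes / len(answers)


def pseudo_label_rewards(answers):
    """Hypothetical 0/1 reward: 1.0 if a sample agrees with the majority answer."""
    label, _ = majority_vote(answers)
    return [1.0 if _normalize(a) == label else 0.0 for a in answers]


def group_relative_advantages(rewards, eps=1e-6):
    """Center and scale rewards within one sampled group (GRPO-style, assumed form)."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


if __name__ == "__main__":
    samples = ["A", "b", "a", "C"]  # four sampled answers to one question
    rewards = pseudo_label_rewards(samples)
    print(majority_vote(samples), rewards, group_relative_advantages(rewards))
```

Samples that agree with the majority receive a positive advantage and the rest a negative one; when every sample agrees, all advantages are zero and the group contributes no gradient signal.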

AgentLens: Visual Analysis for Agent Behaviors in LLM-based Autonomous Systems

Feb 14, 2024

Abstract: Recently, Large Language Model based Autonomous Systems (LLMAS) have gained great popularity for their potential to simulate complicated behaviors of human societies. One of the main challenges is to present and analyze the dynamic evolution of events in LLMAS. In this work, we present a visualization approach to explore detailed statuses and agents' behaviors within LLMAS. We propose a general pipeline that establishes a behavior structure from raw LLMAS execution events, leverages a behavior summarization algorithm to construct a hierarchical summary of the entire structure in terms of time sequence, and applies a cause trace method to mine the causal relationships between agent behaviors. We then develop AgentLens, a visual analysis system that leverages a hierarchical temporal visualization to illustrate the evolution of LLMAS and supports users in interactively investigating the details and causes of agents' behaviors. Two usage scenarios and a user study demonstrate the effectiveness and usability of AgentLens.

Emoji-aware Co-attention Network with EmoGraph2vec Model for Sentiment Analysis

Oct 27, 2021

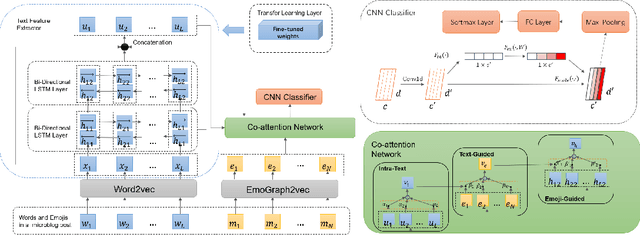

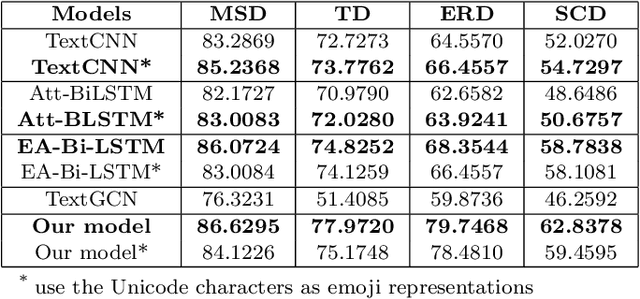

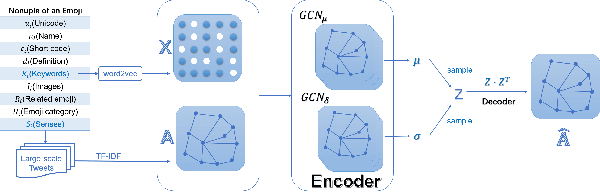

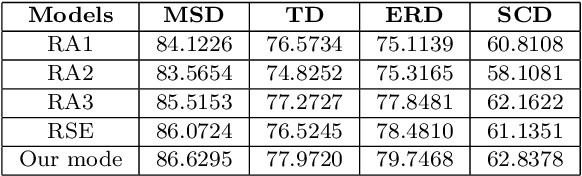

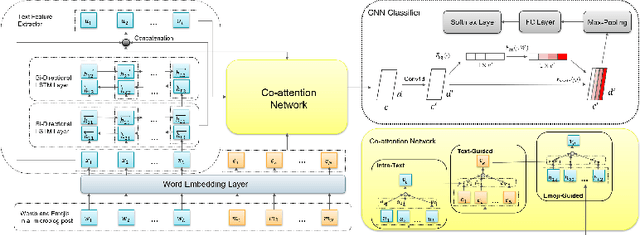

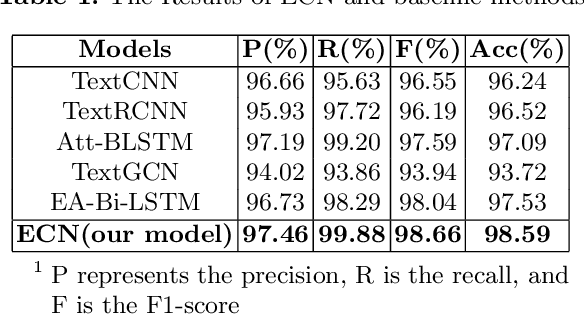

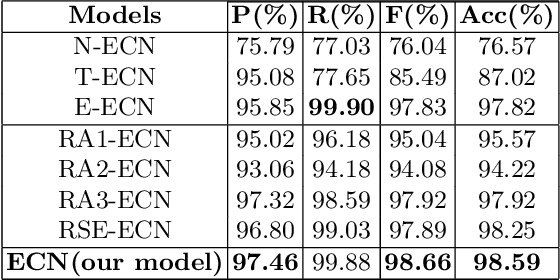

Abstract: On social media platforms, emojis occur extremely frequently in computer-mediated communication. Many emojis are used to strengthen emotional expression, and emojis that co-occur in a sentence also have a strong sentiment connection. However, when it comes to emoji representation learning, most studies have only utilized the fixed descriptions provided by the Unicode Consortium, without considering actual usage scenarios. As for the sentiment analysis task, many researchers ignore the emotional impact of the interaction between text and emojis. As a result, the emotional semantics of emojis cannot be fully explored. In this work, we propose a method to learn emoji representations called EmoGraph2vec and design an emoji-aware co-attention network that learns the mutual emotional semantics between text and emojis on short texts of social media. In EmoGraph2vec, we form an emoji co-occurrence network on real social data and enrich the semantic information based on an external knowledge base, EmojiNet, to obtain emoji node embeddings. Our model designs a co-attention mechanism to incorporate the text and emojis, and integrates a squeeze-and-excitation (SE) block into a convolutional neural network as a classifier. Finally, we use transfer learning to speed up convergence and achieve higher accuracy. Experimental results show that the proposed model can outperform several baselines for sentiment analysis on benchmark datasets. Additionally, we conduct a series of ablation and comparison experiments to investigate the effectiveness of our model.
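As a rough illustration of the emoji co-occurrence network mentioned in this abstract, the sketch below counts how often two emojis appear in the same post. It is an assumption-laden toy, not the EmoGraph2vec pipeline: the function name, the use of shortcode strings, and the choice to count each unordered pair once per post are all hypothetical, and the EmojiNet enrichment and embedding steps are not covered.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_edges(posts_emojis):
    """Count, for each unordered emoji pair, the number of posts containing both.

    posts_emojis: iterable of lists, one list of emoji tokens per post.
    Returns a Counter mapping (emoji_a, emoji_b) with emoji_a < emoji_b to a weight.
    """
    edges = Counter()
    for emojis in posts_emojis:
        # De-duplicate within a post so repeated emojis do not inflate the weight.
        unique = sorted(set(emojis))
        for a, b in combinations(unique, 2):
            edges[(a, b)] += 1
    return edges


if __name__ == "__main__":
    posts = [
        [":joy:", ":heart:", ":joy:"],
        [":joy:", ":heart:"],
        [":fire:"],
    ]
    for pair, weight in cooccurrence_edges(posts).items():
        print(pair, weight)
```

The resulting weighted edge list could be handed to any graph-embedding method to produce node vectors; which method the authors use is not stated in the abstract.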

Emoji-based Co-attention Network for Microblog Sentiment Analysis

Oct 27, 2021

Abstract: Emojis are widely used in online social networks to express emotions, attitudes, and opinions. As emotion-oriented characters, emojis can be modeled as important features of emotions towards the recipient or subject for sentiment analysis. However, existing methods mainly treat emojis as heuristic information, which fails to resolve the problem of ambiguity noise. Recent studies have utilized emojis as an independent input to classify text sentiment, but they ignore the emotional impact of the interaction between text and emojis. As a result, the emotional semantics of emojis cannot be fully explored. In this paper, we propose an emoji-based co-attention network that learns the mutual emotional semantics between text and emojis on microblogs. Our model adopts a co-attention mechanism based on bidirectional long short-term memory to incorporate the text and emojis, and integrates a squeeze-and-excitation block into a convolutional neural network classifier to increase its sensitivity to emotional semantic features. Experimental results show that the proposed method can significantly outperform several baselines for sentiment analysis on short texts of social media.
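For readers unfamiliar with the squeeze-and-excitation block named in this abstract, here is a minimal dependency-free sketch of the general technique on a 1D feature map. The weight matrices, the omission of biases, and the function name are assumptions for illustration; the abstract does not specify how the block is configured in the authors' classifier.

```python
import math


def se_block(features, w_reduce, w_expand):
    """Apply a squeeze-and-excitation gate to a 1D feature map.

    features: C channels, each a list of activations along the sequence.
    w_reduce: R x C weights of the bottleneck layer (biases omitted, assumed).
    w_expand: C x R weights of the expansion layer (biases omitted, assumed).
    Returns (rescaled_features, channel_gates).
    """
    # Squeeze: global average pooling turns each channel into one number.
    squeezed = [sum(ch) / len(ch) for ch in features]
    # Excitation, step 1: bottleneck fully connected layer followed by ReLU.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w_reduce]
    # Excitation, step 2: expansion layer followed by a sigmoid gate per channel.
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w_expand]
    # Scale: multiply every activation in a channel by that channel's gate.
    scaled = [[g * x for x in ch] for g, ch in zip(gates, features)]
    return scaled, gates


if __name__ == "__main__":
    feats = [[1.0, 3.0], [0.5, 0.5]]   # 2 channels, sequence length 2
    w_r = [[1.0, -1.0]]                # reduce 2 channels to 1 hidden unit
    w_e = [[2.0], [-2.0]]              # expand back to 2 channel gates
    print(se_block(feats, w_r, w_e))
```

Channels whose gate is close to 1 pass through almost unchanged, while channels with a gate near 0 are suppressed, which is how the block reweights feature channels.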
