Jingnan Jia

Prediction of Lung CT Scores of Systemic Sclerosis by Cascaded Regression Neural Networks

Oct 15, 2021

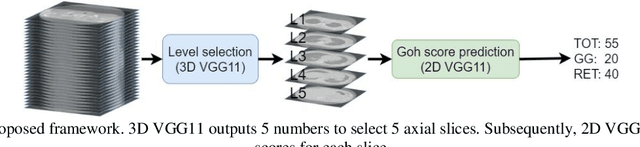

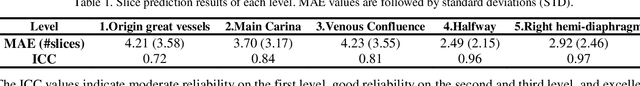

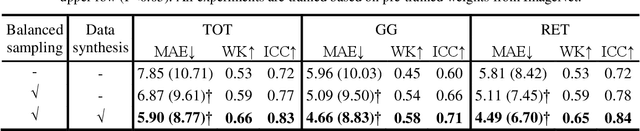

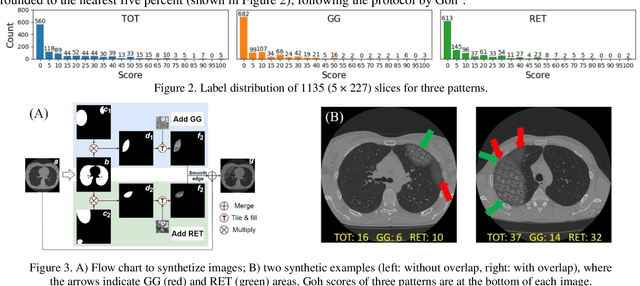

Abstract: Visually scoring lung involvement in systemic sclerosis (SSc) from CT scans plays an important role in monitoring progression, but its labor intensiveness hinders practical application. We therefore proposed an automatic scoring framework that consists of two cascaded deep regression neural networks. The first (3D) network predicts the craniocaudal position of five anatomically defined scoring levels on the 3D CT scans. The second (2D) network receives the resulting 2D axial slices and predicts the scores. We used 227 3D CT scans to train and validate the first network, and the resulting 1135 axial slices were used in the second network. Two experts independently scored a subset of the data to obtain intra- and interobserver variabilities, and the ground truth for all data was obtained in consensus. To alleviate the imbalance in training labels in the second network, we introduced a sampling technique, and to increase the diversity of the training samples, we generated synthetic data mimicking ground glass and reticulation patterns. The 4-fold cross validation showed that our proposed network achieved an average MAE of 5.90, 4.66 and 4.49 and a weighted kappa of 0.66, 0.58 and 0.65 for total score (TOT), ground glass (GG) and reticular pattern (RET), respectively. Our network performed slightly worse than the best experts on TOT and GG prediction, but it has competitive performance on RET prediction and has the potential to be an objective alternative for the visual scoring of SSc in CT thorax studies.
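The abstract above reports MAE and weighted kappa as its two evaluation metrics. As a rough illustration of what those numbers measure, the sketch below computes both for a list of ordinal scores. It is not the authors' evaluation code: the abstract does not say which kappa weighting was used, so linear weights are an assumption here, and the function names and the score grid passed as `categories` are hypothetical.

```python
def mean_absolute_error(truth, pred):
    """Average absolute difference between reference and predicted scores."""
    return sum(abs(t - p) for t, p in zip(truth, pred)) / len(truth)


def weighted_kappa(truth, pred, categories):
    """Linearly weighted Cohen's kappa over an ordered list of categories.

    Assumption: linear weights |i - j| / (k - 1); the abstract does not
    state the weighting scheme. Requires at least two categories and
    ratings that are not all concentrated in a single category.
    """
    k = len(categories)
    index = {c: i for i, c in enumerate(categories)}
    n = len(truth)

    # Observed joint distribution of (reference, prediction) pairs.
    observed = [[0.0] * k for _ in range(k)]
    for t, p in zip(truth, pred):
        observed[index[t]][index[p]] += 1.0 / n

    # Marginals give the agreement expected by chance.
    row = [sum(observed[i]) for i in range(k)]
    col = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    observed_disagreement = 0.0
    expected_disagreement = 0.0
    for i in range(k):
        for j in range(k):
            w = abs(i - j) / (k - 1)
            observed_disagreement += w * observed[i][j]
            expected_disagreement += w * row[i] * col[j]
    return 1.0 - observed_disagreement / expected_disagreement


# Hypothetical scores on a 0/5/10 grid, for illustration only.
grid = [0, 5, 10]
reference = [0, 5, 10, 5]
predicted = [0, 5, 5, 5]
print(mean_absolute_error(reference, predicted))
print(weighted_kappa(reference, predicted, grid))
```

Unlike MAE, the weighted kappa corrects for chance agreement, which is why the two metrics can rank methods differently.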

Multi-task Semi-supervised Learning for Pulmonary Lobe Segmentation

Apr 22, 2021

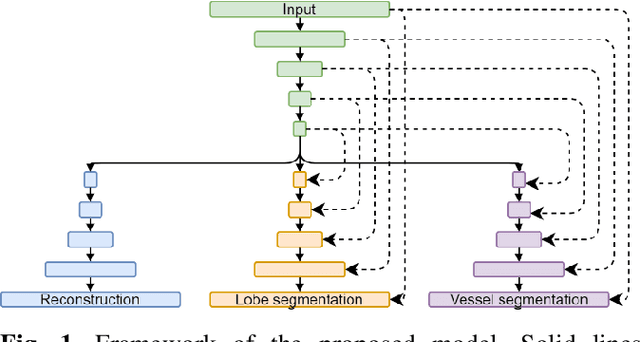

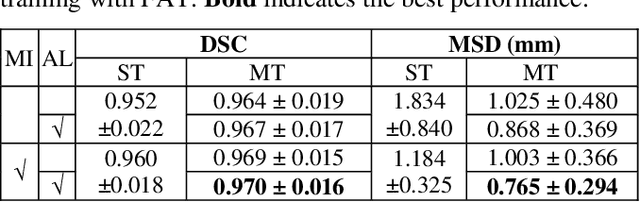

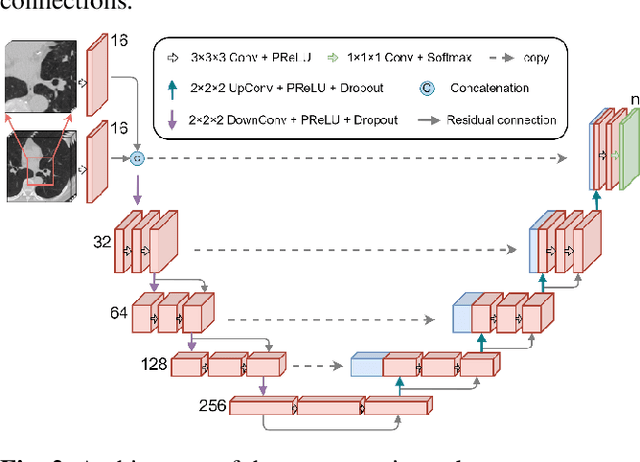

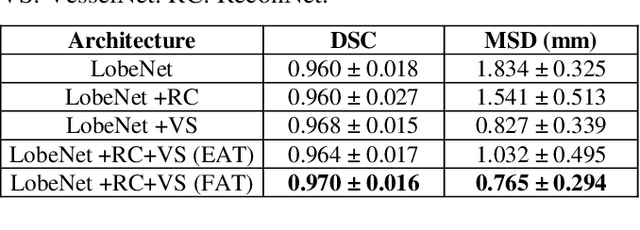

Abstract: Pulmonary lobe segmentation is an important preprocessing task for the analysis of lung diseases. Traditional methods relying on fissure detection or other anatomical features, such as the distribution of pulmonary vessels and airways, can provide reasonably accurate lobe segmentations. Deep learning based methods can outperform these traditional approaches, but require large datasets. Deep multi-task learning is expected to utilize labels of multiple different structures. However, such labels are commonly distributed over multiple datasets. In this paper, we proposed a multi-task semi-supervised model that can leverage information about multiple structures from unannotated datasets and datasets annotated with different structures. A focused alternating training strategy is presented to balance the different tasks. We evaluated the trained model on an external independent CT dataset. The results show that our model significantly outperforms single-task alternatives, reducing the mean surface distance from 7.174 mm to 4.196 mm. We also demonstrated that our approach is successful for different network architectures as backbones.
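The headline result above is stated as a mean surface distance in millimetres. The sketch below shows one common way to compute such a value from two sets of surface points. It is an assumption, not the paper's evaluation code: definitions of mean surface distance vary, this version pools the nearest-neighbour distances from both directions, and the function names are hypothetical. The brute-force search is only suitable for small point sets.

```python
import math


def directed_distances(source, target):
    """Distance from each source point to its nearest target point."""
    return [min(math.dist(s, t) for t in target) for s in source]


def mean_surface_distance(surface_a, surface_b):
    """Symmetric mean surface distance between two non-empty point sets.

    Assumption: nearest-neighbour distances from both directions are
    pooled and averaged. Points are (x, y, z) tuples in millimetres.
    """
    a_to_b = directed_distances(surface_a, surface_b)
    b_to_a = directed_distances(surface_b, surface_a)
    return (sum(a_to_b) + sum(b_to_a)) / (len(a_to_b) + len(b_to_a))


# Hypothetical surface points, for illustration only.
predicted_surface = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
reference_surface = [(0.0, 0.0, 0.0)]
print(mean_surface_distance(predicted_surface, reference_surface))
```

A lower value means the predicted lobe boundary lies closer to the reference boundary on average.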

A Scalable Learned Index Scheme in Storage Systems

May 08, 2019

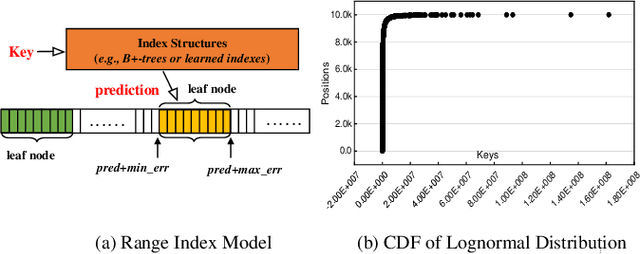

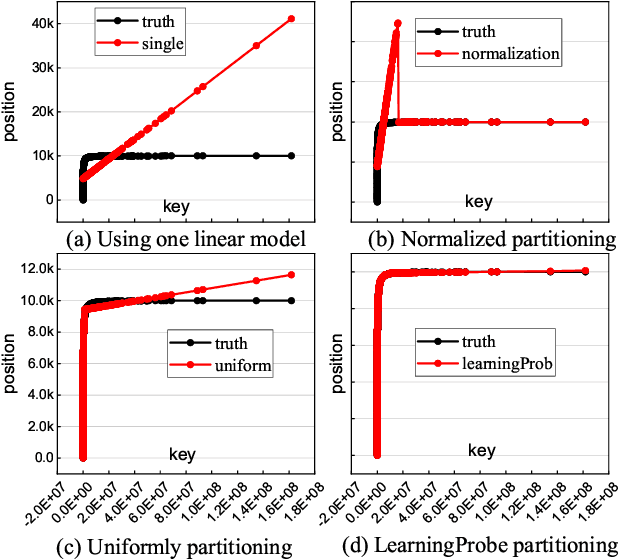

Abstract: Index structures are important for efficient data access and have been widely used to improve performance in many in-memory systems. Due to their high in-memory overheads, traditional index structures struggle to handle the explosive growth of data, let alone provide low latency and high throughput with limited system resources. The promising learned indexes leverage deep-learning models to complement existing index structures and obtain significant memory savings. However, learned indexes fail to scale due to heavy inter-model dependency and expensive retraining. To address these problems, we propose AIDEL, a scalable learned index scheme that constructs different linear regression models according to the data distribution. Moreover, the models are independent of each other, which reduces the complexity of retraining and makes it easy to partition and store the data across different pages, blocks or distributed systems. Our experimental results show that, compared with state-of-the-art schemes, AIDEL improves insertion performance by about 2$\times$ and provides comparable lookup performance, while efficiently supporting scalability.
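The idea of independent linear regression models that each cover part of the key space can be illustrated with a small sketch. This is not AIDEL itself: the abstract says models are built according to the data distribution, whereas this sketch simply cuts the sorted keys into fixed-size segments, and it handles inserts by refitting the one affected segment. The class names, `segment_size`, and the insert strategy are all assumptions made for illustration.

```python
import bisect


class Segment:
    """A run of sorted keys with its own linear model: key -> offset."""

    def __init__(self, keys):
        self.keys = list(keys)
        self.fit()

    def fit(self):
        # Ordinary least squares of offset on key, plus the worst-case error.
        n = len(self.keys)
        mean_key = sum(self.keys) / n
        mean_pos = (n - 1) / 2
        var = sum((k - mean_key) ** 2 for k in self.keys)
        cov = sum((k - mean_key) * (i - mean_pos) for i, k in enumerate(self.keys))
        self.slope = cov / var if var else 0.0
        self.intercept = mean_pos - self.slope * mean_key
        self.max_err = max(abs(self.predict(k) - i) for i, k in enumerate(self.keys))

    def predict(self, key):
        return round(self.slope * key + self.intercept)

    def find(self, key):
        # Search only the window guaranteed by the recorded model error.
        n = len(self.keys)
        guess = self.predict(key)
        lo = min(max(0, guess - self.max_err), n)
        hi = max(lo, min(n, guess + self.max_err + 1))
        i = bisect.bisect_left(self.keys, key, lo, hi)
        return i if i < hi and self.keys[i] == key else None


class LearnedIndex:
    """Independent segments; changing one never touches the others."""

    def __init__(self, sorted_keys, segment_size=4):
        # Assumes a non-empty, sorted list of numeric keys.
        self.segments = [
            Segment(sorted_keys[i:i + segment_size])
            for i in range(0, len(sorted_keys), segment_size)
        ]
        self.first_keys = [s.keys[0] for s in self.segments]

    def lookup(self, key):
        s = bisect.bisect_right(self.first_keys, key) - 1
        if s < 0:
            return None
        offset = self.segments[s].find(key)
        return None if offset is None else (s, offset)

    def insert(self, key):
        s = max(0, bisect.bisect_right(self.first_keys, key) - 1)
        segment = self.segments[s]
        bisect.insort(segment.keys, key)
        segment.fit()  # only this segment's model is retrained
        self.first_keys[s] = segment.keys[0]


index = LearnedIndex([1, 3, 7, 8, 20, 21, 40, 100, 101])
print(index.lookup(20))
index.insert(5)
print(index.lookup(5))
```

Because each segment stores its own model and error bound, a segment could in principle be placed on a separate page, block or machine without consulting its neighbours, which is the property the abstract attributes to independent models.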
