Jibin Jia

Pruned Convolutional Attention Network Based Wideband Spectrum Sensing with Sub-Nyquist Sampling

Nov 30, 2024

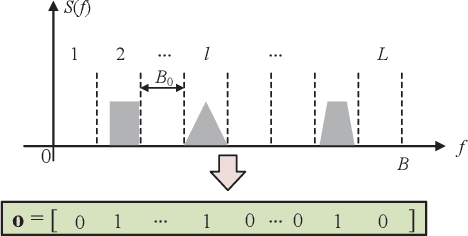

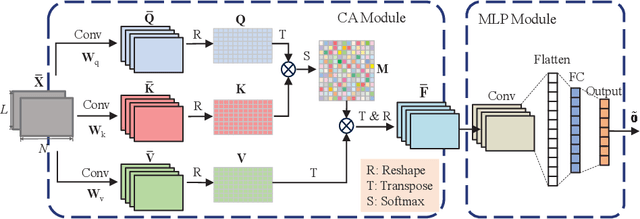

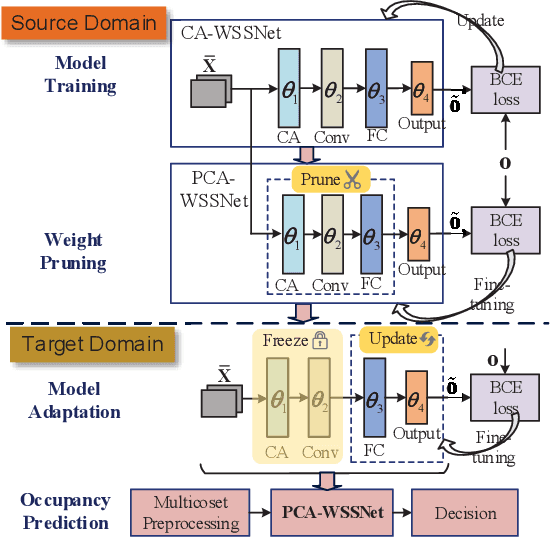

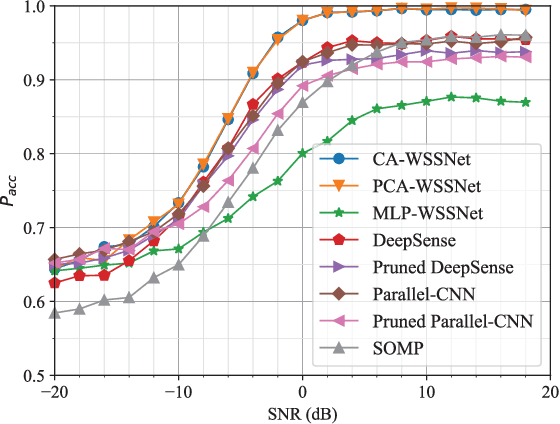

Abstract: Wideband spectrum sensing (WSS) is critical for orchestrating multitudinous wireless transmissions via spectrum sharing, but it may incur excessive hardware, power, and computation costs due to the high sampling rate. In this article, a deep learning based WSS framework embedding multicoset preprocessing is proposed to enable low-cost sub-Nyquist sampling. A pruned convolutional attention WSS network (PCA-WSSNet) is designed to organically integrate the multicoset preprocessing with the convolutional attention mechanism, and to reduce the model complexity remarkably via selective weight pruning without performance loss. Furthermore, a transfer learning (TL) strategy that benefits from the model pruning is developed to improve the robustness of PCA-WSSNet using only a few adaptation samples from new scenarios. Simulation results show that PCA-WSSNet outperforms state-of-the-art methods. Compared with direct TL, the pruned TL strategy simultaneously improves the prediction accuracy in unseen scenarios, reduces the model size, and accelerates model inference.
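The abstract relies on multicoset sub-Nyquist sampling but does not state its parameters. As a minimal sketch, assuming a Nyquist-rate sample list `x`, a hypothetical coset period `L`, and a hypothetical set of distinct offsets `c_i` in `[0, L)`, coset i keeps only the samples x[mL + c_i], so p cosets together run at an average rate of p/L of the Nyquist rate. The function names are illustrative and not taken from the paper, and the subsequent preprocessing and network stages of PCA-WSSNet are not reproduced here.

```python
def multicoset_sample(x, L, offsets):
    """Split a Nyquist-rate sequence into multicoset branches.

    Coset i holds x[m*L + offsets[i]] for m = 0..M-1, where M is the
    number of complete length-L periods in x. L and offsets are
    illustrative assumptions, not values from the paper.
    """
    if any(not 0 <= c < L for c in offsets):
        raise ValueError("offsets must lie in [0, L)")
    if len(set(offsets)) != len(offsets):
        raise ValueError("offsets must be distinct")
    M = len(x) // L  # number of complete periods; a trailing partial period is dropped
    # One row per coset: stacking the rows gives a p x M array of samples
    return [[x[m * L + c] for m in range(M)] for c in offsets]


def average_rate_fraction(L, offsets):
    """Average sampling rate of all cosets as a fraction of the Nyquist rate."""
    return len(offsets) / L


if __name__ == "__main__":
    x = list(range(20))  # stand-in for 20 Nyquist-rate samples
    print(multicoset_sample(x, 5, [0, 2, 3]))
    print(average_rate_fraction(5, [0, 2, 3]))
```

With L = 5 and three offsets, for instance, each coset runs at one fifth of the Nyquist rate and the combined average rate is 3/5 of it, which is where the hardware and power savings of sub-Nyquist sampling come from.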

Federated Transfer Learning Based Cooperative Wideband Spectrum Sensing with Model Pruning

Sep 09, 2024

Abstract: For ultra-wideband and high-rate wireless communication systems, wideband spectrum sensing (WSS) is critical, since it empowers secondary users (SUs) to capture spectrum holes for opportunistic transmission. However, WSS faces challenges such as excessive hardware and computation costs due to the high sampling rate, as well as robustness issues arising from scenario mismatch. In this paper, a WSS neural network (WSSNet) is proposed that exploits multicoset preprocessing to enable sub-Nyquist sampling, with a two-dimensional convolution design specifically tailored to the preprocessed samples. A federated transfer learning (FTL) based framework that mobilizes multiple SUs is further developed to obtain a robust model adaptable to various scenarios, with selective weight pruning paving the way for fast model adaptation and inference. Simulation results demonstrate that the proposed FTL-WSSNet achieves fairly good performance in different target scenarios, even without local adaptation samples.
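The abstract does not specify the pruning criterion or how the SUs' models are aggregated. The sketch assumes magnitude-based pruning (the smallest-|w| fraction of weights is zeroed) and FedAvg-style averaging weighted by each SU's local sample count; both are stand-ins rather than the paper's confirmed method, and every function name here is hypothetical. Weights are flattened into plain lists to keep the example self-contained.

```python
def magnitude_prune_mask(weights, sparsity):
    """Return a 0/1 mask that zeroes the smallest-magnitude fraction of weights.

    Magnitude pruning is an assumed stand-in for "selective weight pruning".
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices sorted by ascending |w|; the first n_prune are pruned
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    mask = [1] * len(weights)
    for i in order[:n_prune]:
        mask[i] = 0
    return mask


def apply_mask(weights, mask):
    """Element-wise product of weights and a 0/1 mask."""
    return [w * m for w, m in zip(weights, mask)]


def fedavg(client_weights, client_sizes):
    """Average client weight vectors, weighted by each client's sample count."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(dim)
    ]


if __name__ == "__main__":
    global_w = [0.9, -0.05, 0.4, 0.01, -0.7]
    mask = magnitude_prune_mask(global_w, 0.4)  # prune 2 of 5 weights
    # Two hypothetical SUs fine-tune the pruned model locally (values made up)
    su_a = apply_mask([0.8, 0.0, 0.5, 0.0, -0.6], mask)
    su_b = apply_mask([1.0, 0.0, 0.3, 0.0, -0.8], mask)
    new_global = apply_mask(fedavg([su_a, su_b], [100, 300]), mask)
    print(mask, new_global)
```

Re-applying the shared mask after each averaging round keeps the aggregated model as sparse as the local ones, which is one plausible way pruning could shorten both local adaptation and inference.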