Jiazhang Wang

3D Imaging of Complex Specular Surfaces by Fusing Polarimetric and Deflectometric Information

Jun 04, 2024

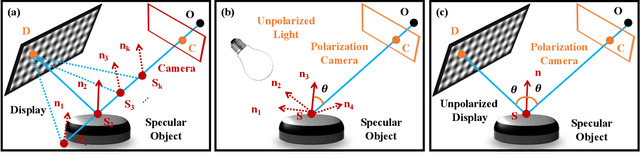

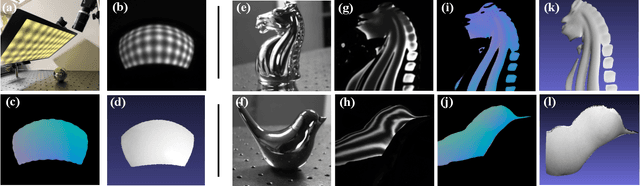

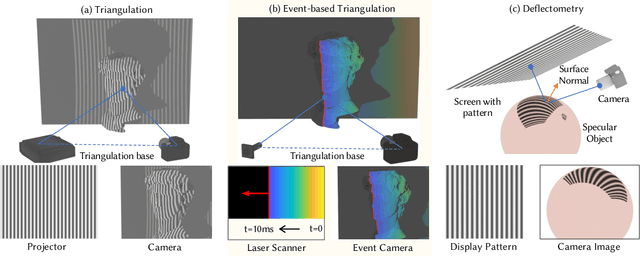

Abstract: Accurate and fast 3D imaging of specular surfaces still poses major challenges for state-of-the-art optical measurement principles. Frequently used methods, such as phase-measuring deflectometry (PMD) or shape-from-polarization (SfP), rely on strong assumptions about the measured objects, limiting their generalizability in broader application areas like medical imaging, industrial inspection, virtual reality, or cultural heritage analysis. In this paper, we introduce a measurement principle that utilizes a novel technique to effectively encode and decode the information contained in a light field reflected off a specular surface. We combine polarization cues from SfP with geometric information obtained from PMD to resolve all arising ambiguities in the 3D measurement. Moreover, our approach removes the unrealistic orthographic imaging assumption for SfP, which significantly improves the respective results. We showcase our new technique by demonstrating single-shot and multi-shot measurements on complex-shaped specular surfaces, displaying an evaluated accuracy of surface normals below $0.6^\circ$.
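As background for the polarization cues mentioned in the abstract, the following is a minimal sketch of the standard textbook relations for the degree and angle of linear polarization. It assumes four intensity measurements behind linear polarizers at 0°, 45°, 90°, and 135°; the function name `linear_polarization_cues` is hypothetical, and the snippet is not the paper's pipeline. The angle is only defined modulo $\pi$, which is one example of the kind of ambiguity that polarization measurements alone leave open.

```python
import numpy as np

def linear_polarization_cues(i0, i45, i90, i135):
    """Return (dolp, aolp) from intensities behind polarizers at 0/45/90/135 degrees.

    Assumption: ideal linear polarizers and no circular component.
    """
    i0, i45, i90, i135 = (np.asarray(x, dtype=float) for x in (i0, i45, i90, i135))
    # Linear Stokes parameters.
    s0 = 0.5 * (i0 + i45 + i90 + i135)  # total intensity
    s1 = i0 - i90
    s2 = i45 - i135
    # Degree of linear polarization, guarded against division by zero.
    dolp = np.sqrt(s1**2 + s2**2) / np.maximum(s0, 1e-12)
    # Angle of linear polarization in radians, defined modulo pi.
    aolp = 0.5 * np.arctan2(s2, s1)
    return dolp, aolp

# Fully polarized light aligned with the 0-degree polarizer.
dolp, aolp = linear_polarization_cues(1.0, 0.5, 0.0, 0.5)
```

The function also accepts NumPy arrays, so it can be applied per pixel to four polarization images of equal shape.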

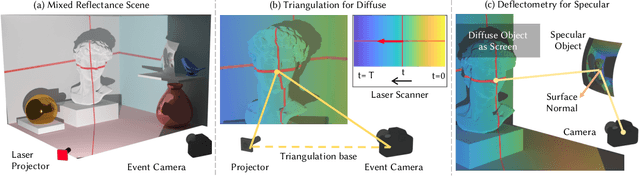

Event-based Motion-Robust Accurate Shape Estimation for Mixed Reflectance Scenes

Nov 16, 2023

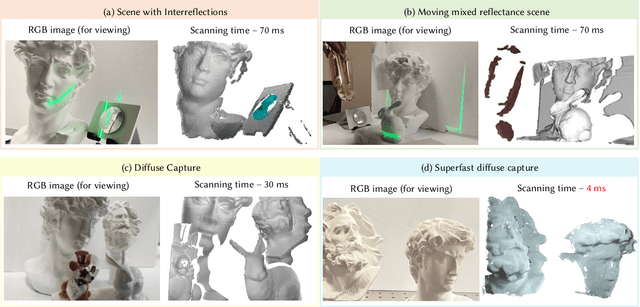

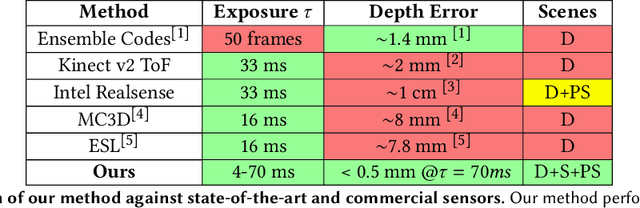

Abstract: Event-based structured light systems have recently been introduced as an exciting alternative to conventional frame-based triangulation systems for the 3D measurements of diffuse surfaces. Important benefits include the fast capture speed and the high dynamic range provided by the event camera, albeit at the cost of lower data quality. So far, both low-accuracy event-based and high-accuracy frame-based 3D imaging systems are tailored to a specific surface type, such as diffuse or specular, and cannot be used for a broader class of object surfaces ("mixed reflectance scenes"). In this paper, we present a novel event-based structured light system that enables fast 3D imaging of mixed reflectance scenes with high accuracy. On the captured events, we use epipolar constraints that intrinsically enable decomposing the measured reflections into diffuse, two-bounce specular, and other multi-bounce reflections. The diffuse objects in the scene are reconstructed using triangulation. Finally, the reconstructed diffuse scene parts are used as a "display" to evaluate the specular scene parts via deflectometry. This novel procedure allows us to use the entire scene as a virtual screen, using only a scanning laser and an event camera. The resulting system achieves fast and motion-robust (14 Hz) reconstructions of mixed reflectance scenes with < 500 $\mu$m accuracy. Moreover, we introduce a "superfast" capture mode (250 Hz) for the 3D measurement of diffuse scenes.

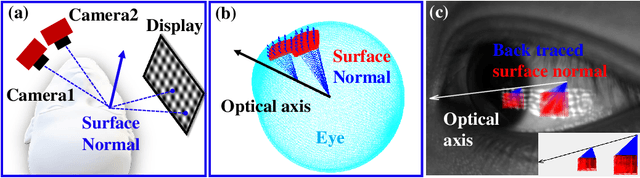

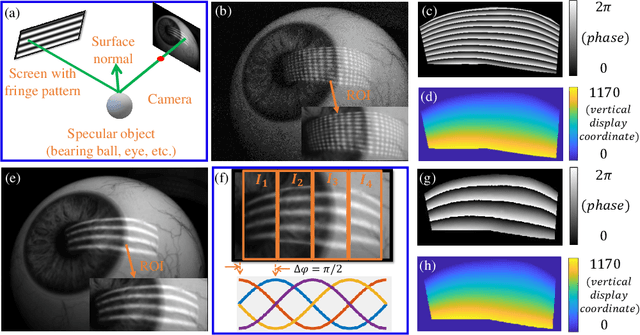

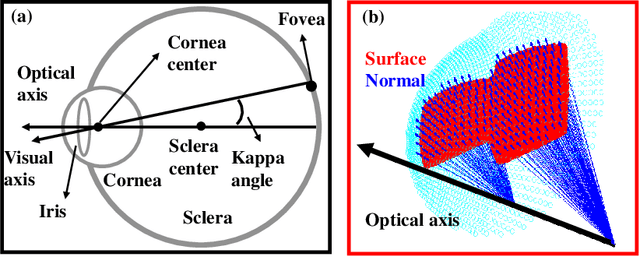

Accurate Eye Tracking from Dense 3D Surface Reconstructions using Single-Shot Deflectometry

Aug 15, 2023

Abstract: Eye tracking plays a crucial role in the development of virtual reality devices, neuroscience research, and psychology. Despite its significance in numerous applications, achieving an accurate, robust, and fast eye-tracking solution remains a considerable challenge for current state-of-the-art methods. While existing reflection-based techniques (e.g., "glint tracking") are considered the most accurate, their performance is limited by their reliance on sparse 3D surface data acquired solely from the cornea surface. In this paper, we rethink how specular reflections can be used for eye tracking: we propose a novel method for accurate and fast evaluation of the gaze direction that exploits teachings from single-shot phase-measuring deflectometry (PMD). In contrast to state-of-the-art reflection-based methods, our method acquires dense 3D surface information of both cornea and sclera within only one single camera frame (single-shot). Improvements in acquired reflection surface points ("glints") of factors $>3300 \times$ are easily achievable. We show the feasibility of our approach with experimentally evaluated gaze errors of only $\leq 0.25^\circ$, demonstrating a significant improvement over the current state-of-the-art.

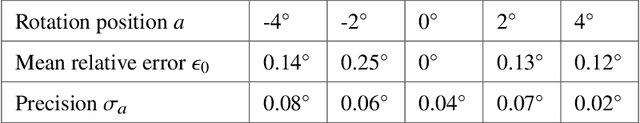

Optimization-Based Eye Tracking using Deflectometric Information

Mar 09, 2023

Abstract: Eye tracking is an important tool with a wide range of applications in Virtual, Augmented, and Mixed Reality (VR/AR/MR) technologies. State-of-the-art eye tracking methods are either reflection-based and track reflections of sparse point light sources, or image-based and exploit 2D features of the acquired eye image. In this work, we attempt to significantly improve reflection-based methods by utilizing pixel-dense deflectometric surface measurements in combination with optimization-based inverse rendering algorithms. Utilizing the known geometry of our deflectometric setup, we develop a differentiable rendering pipeline based on PyTorch3D that simulates a virtual eye under screen illumination. Finally, we exploit the image-screen-correspondence information from the captured measurements to find the eye's rotation, translation, and shape parameters with our renderer via gradient descent. In general, our method does not require a specific pattern and can work with ordinary video frames of the main VR/AR/MR screen itself. We demonstrate real-world experiments with evaluated mean relative gaze errors below 0.45 degrees at a precision better than 0.11 degrees. Moreover, we show an improvement of 6X over a representative reflection-based state-of-the-art method in simulation.
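The idea of recovering pose parameters from correspondences via gradient descent can be illustrated with a toy stand-in. The sketch below does not use PyTorch3D or any rendering; it assumes a 2D setting in which a single rotation angle is fitted so that rotated model points match observed points. All names (`rotation`, `fit_rotation`, the learning rate, and the step count) are hypothetical choices for illustration, not the authors' implementation.

```python
import numpy as np

def rotation(theta):
    """2D rotation matrix for angle theta (radians)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s], [s, c]])

def fit_rotation(points, observed, lr=0.1, steps=200):
    """Estimate theta by gradient descent on the mean squared correspondence error."""
    theta = 0.0  # initial guess
    for _ in range(steps):
        c, s = np.cos(theta), np.sin(theta)
        d_rot = np.array([[-s, -c], [c, -s]])  # derivative of rotation(theta) w.r.t. theta
        residual = points @ rotation(theta).T - observed
        # Analytic gradient of mean ||R(theta) p - q||^2 with respect to theta.
        grad = 2.0 * np.mean(np.sum(residual * (points @ d_rot.T), axis=1))
        theta -= lr * grad
    return theta

# Synthetic correspondences generated with a known rotation (toy data, not measurements).
rng = np.random.default_rng(0)
points = rng.normal(size=(50, 2))
true_theta = 0.3
observed = points @ rotation(true_theta).T
estimate = fit_rotation(points, observed)
```

In a differentiable rendering setting, the analytic gradient would typically be replaced by automatic differentiation through the renderer, and the single angle by the full set of rotation, translation, and shape parameters.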
