Jiawu Xu

Deep Decomposition and Bilinear Pooling Network for Blind Night-Time Image Quality Evaluation

May 12, 2022

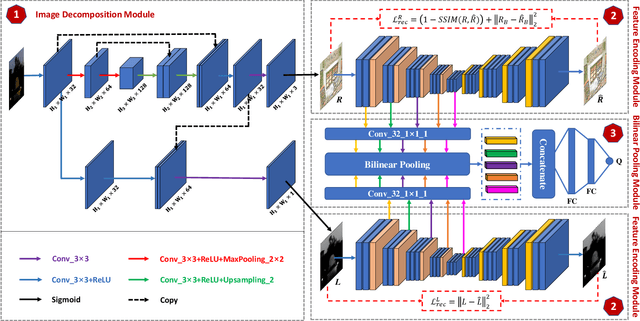

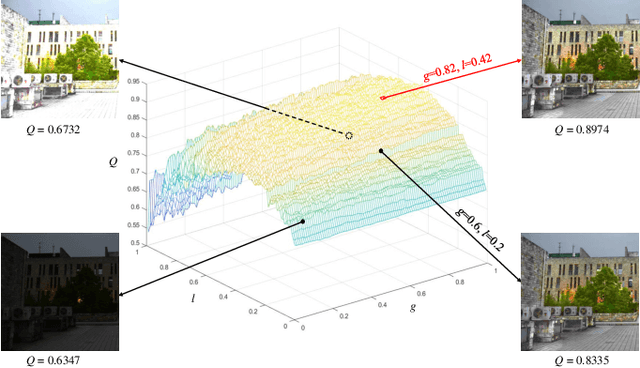

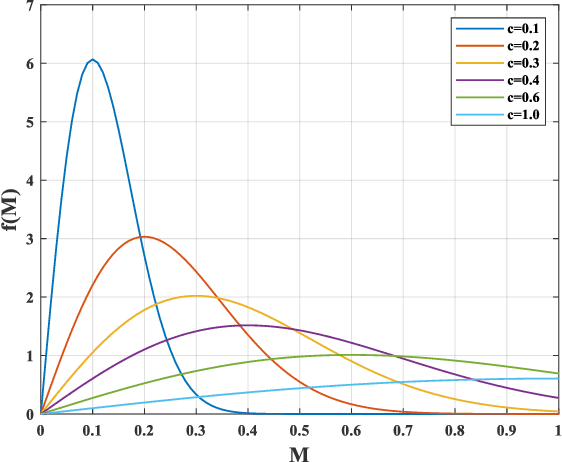

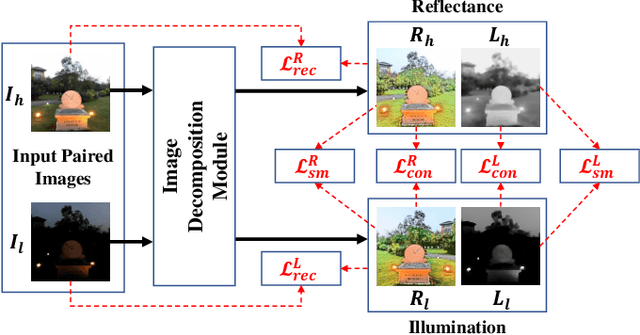

Abstract: Blind image quality assessment (BIQA), which aims to accurately predict image quality without any pristine reference information, has attracted considerable attention over the past decades. Deep neural networks in particular have driven substantial progress. However, BIQA for night-time images (NTIs) remains less investigated. NTIs usually suffer from complicated authentic distortions such as reduced visibility, low contrast, additive noise, and color distortions, and these diverse degradations make it particularly challenging to design an effective deep neural network for blind NTI quality evaluation (NTIQE). In this paper, we propose a novel deep decomposition and bilinear pooling network (DDB-Net) to better address this issue. The DDB-Net contains three modules: an image decomposition module, a feature encoding module, and a bilinear pooling module. The image decomposition module is inspired by the Retinex theory and decouples the input NTI into an illumination layer component, which carries illumination information, and a reflectance layer component, which carries content information. The feature encoding module then learns multi-scale feature representations of the degradations rooted in each of the two decoupled components separately. Finally, by modeling illumination-related and content-related degradations as two-factor variations, the two multi-scale feature sets are bilinearly pooled and concatenated to form a unified representation for quality prediction. Extensive experiments on two publicly available night-time image databases validate the superiority of the proposed DDB-Net.
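The bilinear pooling step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not give layer sizes or normalization details, so the function names `bilinear_pool` and `pooled_representation`, the pairing of illumination and reflectance features scale by scale, the signed square root with L2 normalization (a common choice in the bilinear pooling literature), and the toy feature values are all assumptions. Plain Python lists are used so the example runs without dependencies; each feature map is a list of channels, and each channel is a flat list of spatial responses.

```python
import math


def bilinear_pool(feat_a, feat_b, eps=1e-12):
    """Bilinearly pool two feature maps that share the same spatial locations.

    feat_a: list of Ca channels, each a flat list of N spatial values.
    feat_b: list of Cb channels, each a flat list of N spatial values.
    Returns a flat list of length Ca * Cb.
    """
    n = len(feat_a[0])
    # Average over locations of the per-location outer product of the
    # two channel vectors: one entry per (channel_a, channel_b) pair.
    pooled = [
        sum(x * y for x, y in zip(ch_a, ch_b)) / n
        for ch_a in feat_a
        for ch_b in feat_b
    ]
    # Assumed post-processing: signed square root, then L2 normalization.
    signed = [math.copysign(math.sqrt(abs(v)), v) for v in pooled]
    norm = math.sqrt(sum(v * v for v in signed))
    return [v / (norm + eps) for v in signed]


def pooled_representation(illum_feats, refl_feats):
    """Pool each scale separately and concatenate the results."""
    representation = []
    for illum, refl in zip(illum_feats, refl_feats):
        representation.extend(bilinear_pool(illum, refl))
    return representation


# Hypothetical toy features at two scales.
# Scale 1: 2 channels x 4 locations. Scale 2: 3 channels x 2 locations.
illum_feats = [
    [[0.1, 0.4, 0.3, 0.2], [0.9, 0.8, 0.7, 0.6]],
    [[0.5, 0.1], [0.2, 0.3], [0.4, 0.6]],
]
refl_feats = [
    [[1.0, 0.0, -1.0, 0.5], [0.2, 0.2, 0.2, 0.2]],
    [[0.3, -0.3], [0.7, 0.1], [0.0, 0.9]],
]

representation = pooled_representation(illum_feats, refl_feats)
print(len(representation))  # 2*2 + 3*3 = 13
```

In a full model, a vector like `representation` would feed a regression head that outputs the quality score. The outer product is what lets the representation capture pairwise interactions between illumination-related and content-related features, which is the sense in which the two are treated as two-factor variations.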
