Jianrong Yan

Global-Local Dynamic Feature Alignment Network for Person Re-Identification

Sep 13, 2021

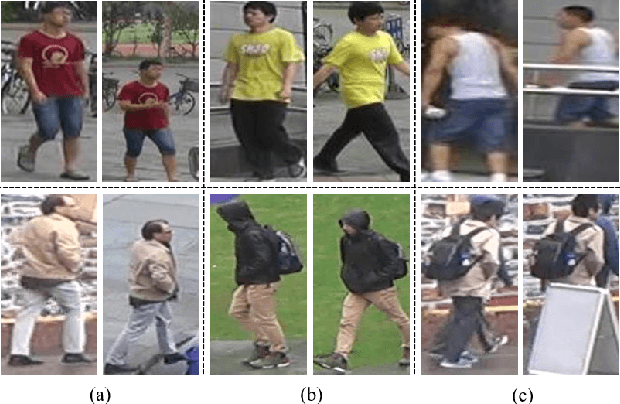

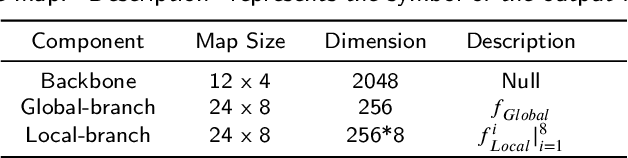

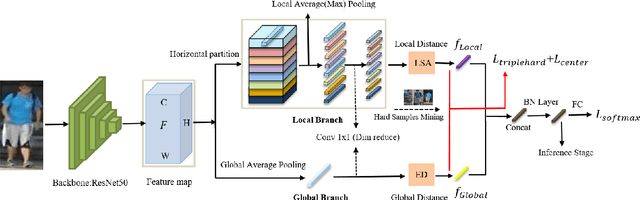

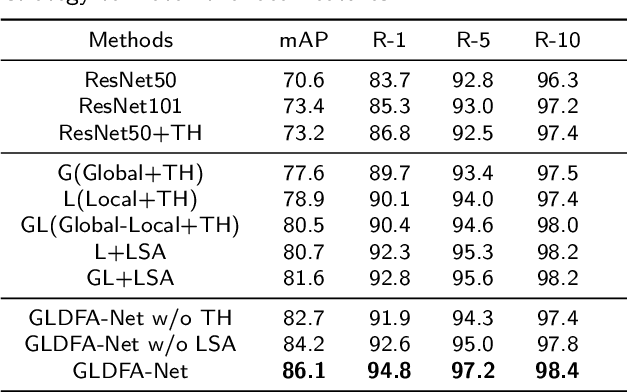

Abstract: The misalignment of human images caused by pedestrian detection bounding box errors or partial occlusions is one of the main challenges in person Re-Identification (Re-ID) tasks. Previous local-based methods mainly focus on learning local features in predefined semantic regions of pedestrians, and usually either use local hard alignment or introduce auxiliary information, such as human pose key points, to match local features. These methods are often not applicable when large scene differences are encountered. To address these problems, we propose a simple and efficient Local Sliding Alignment (LSA) strategy that dynamically aligns the local features of two images by setting a sliding window on the local stripes of the pedestrian. LSA can effectively suppress spatial misalignment and does not require extra supervision information. We then design a Global-Local Dynamic Feature Alignment Network (GLDFA-Net) framework, which contains both global and local branches. We introduce LSA into the local branch of GLDFA-Net to guide the computation of distance metrics, which further improves accuracy in the testing phase. Experiments on several mainstream evaluation datasets, including Market-1501, DukeMTMC-reID, and CUHK03, show that our method achieves accuracy competitive with several state-of-the-art person Re-ID methods. In particular, it achieves 86.1% mAP and 94.8% Rank-1 accuracy on Market-1501.
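The abstract does not spell out how LSA computes its distance, so the following is only a minimal sketch of one plausible reading: each horizontal stripe of one image is compared against the stripes of the other image that fall inside a small vertical window, and the best match is kept. The function names, the `window` parameter, the Euclidean metric, and the averaging over stripes are all assumptions made for illustration and are not taken from the paper.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def sliding_aligned_distance(stripes_a, stripes_b, window=1):
    """Hypothetical sliding-window stripe distance (not the paper's exact rule).

    stripes_a, stripes_b: lists of per-stripe feature vectors, ordered top to bottom.
    window: how many stripes above or below a stripe may be matched.
            window=0 reduces to hard alignment (stripe i vs. stripe i only).
    """
    if len(stripes_a) != len(stripes_b):
        raise ValueError("both images must have the same number of stripes")
    n = len(stripes_a)
    total = 0.0
    for i, feat_a in enumerate(stripes_a):
        lo = max(0, i - window)
        hi = min(n, i + window + 1)
        # Keep the closest stripe of image B inside the window around position i.
        total += min(euclidean(feat_a, stripes_b[j]) for j in range(lo, hi))
    return total / n


# Toy example: image B shows the same stripes as image A, shifted down by one.
a = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
b = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

hard = sliding_aligned_distance(a, b, window=0)  # rigid stripe-to-stripe matching
soft = sliding_aligned_distance(a, b, window=1)  # one-stripe sliding window
print(hard, soft)
```

In this toy case the windowed distance is smaller than the hard-aligned one, because a one-stripe vertical shift is absorbed by the window. This is the kind of spatial misalignment the abstract says LSA is meant to suppress, although the actual method may differ in how it selects and aggregates matches.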
