Ji Joong Moon

Deep Neural Network Models Trained With A Fixed Random Classifier Transfer Better Across Domains

Feb 28, 2024

Abstract: The recently discovered Neural Collapse (NC) phenomenon states that the last-layer weights of Deep Neural Networks (DNNs) converge to the so-called Equiangular Tight Frame (ETF) simplex at the terminal phase of their training. This ETF geometry is equivalent to vanishing within-class variability of the last-layer activations. Inspired by NC properties, we explore in this paper the transferability of DNN models trained with their last-layer weights fixed according to an ETF. This enforces class separation by eliminating class covariance information, effectively providing implicit regularization. We show that DNN models trained with such a fixed classifier significantly improve transfer performance, particularly on out-of-domain datasets. On a broad range of fine-grained image classification datasets, our approach outperforms i) baseline methods that do not perform any covariance regularization (by up to 22%), as well as ii) methods that explicitly whiten the covariance of activations throughout training (by up to 19%). Our findings suggest that DNNs trained with fixed ETF classifiers offer a powerful mechanism for improving transfer learning across domains.
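
For readers unfamiliar with the geometry mentioned in the abstract, the following is a minimal sketch of how a simplex ETF weight matrix can be built with NumPy, using the standard textbook construction. The function name `simplex_etf`, the dimensions, and the random seed are illustrative assumptions; the paper's own construction and scaling may differ.

```python
import numpy as np


def simplex_etf(num_classes: int, feat_dim: int, seed: int = 0) -> np.ndarray:
    """Return a (feat_dim, num_classes) matrix whose columns form a simplex ETF.

    Hypothetical helper for illustration; not taken from the paper's code.
    """
    if feat_dim < num_classes:
        raise ValueError("this sketch assumes feat_dim >= num_classes")
    rng = np.random.default_rng(seed)
    # Random matrix with orthonormal columns (U^T U = I) via reduced QR.
    u, _ = np.linalg.qr(rng.standard_normal((feat_dim, num_classes)))
    k = num_classes
    # Centering matrix I - (1/K) 1 1^T removes the mean direction.
    centering = np.eye(k) - np.ones((k, k)) / k
    return np.sqrt(k / (k - 1)) * (u @ centering)


# Example: 10 classes, 64-dimensional features (assumed sizes).
W = simplex_etf(num_classes=10, feat_dim=64)
gram = W.T @ W  # unit diagonal, off-diagonal entries equal to -1/(K-1)
```

In a fixed-classifier setup, a matrix like `W` would be kept frozen during training, with logits computed as `features @ W`, so that only the feature extractor is updated.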

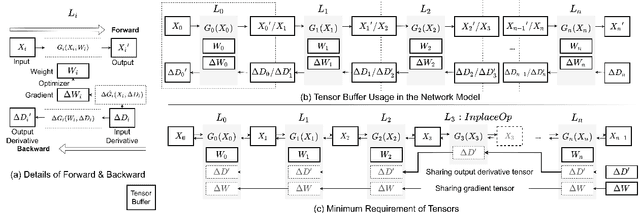

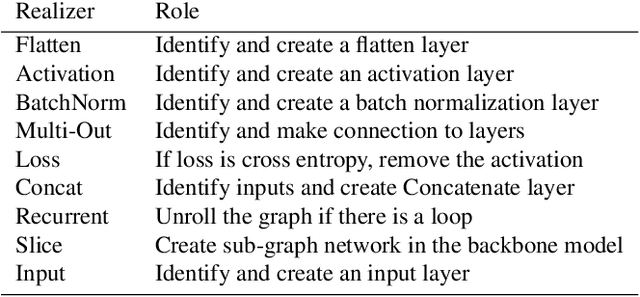

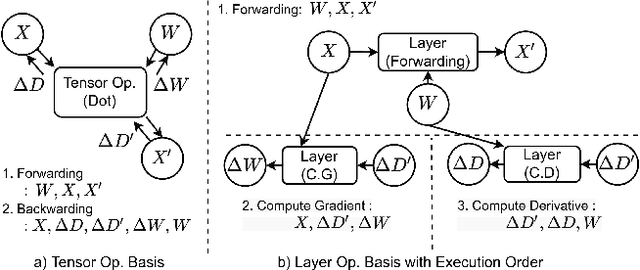

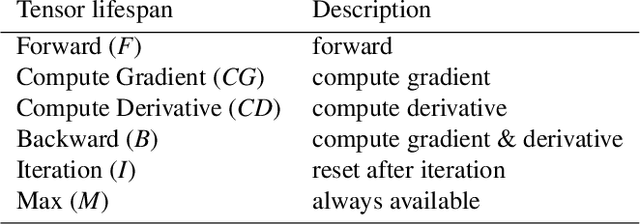

NNTrainer: Light-Weight On-Device Training Framework

Jun 09, 2022

Abstract: Modern consumer electronic devices have adopted deep learning-based intelligence services for their key features. Vendors have recently started to execute intelligence services on devices to keep personal data on the devices and to reduce network and cloud costs. We see this trend as an opportunity to personalize intelligence services by updating neural networks with user data without exposing the data outside the devices: on-device training. For example, we may add a new class ("my dog, Alpha") for robotic vacuums, adapt speech recognition to the user's accent, or let text-to-speech speak as if the user were speaking. However, the resource limitations of target devices pose significant difficulties. We propose NNTrainer, a light-weight on-device training framework. We describe the optimization techniques for neural networks implemented by NNTrainer, which are evaluated alongside conventional techniques. The evaluations show that NNTrainer can reduce memory consumption down to 1/28 without deteriorating accuracy or training time, and that it effectively personalizes applications on devices. NNTrainer is cross-platform, practical open source software that is being deployed to millions of devices in the authors' affiliation.

NNStreamer: Stream Processing Paradigm for Neural Networks, Toward Efficient Development and Execution of On-Device AI Applications

Jan 12, 2019

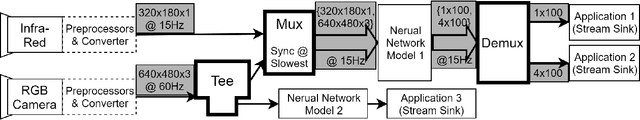

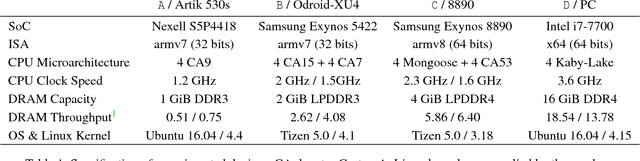

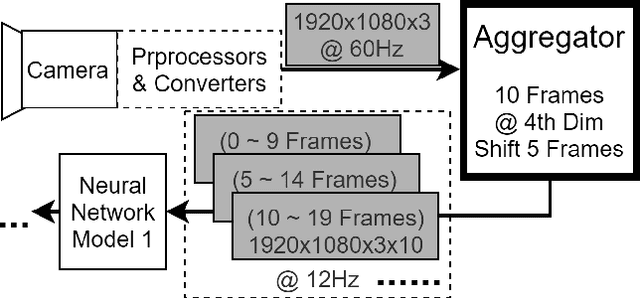

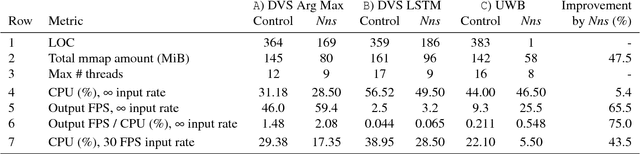

Abstract: We propose NNStreamer, a software system that handles neural networks as filters of stream pipelines, applying the stream processing paradigm to neural network applications. A new trend accompanying the spread of deep neural network applications is on-device AI, i.e., processing neural networks directly on mobile devices or edge/IoT devices instead of cloud servers. Emerging privacy issues, data transmission costs, and operational costs signify the need for on-device AI, especially when a huge number of devices with real-time data processing are deployed. NNStreamer efficiently handles neural networks with complex data stream pipelines on devices, improving the overall performance significantly with minimal effort. In addition, NNStreamer simplifies neural network pipeline implementations and allows off-the-shelf multimedia stream filters to be reused directly, which reduces development costs significantly. NNStreamer is already being deployed with a product that will be released soon, and it is open source software applicable to a wide range of hardware architectures and software platforms.
